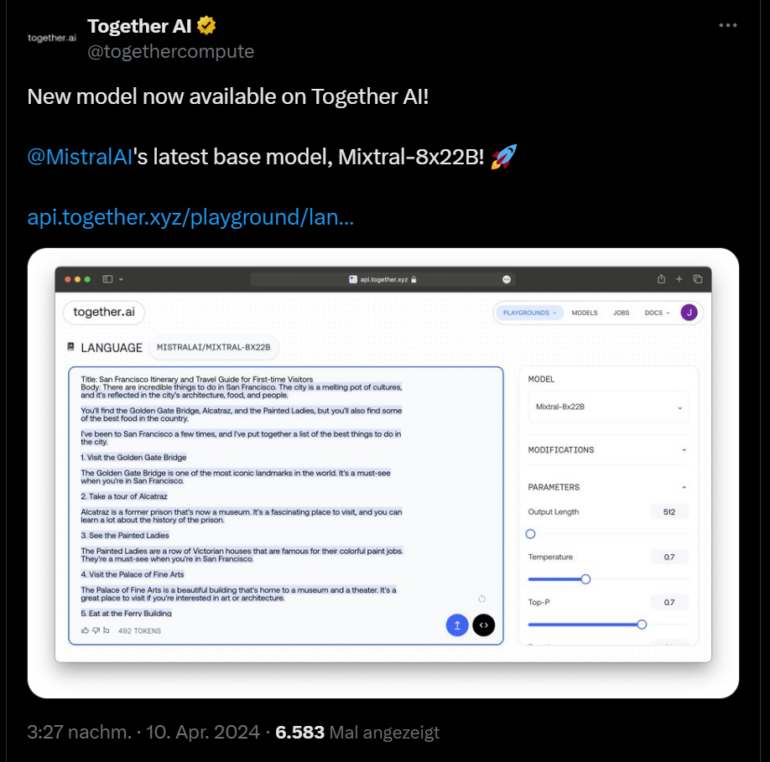

Paris-based AI startup Mistral has released Mixtral-8x22B MoE, a new open language model, via a torrent link. An official announcement with more details is expected to follow. According to early users, the model offers a 64,000-token context window and requires 258 gigabytes of VRAM. Like Mixtral-8x7B, the new model uses a mixture-of-experts architecture. You can try it out at Together AI.
