Multimodal AI: Between dietary advice and surveillance dystopia

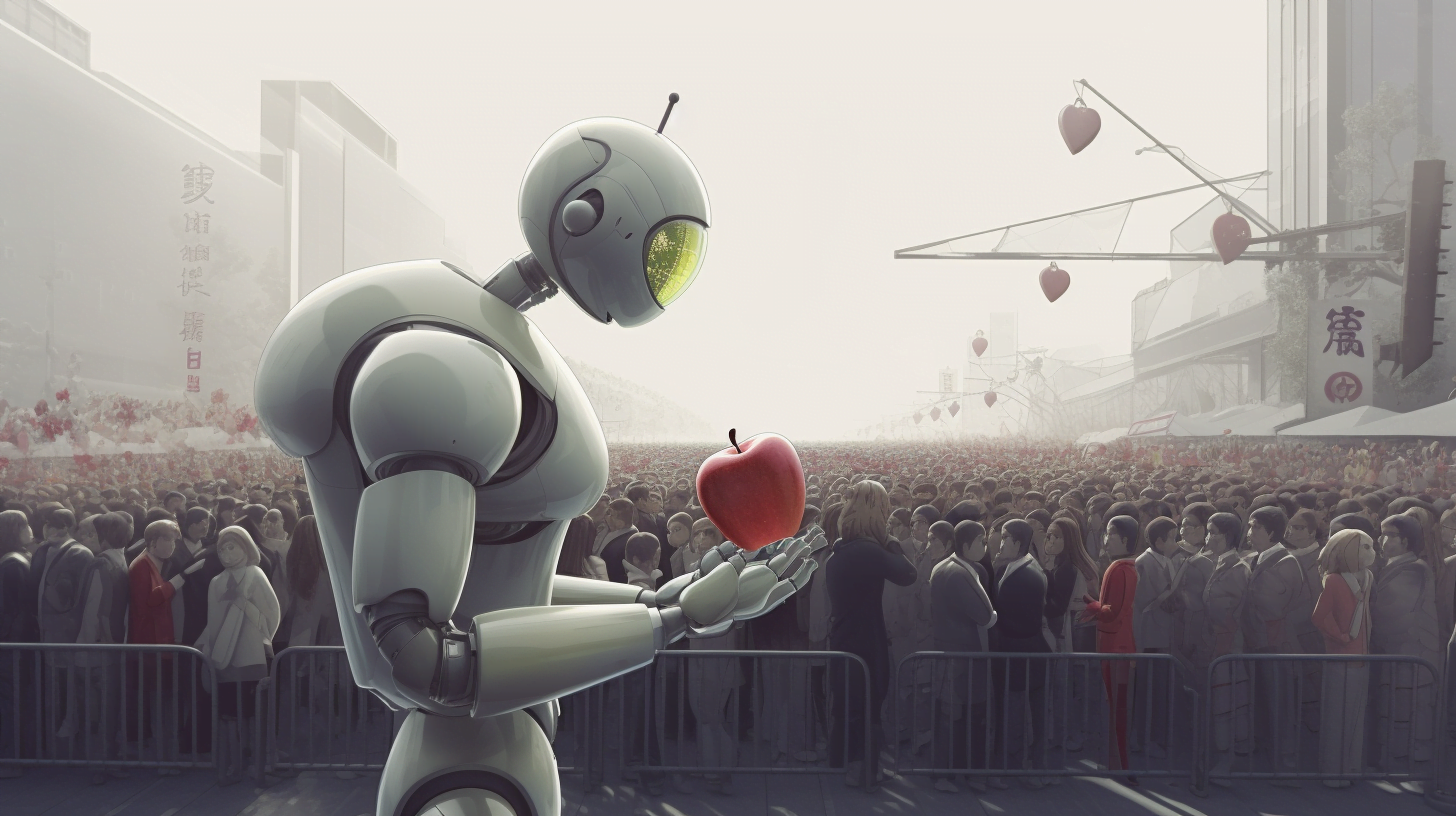

DetGPT gives a preview of the AI applications that will be possible with multimodal models in the future - and not just the good ones.

At the GPT-4 launch, OpenAI demonstrated some multimodal capabilities, including converting a photographed, hand-sketched web design into code and answering questions about images, a capability that is already available through the "Be My Eyes" application. Although these capabilities are not yet widely available, open-source models such as miniGPT-4 have provided a first glimpse of them.

Researchers at the Hong Kong University of Science and Technology and the University of Hong Kong now present DetGPT, a more powerful alternative to miniGPT-4. The multimodal AI model combines the BLIP-2 visual encoder with a 13-billion-parameter language model from the Robin or Vicuna family and a pre-trained detector (Grounding DINO).

DetGPT combines language model capabilities with image recognition

DetGPT focuses on implementing a "take a photo and ask" capability that allows users to request specific information or actions related to objects in an image. The model goes beyond existing alternatives such as miniGPT-4 by enabling accurate localization of objects and understanding of complex human commands through a large language model.

In one example, the team shows DetGPT a photo of a kitchen in which no drink is visible. Given the statement "I want a cold drink," the model identifies the refrigerator as the best option. In response to "I want to wake up in the morning," the system marks the alarm clock on a cluttered table, and in response to "Which fruits help with high blood pressure?" it marks the fruits on a market stall that might help.
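The two-step flow behind these examples, in which a language model first reasons about which objects satisfy the request and a detector then localizes them, can be illustrated with a minimal Python sketch. It is a toy under stated assumptions, not DetGPT's code: the `Box` type, the `KNOWLEDGE` lookup table (a stand-in for the language model), and the functions `reason_target_objects`, `detect`, and `take_photo_and_ask` are all hypothetical names, and the "image" is a hand-written list of labeled boxes rather than real pixels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """A labeled bounding box (hypothetical type for this sketch)."""
    label: str
    x: int
    y: int
    w: int
    h: int


# Toy stand-in for the language model's reasoning (assumption):
# maps an instruction to the object categories that could satisfy it.
KNOWLEDGE = {
    "i want a cold drink": ["refrigerator"],
    "i want to wake up in the morning": ["alarm clock"],
}


def reason_target_objects(instruction: str) -> list[str]:
    """Step 1: decide which object categories are relevant to the request."""
    return KNOWLEDGE.get(instruction.strip().lower(), [])


def detect(scene: list[Box], labels: list[str]) -> list[Box]:
    """Step 2: return the boxes in the scene whose label was requested.

    A real system would run an open-vocabulary detector on the image here.
    """
    wanted = set(labels)
    return [box for box in scene if box.label in wanted]


def take_photo_and_ask(scene: list[Box], instruction: str) -> list[Box]:
    """Chain reasoning and detection for one instruction."""
    return detect(scene, reason_target_objects(instruction))


# A kitchen with no drink in sight, as in the article's example.
kitchen = [
    Box("refrigerator", 10, 20, 200, 400),
    Box("sink", 250, 180, 120, 60),
]
result = take_photo_and_ask(kitchen, "I want a cold drink")
print([box.label for box in result])
```

The point of the split is that the detector never sees the user's wording. It only receives the object names the reasoning step produced, which is why a request that names no object at all can still end in a marked region of the image.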

For training, the team built a dataset of 30,000 examples from 5,000 images in the COCO dataset, with the accompanying image instructions generated by ChatGPT.

DetGPT for surveillance?

Models such as DetGPT, miniGPT-4, a multimodal GPT-4, or robotics-specific models such as PaLM-E show the potential of additional modalities in large AI models. How far their use will extend is still unclear, but like language models, they are likely to change many jobs - and possibly simplify government surveillance.

A Reddit post in which one of the researchers involved presents the work includes the following passage:

> Moreover, the examples of object categories provided by humans are not always comprehensive. For instance, if monitoring is required to detect behaviors that violate public order relative to public places, humans may only be able to provide a few simple scenarios, such as holding a knife or smoking. However, if the question "detect behaviors that violate public order" is directly posed to the detection model, the model can think and reason based on its own knowledge, thus capturing more unacceptable behaviors and generalizing to more relevant categories that need to be detected.

This would first require a language model that is fine-tuned to the particular society and its norms. The result would be an AI system that recognizes undesirable behavior much more dynamically: instead of only matching it against statically labeled patterns, it could also infer it from accompanying factors.

The code and dataset are available on GitHub, as is a demo. The model is not yet available.
