Nvidia's 3D MoMa generates a complete 3D model from just under 100 photos within an hour - including textures and lighting.

Advances in applying artificial intelligence to computer graphics allow systems to learn 3D representations from 2D photos, with results that can beat classic approaches such as photogrammetry.

So-called Neural Radiance Fields (NeRFs) deliver particularly impressive results. They generate photorealistic renderings of objects, landscapes or interiors for Google's Immersive View.

NeRFs do have weaknesses, for example when representing motion, or when a usable 3D object with mesh, texture, and lighting must be created from the learned neural representation. Researchers at Google, among others, are trying to solve the motion problem with HumanNeRF.

For the second issue, there are already initial solutions, but extracting a 3D object from the neural network is still tedious.

Nvidia's 3D MoMa does without NeRFs

Efficient variants of so-called inverse rendering, which generates traditional 3D models from photos, could greatly speed up workflows in the graphics industry.

Researchers at Nvidia are now demonstrating 3D MoMa, a neural inverse rendering method that generates usable 3D models significantly faster than alternative methods - including those that use NeRFs.

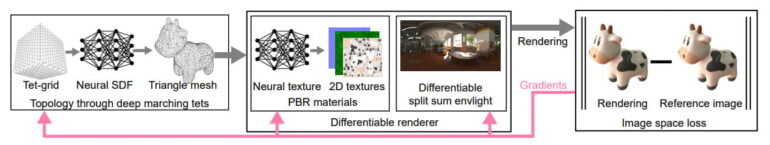

Rather than relying on a NeRF, Nvidia's 3D MoMa learns topology, materials and ambient lighting from 2D images with separate networks, including one for textures and one that learns signed distance field (SDF) values on a deformable tetrahedral mesh, among other things.

3D MoMa thus directly outputs a 3D model in the form of triangular meshes and textured materials, which can then be edited in common 3D tools. Training on about 100 photos takes about one hour on an Nvidia Tensor Core GPU.
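To make the link between SDF values on a tetrahedral mesh and an explicit triangle mesh more concrete, here is a minimal Python sketch. It is an illustration only, not Nvidia's implementation: the function names (`sphere_sdf`, `edge_crossing`, `tetra_surface_points`) are hypothetical, and an analytic sphere stands in for the SDF values that 3D MoMa would learn from photos. The idea is that the surface lies wherever the signed distance changes sign along an edge of a tetrahedron, and those crossing points become the vertices of the output triangles.

```python
import math

def sphere_sdf(p, radius=1.0):
    """Signed distance from point p to a sphere centred at the origin.
    Negative inside, positive outside. Stand-in for a learned SDF."""
    return math.sqrt(sum(c * c for c in p)) - radius

def edge_crossing(p_a, p_b, d_a, d_b):
    """Return the point on edge (p_a, p_b) where the SDF is zero,
    using linear interpolation, or None if the sign does not change."""
    if (d_a < 0) == (d_b < 0):
        return None  # both endpoints on the same side of the surface
    t = d_a / (d_a - d_b)  # fraction of the way from p_a to p_b
    return tuple(a + t * (b - a) for a, b in zip(p_a, p_b))

def tetra_surface_points(vertices, sdf):
    """Collect the surface crossings on the six edges of one tetrahedron."""
    dists = [sdf(v) for v in vertices]
    points = []
    for i in range(4):
        for j in range(i + 1, 4):
            p = edge_crossing(vertices[i], vertices[j], dists[i], dists[j])
            if p is not None:
                points.append(p)
    return points

# One tetrahedron with a corner inside the unit sphere and three outside.
tetra = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 2.0)]
surface_points = tetra_surface_points(tetra, sphere_sdf)
```

In this toy case one corner is inside the sphere and three are outside, so the three crossing points form a single triangle. Repeating this over every tetrahedron in a grid yields a full triangle mesh, which is the kind of output that standard 3D tools can edit.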

Alternative methods that rely on NeRFs often require one or more days of training. Nvidia's Instant NeRF is significantly faster and learns a 3D representation in a few minutes, but it does not support decomposition of geometry, materials and lighting.

Video: Nvidia

Inverse rendering is the "holy grail"

David Luebke, vice president of graphics research at Nvidia, sees 3D MoMa as an important step toward rapidly generating 3D models that creatives could import, edit and extend without limitations in existing tools. Inverse rendering has long been the "holy grail" of unifying computer vision and computer graphics, Luebke said.

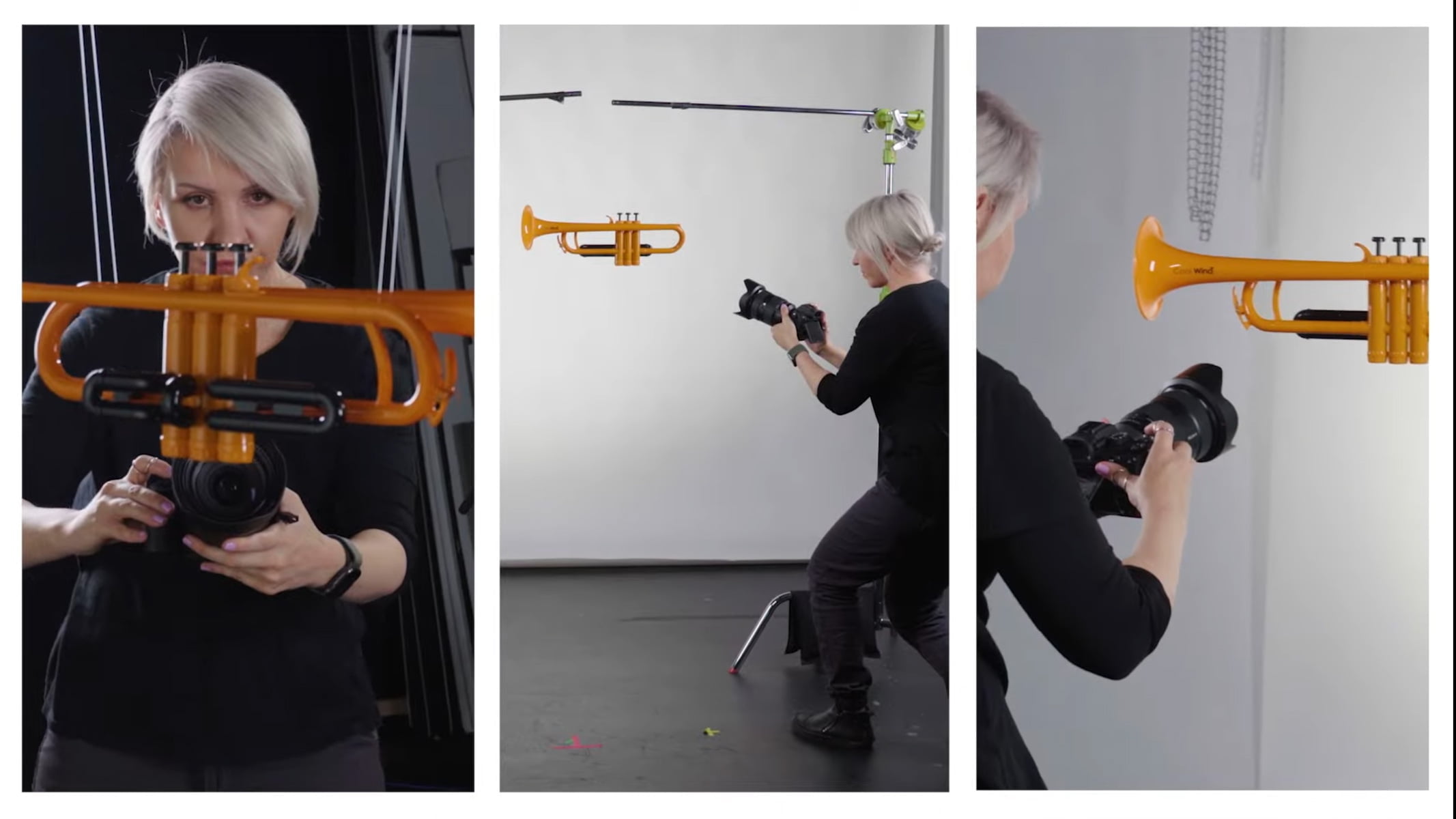

For a showcase, Nvidia researchers collected nearly 100 photos of each of five jazz band instruments and used the 3D MoMa pipeline to create and manipulate 3D models from them.

The results can be seen in the video above, and they're not perfect yet - but further improvements are on the horizon and could soon change the modeling process as much as the advent of photogrammetry already has.