Nvidia positions GR00T N1 to dominate robotics ecosystem

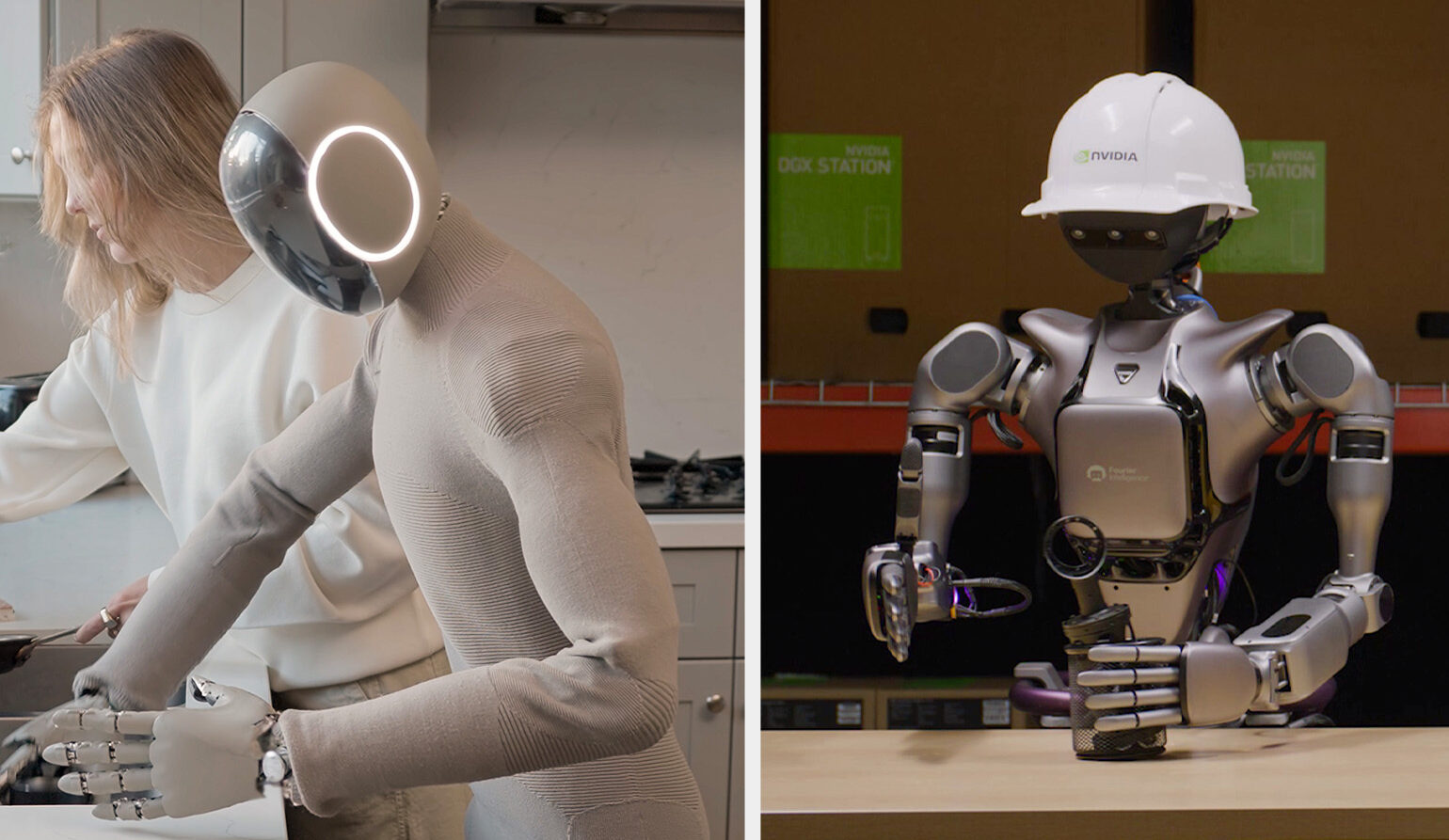

Nvidia's introduction of Isaac GR00T N1 at GTC 2025 reveals the company's strategy to control every layer of robotics development, from hardware to software.

The system, which builds on the company's earlier AI agents Voyager and Eureka, represents Nvidia's most ambitious move yet to become the standard platform for robot development.

The model uses a two-tier approach similar to Figure's Helix. System 2 functions as a vision-language model with memory and planning capabilities, handling the "slow" cognitive processes of perception, reasoning, and planning. System 1 operates as a high-frequency motor control network, translating plans into real-time movement commands.

For example, when a GR00T-powered robot needs to grab a box from a shelf, System 2 processes the scene and develops the plan, while System 1 handles the physical execution, from walking to coordinating precise hand movements. Training combines reinforcement learning in simulation with imitation learning from human demonstrations.
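The division of labor between the two systems can be illustrated with a minimal Python sketch of a two-rate control loop. This is not Nvidia's implementation. The class names, the 10 Hz and 100 Hz rates, the midpoint-waypoint "planner" and the proportional "controller" are all hypothetical stand-ins for a vision-language model and a learned motor policy. The sketch only shows the structural idea: the slow System 2 refreshes the plan every few ticks, while the fast System 1 issues a command on every tick using the most recent plan.

```python
from dataclasses import dataclass

# Hypothetical rates chosen for illustration only; not GR00T N1's actual values.
SLOW_HZ = 10    # System 2: deliberate planning
FAST_HZ = 100   # System 1: motor control


@dataclass
class Plan:
    subgoal: str
    target: float  # 1-D target position standing in for a full action spec


class System2Planner:
    """Hypothetical stand-in for a vision-language model: observation -> subgoal."""

    def plan(self, observation: float, goal: float) -> Plan:
        # A real VLM would reason over images and language; this toy version
        # simply picks a waypoint halfway between the current position and the goal.
        waypoint = observation + 0.5 * (goal - observation)
        return Plan(subgoal="move toward box", target=waypoint)


class System1Controller:
    """Hypothetical stand-in for a high-frequency motor policy (proportional control)."""

    def __init__(self, gain: float = 5.0):
        self.gain = gain

    def act(self, position: float, plan: Plan) -> float:
        # Velocity command proportional to the distance from the current waypoint.
        return self.gain * (plan.target - position)


def run(goal: float, seconds: float = 3.0) -> float:
    planner, controller = System2Planner(), System1Controller()
    dt = 1.0 / FAST_HZ
    steps_per_plan = FAST_HZ // SLOW_HZ  # fast ticks per slow planning cycle
    position = 0.0
    plan = planner.plan(position, goal)
    for step in range(int(seconds * FAST_HZ)):
        if step % steps_per_plan == 0:
            plan = planner.plan(position, goal)    # slow loop: replan
        velocity = controller.act(position, plan)  # fast loop: every tick
        position += velocity * dt                  # integrate toy 1-D dynamics
    return position
```

Calling `run(1.0)` drives the toy "robot" close to the goal position of 1.0 within the simulated three seconds. The design choice worth noting is that the controller never waits for the planner: it keeps acting on the last plan it received, which is the property that lets a slow reasoning model coexist with real-time motor control.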

Controlling the data pipeline

Nvidia addresses the massive data requirements of embodied AI by pushing developers toward its proprietary tools. The Isaac Lab simulator can run thousands of parallel robot simulations through Omniverse and Isaac Sim, though the company acknowledges that differences between simulated and real-world behavior haven't been eliminated.

The ecosystem extends through the Newton Physics Engine, developed with Google DeepMind and Disney, and includes workflow templates like GR00T-Teleop for remote operation and GR00T-Dexterity for fine motor skills. Partnerships with robotics startups such as 1X Technologies, whose humanoid "NEO" was demonstrated at GTC 2025, further extend Nvidia's influence.

From Minecraft to real-world robotics

Jim Fan, who completed his doctorate under AI pioneer Fei-Fei Li at Stanford and now co-leads Nvidia's Generalist Embodied Agent Research (GEAR) group, predicts a major breakthrough in robot foundation models within the next few years. He compares the potential impact to GPT-3's transformation of language processing.

The path to GR00T runs through two key projects from Fan's team. Voyager, developed as the first "lifelong learning Minecraft agent," showed how GPT-4 could enable an AI system to write and improve its own code while building a library of reusable skills. Eureka followed, demonstrating how combining generative AI with reinforcement learning could automate the design of reward functions for robot training.

Fan outlines three critical areas where foundation agents must generalize: available skills, compatible body types, and operational environments. Nvidia aims to control all three through its integrated approach, mirroring the strategy that made CUDA the dominant GPU computing platform, but with broader ambitions for robotics.

This comprehensive approach sets Nvidia apart from competitors like Google DeepMind's Robotic Transformer, which lacks full platform integration, and Tesla's closed Optimus project. While Nvidia makes core components publicly available, it maintains tight control over critical optimizations and hardware integration, ensuring its position at the center of the robotics ecosystem.
