Nvidia researcher says that "AI is the new graphics"

Nvidia is expanding its AI graphics technology with its new GeForce RTX 50 series cards, building on the foundation it laid years ago with DLSS. The latest desktop and laptop GPUs push even deeper into AI-assisted rendering.

The lineup includes the usual suspects: a $1,999 RTX 5090 and $999 RTX 5080 launching January 30th, with the 5070 Ti and 5070 following in February. Laptop versions are coming in March. The cards feature the new Blackwell architecture and GDDR7 memory, with the 5090 promising double the performance of the 4090 (if you can spare the 575 watts it needs, 125 watts more than the RTX 4090).

The real story here is how Nvidia is weaving AI into every aspect of graphics processing. According to Nvidia, these new GPUs are specifically optimized for AI to handle most of the heavy lifting, from ray tracing to rendering complex scenes and creating lifelike characters. Their RTX Kit aims to improve everything from geometry to textures, materials, and lighting, promising better visuals and performance while reducing artifacts, instability, and VRAM usage.

New RTX Neural Shaders embed tiny neural networks directly into programmable shaders, enabling specific AI-powered features: RTX Neural Texture Compression for storing textures more compactly, RTX Neural Materials for compressing complex shader code, and RTX Neural Radiance Cache for calculating indirect lighting.

Developers can train these neural networks on their own game data using the RTX Neural Shaders SDK, then run the trained models at runtime. Nvidia says the result is better-looking games with fewer artifacts and lower memory usage.
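To make the "train offline, evaluate at runtime" idea concrete, here is a minimal sketch in plain Python. It does not use the RTX Neural Shaders SDK or reflect Nvidia's actual network design; the network size, the 1D "texture", and all function names are assumptions chosen for illustration. It only shows the general pattern of fitting a very small network to some data and then evaluating it once per sample.

```python
import math
import random

HIDDEN = 8  # hypothetical size; the article only says the networks are "tiny"

def init_net(rng):
    """Random starting weights for a one-hidden-layer network (illustrative layout)."""
    return {
        "w1": [rng.uniform(-1, 1) for _ in range(HIDDEN)],
        "b1": [0.0] * HIDDEN,
        "w2": [rng.uniform(-1, 1) for _ in range(HIDDEN)],
        "b2": 0.0,
    }

def evaluate(net, u):
    """Runtime path: one forward pass per sample, as a shader would run per pixel."""
    hidden = [math.tanh(w * u + b) for w, b in zip(net["w1"], net["b1"])]
    return hidden, sum(w * h for w, h in zip(net["w2"], hidden)) + net["b2"]

def train_step(net, u, target, lr=0.05):
    """One gradient-descent step on squared error for a single sample."""
    hidden, out = evaluate(net, u)
    err = out - target
    for j, h in enumerate(hidden):
        # Gradient through tanh, computed with w2[j] before it is updated.
        grad_hidden = err * net["w2"][j] * (1.0 - h * h)
        net["w2"][j] -= lr * err * h
        net["w1"][j] -= lr * grad_hidden * u
        net["b1"][j] -= lr * grad_hidden
    net["b2"] -= lr * err

def mean_squared_error(net, samples):
    return sum((evaluate(net, u)[1] - t) ** 2 for u, t in samples) / len(samples)

# Stand-in "game data": 32 texels of a smooth 1D brightness pattern.
samples = [(i / 31, 0.5 + 0.5 * math.sin(2 * math.pi * i / 31)) for i in range(32)]

net = init_net(random.Random(0))
before = mean_squared_error(net, samples)
for _ in range(200):                      # offline training phase
    for u, t in samples:
        train_step(net, u, t)
after = mean_squared_error(net, samples)  # runtime would use these weights as-is
print(f"error before training: {before:.4f}, after: {after:.4f}")
```

In a real pipeline the training would happen during development and only the trained weights would ship with the game; the per-pixel work at runtime is the small forward pass in `evaluate`.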

Make digital humans look more human

Nvidia's also pushing AI into character rendering. Their new RTX Neural Faces technology uses generative AI to transform basic 3D face data into more natural-looking results. Instead of brute-forcing every detail, the AI infers realistic features from simpler input.

They're also rolling out the RTX Character Rendering SDK, focusing on more realistic hair and skin rendering through new GPU-accelerated techniques like Linear-Swept Spheres for hair and Subsurface Scattering for skin.

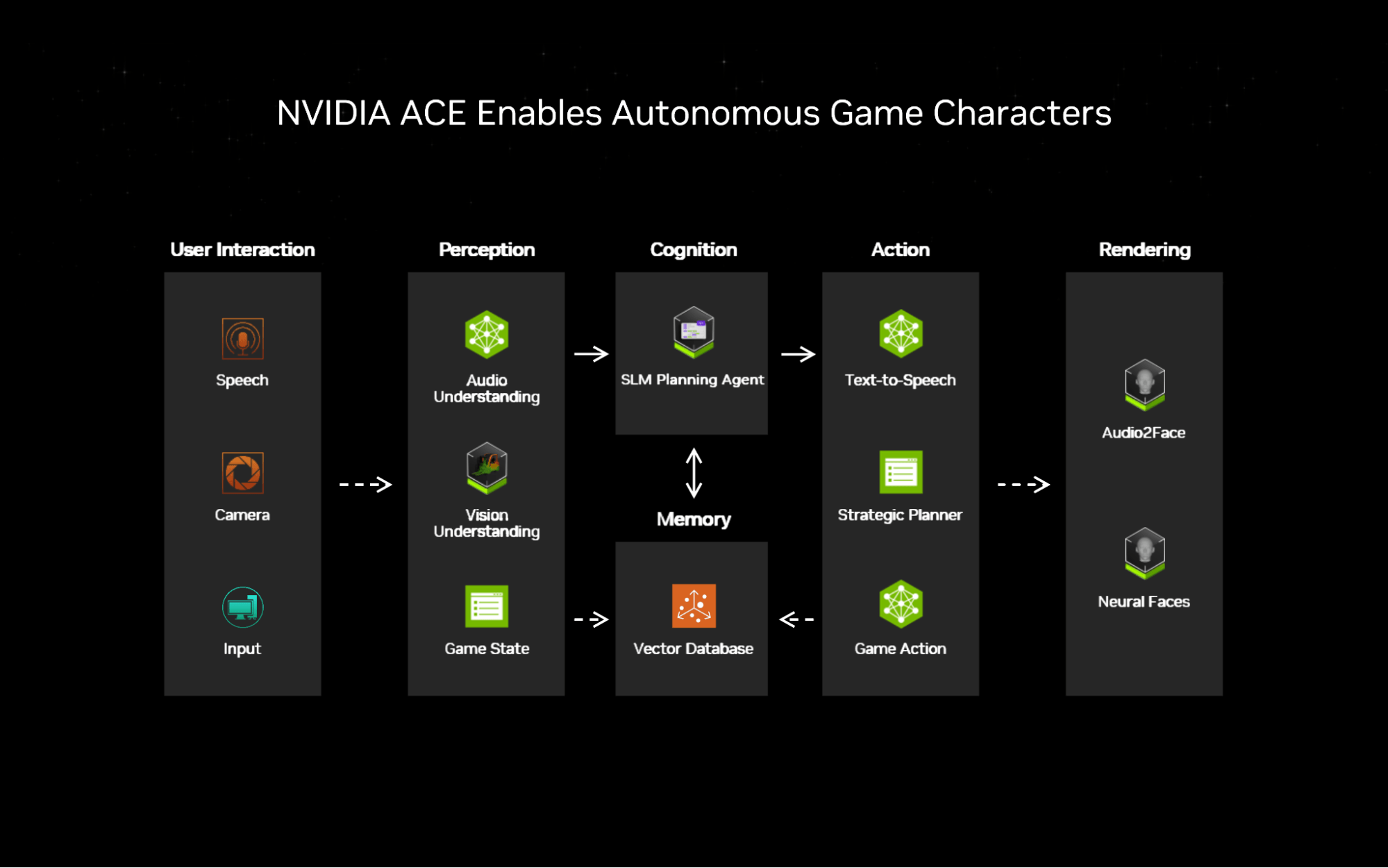

Nvidia's ACE (Avatar Cloud Engine) aims to create more intelligent, autonomous game characters using generative AI. The system processes speech, visual input, and game states to generate more natural interactions.

ACE uses small language models for planning and multimodal processing, allowing AI characters to understand both visual and audio cues. A new Audio2Face diffusion model promises better lip sync and emotional expression.

A new technology called RTX Mega Geometry promises to render worlds with hundreds of millions of triangles in real time, up to 100 times more than current methods. It uses smarter ways to organize and process these triangles on the GPU, which means less work for your CPU and better-looking ray-traced graphics.

DLSS 4: Now With Extra Frames

The latest version of Nvidia's DLSS technology can now generate up to three additional frames for each rendered frame. It uses a new transformer-based architecture to handle super resolution, ray reconstruction, and anti-aliasing.
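A quick back-of-the-envelope calculation shows what "up to three additional frames" means for the share of displayed pixels that are not traditionally rendered. The frame count comes from the article; the upscaling factor (rendering at half the output resolution per axis) is an assumption added here for illustration, and the function name is hypothetical.

```python
from fractions import Fraction

def generated_pixel_share(generated_frames_per_rendered, upscale_factor_per_axis):
    """Fraction of displayed pixels that did not come from traditional rendering.

    generated_frames_per_rendered: AI-generated frames per rendered frame.
    upscale_factor_per_axis: output resolution / render resolution along one axis
    (assumed value; 1 means no upscaling).
    """
    frames_rendered = Fraction(1, 1 + generated_frames_per_rendered)
    pixels_rendered = Fraction(1, upscale_factor_per_axis ** 2)
    return 1 - frames_rendered * pixels_rendered

# Three generated frames per rendered frame, 2x upscaling per axis (assumed).
share = generated_pixel_share(3, 2)
print(f"{float(share):.2%}")  # 93.75%
```

Under these assumptions only 1 in 16 displayed pixels is rendered conventionally. With frame generation alone and no upscaling, the generated share would be 75%.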

Alongside this, Reflex 2 adds something called Frame Warp, which updates frames with your most recent mouse movement right before they hit your screen, promising to cut input lag by up to 75% compared to regular rendering.
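The general idea of adjusting a finished frame to the latest input can be sketched as a simple late reprojection. This is not Nvidia's Frame Warp implementation: it assumes a pure horizontal camera pan, represents a frame as a list of pixel rows, and fills the uncovered edge by repeating the nearest pixel, a crude stand-in for whatever hole filling a real system uses. All names are hypothetical.

```python
def warp_frame(frame, dx, hole=None):
    """Shift every row by dx pixels (negative dx moves image content left)."""
    width = len(frame[0])
    warped = []
    for row in frame:
        new_row = [hole] * width
        for x, pixel in enumerate(row):
            nx = x + dx
            if 0 <= nx < width:
                new_row[nx] = pixel
        warped.append(new_row)
    return warped

def fill_holes(frame, hole=None):
    """Fill uncovered pixels with the nearest valid pixel in the same row."""
    filled = []
    for row in frame:
        valid = [x for x, p in enumerate(row) if p is not hole]
        if not valid:
            filled.append(list(row))  # nothing to copy from; leave the row as-is
            continue
        filled.append([
            p if p is not hole else row[min(valid, key=lambda v: abs(v - x))]
            for x, p in enumerate(row)
        ])
    return filled

# A 1x4 frame was rendered, then the mouse moved so the view pans right by one pixel:
# image content shifts left by one, exposing a hole at the right edge.
rendered = [[1, 2, 3, 4]]
warped = warp_frame(rendered, dx=-1)
print(warped)              # [[2, 3, 4, None]]
print(fill_holes(warped))  # [[2, 3, 4, 4]]
```

The point of the sketch is the ordering: the expensive render happens first, and the cheap shift is applied afterwards using input sampled as late as possible.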

So it's a lot: AI-powered shaders, smarter character rendering, better geometry processing, frame generation, and even AI-driven NPCs. As Nvidia researcher Jim Fan puts it: "Y'all expecting RTX 5090, cool specs and stuff. But do you fully internalize what Jensen said about graphics? That the new card uses neural nets to generate 90+% of the pixels for your games? Traditional ray-tracing algorithms only render ~10%, kind of a 'rough sketch', and then a generative model fills in the rest of fine details. In one forward pass. In real time. AI is the new graphics, ladies and gentlemen."
