Nvidia's EmerNeRF to make self-driving cars safer

EmerNeRF learns 3D representations of the real world to help robots and autonomous cars navigate safely.

Researchers from the University of Southern California, Georgia Institute of Technology, University of Toronto, Stanford University, Technion, and Nvidia have developed EmerNeRF. The AI model can use video recordings to independently recognize which parts of a traffic scene are dynamic and which are static.

For self-driving cars or other robots moving in the real world, it is crucial to recognize which elements in their environment are static and which are dynamic. This is the only way they can orient themselves in the world and interact with it safely. In autonomous driving, for example, traffic scenes are divided into static and dynamic objects, such as other vehicles. Today, however, this process usually requires human supervision, which is expensive and difficult to scale.

EmerNeRF learns self-supervised and outperforms supervised methods

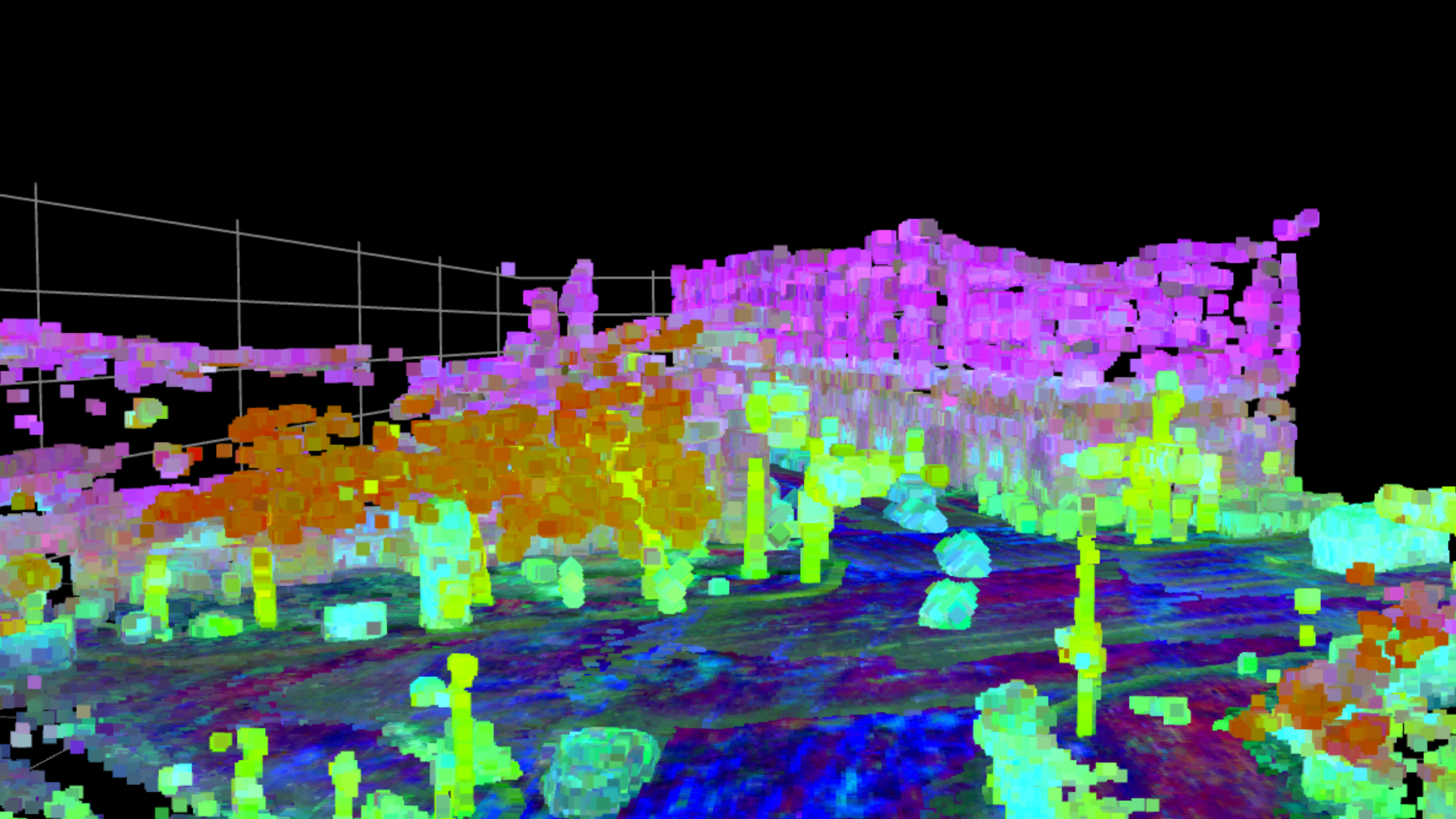

EmerNeRF, on the other hand, learns the classification completely self-supervised from camera and LiDAR images. For EmerNeRF, the researchers used the neural radiation fields of the same name: one for static and one for dynamic representations. The program optimizes these fields so that the replicas of the scenes look as realistic as possible - without any information about which objects are static or moving.

To better represent moving objects, EmerNeRF also calculates a flow field that shows how the objects move over time. With this motion information, it can combine data from multiple points in time to visualize moving objects in more detail.

Through training, EmerNeRF was then able to directly separate dynamic scenes, such as video footage of car journeys without labels, into static and moving elements. The team also enhanced EmerNeRF with parts of a basic model for 2D images, increasing its performance in recognizing objects in 3D environments by an average of almost 38 percent.

Video: Yang et al.

In a demanding benchmark with 120 driving scenes, EmerNeRF outperforms previous state-of-the-art methods such as HyperNeRF and D2NeRF.

More information, videos and the code are available on GitHub.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.