Open-source PixArt-δ image generator spits out high-resolution AI images in 0.5 seconds

Stable Diffusion may soon have some competition when it comes to open-source image generators. In its latest iteration, PixArt becomes faster and more accurate while maintaining a relatively high resolution.

In a paper, researchers from Huawei Noah's Ark Lab, Dalian University of Technology, Tsinghua University, and Hugging Face presented PixArt-δ (Delta), an advanced text-to-image synthesis framework designed to compete with the Stable Diffusion family.

This model is a significant improvement over the previous PixArt-α (Alpha) model, which was already able to quickly generate images with a resolution of 1024 x 1024 pixels.

High-resolution image generation in half a second

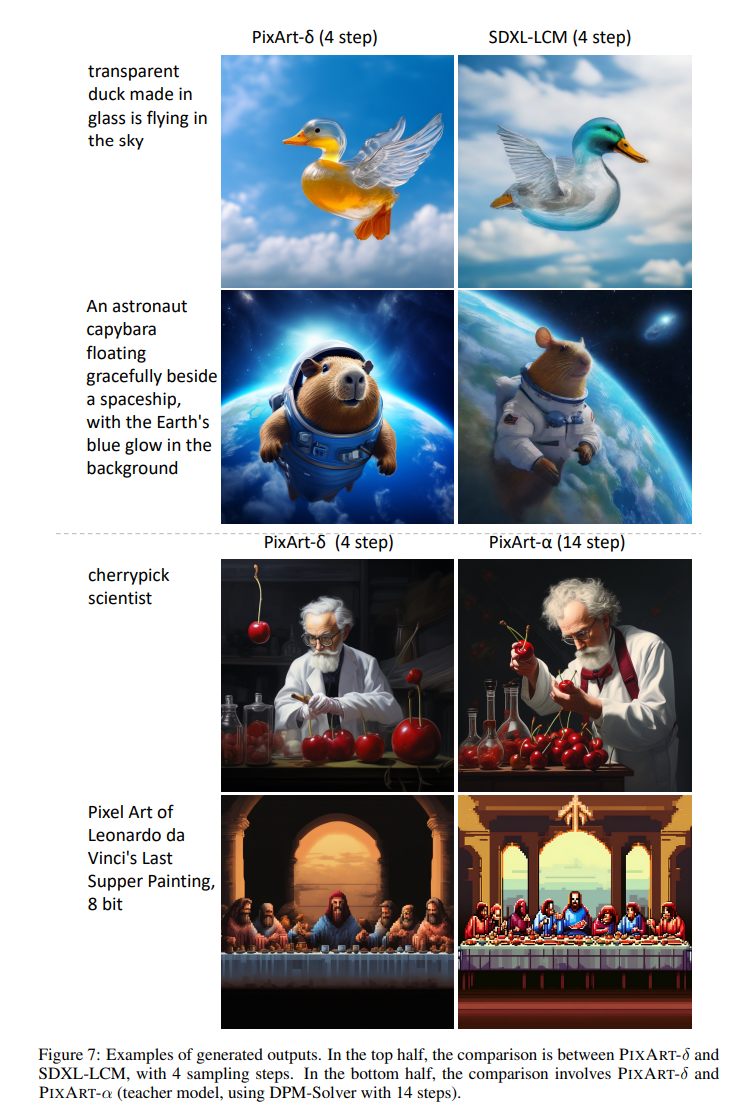

PixArt-δ integrates the Latent Consistency Model (LCM) and ControlNet into the PixArt-α model, significantly accelerating inference speed. The model can generate high-quality images with a resolution of 1,024 x 1,024 pixels in just two to four steps in as little as 0.5 seconds, seven times faster than PixArt-α.

SDXL Turbo, introduced by Stability AI in November 2023, can generate images of 512 x 512 pixels in just one step, or about 0.2 seconds.

However, PixArt-δ's results are higher resolution and seem more consistent compared to SDXL Turbo and a four-step variant of SDXL with LCM. The images appear to have fewer errors and the model follows the instructions more accurately.

The new PixArt model is designed to train efficiently on V100 GPUs with 32 GB of VRAM in less than a day. In addition, its 8-bit inference capability allows it to synthesize 1024-pixel images even on 8-GB GPUs, greatly improving its usability and accessibility.

More control over image generation

The integration of a ControlNet module into PixArt-δ allows finer control of text-to-image diffusion models using reference images. The researchers have introduced a novel ControlNet architecture specifically designed for transformer-based models that provide explicit controllability while maintaining high-quality image generation.

The researchers have published the weights for the ControlNet variant of PixArt-δ on Hugging Face. However, an online demo seems to be available only for PixArt-α with and without LCM.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.