Updated August 21, 2022:

Stable Diffusion is now available via a web interface. After logging in, you can generate images from text prompts, similar to DALL-E 2, and you have several additional options for adjusting the output. As with DALL-E 2, there are restrictions on prompts, for example those requesting sexual or violent images.

The Stable Diffusion model itself, which can run locally or in the cloud, will not have these restrictions. The model is expected to be released on GitHub in the next few days.

You can try the web-based version of Stable Diffusion for free. For the equivalent of just under $12, you can buy about 1,000 prompts; the actual number depends on the complexity of the calculations and the resolution of your images.

DreamStudio is the web interface for Stable Diffusion.

The original article is dated August 14, 2022:

Open-source rival for OpenAI's DALL-E runs on your graphics card

OpenAI's DALL-E 2 is getting free competition. Behind it are an open-source AI movement and the startup Stability AI.

Artificial intelligence that can generate images from text descriptions has been making rapid progress since early 2021. At that time, OpenAI showed impressive results with DALL-E 1 and CLIP. Throughout the year, the open-source community used CLIP for numerous alternative projects. Then in 2022, OpenAI released the impressive DALL-E 2, Google showed Imagen and Parti, Midjourney reached millions of users, and Craiyon flooded social media with AI images.

The startup Stability AI has now announced Stable Diffusion, another DALL-E 2-like system that will initially be rolled out gradually to researchers and other groups via a Discord server.

After a testing phase, Stable Diffusion will be released for free: the code and a trained model will be published as open source. There will also be a hosted version with a web interface for users to test the system.

Stability AI funds free DALL-E 2 competitor

Stable Diffusion is the result of a collaboration between researchers at Stability AI, RunwayML, LMU Munich, EleutherAI and LAION. The research collective EleutherAI is known for its open-source language models GPT-J-6B and GPT-NeoX-20B, among others, and is also conducting research on multimodal models.

The non-profit LAION (Large-scale Artificial Intelligence Open Network) provided the training data with the open-source LAION 5B dataset, which the team filtered with human feedback in an initial testing phase to create the final LAION-Aesthetics training dataset.

Patrick Esser of Runway and Robin Rombach of LMU Munich led the project, building on their work in the CompVis group at Heidelberg University. There, they created the widely used VQGAN and Latent Diffusion. The latter, combined with research from OpenAI and Google Brain, served as the basis for Stable Diffusion.

"Jazz robots." by TheRealBissy#StableDiffusion #AIArt #AIArtwork @StableDiffusion pic.twitter.com/V6hBWZUuM9

- Stable Diffusion Pics (@DiffusionPics) August 14, 2022

Stability AI, founded in 2020, is backed by mathematician and computer scientist Emad Mostaque. He worked as an analyst for various hedge funds for several years before turning to public-interest work. In 2019, he helped found Symmitree, a project that aims to lower the cost of smartphones and Internet access for disadvantaged populations.

With Stability AI and his private fortune, Mostaque aims to foster the open-source AI research community. His startup previously supported the creation of the LAION 5B dataset, for example. To train Stable Diffusion, Stability AI provided servers with 4,000 Nvidia A100 GPUs.

“Nobody has any voting rights except our 75 employees — no billionaires, big funds, governments, or anyone else with control of the company or the communities we support. We’re completely independent,” Mostaque told TechCrunch. “We plan to use our compute to accelerate open source, foundational AI.”

Stable Diffusion is an open-source milestone

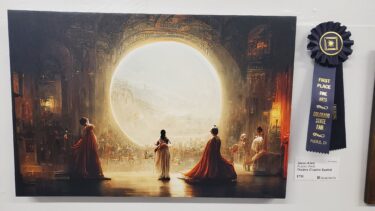

Stable Diffusion is currently in a test phase, with access being granted to new participants in waves. The results, which can be seen on Twitter, for example, show that a real DALL-E 2 competitor is emerging.

Unlike DALL-E 2, Stable Diffusion can generate images of prominent people and other subjects that OpenAI prohibits. Other systems like Midjourney or Pixelz.ai can do this as well, but they do not achieve comparable quality with the high diversity seen in Stable Diffusion, and none of them are open source.

Turns out #stablediffusion can do really awesome interpolations between text prompts if you fix the initialization noise and slerp between the prompt conditioning vectors: pic.twitter.com/lWOoETYVZ3

- Xander Steenbrugge (@xsteenbrugge) August 7, 2022
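The interpolation trick described in the tweet above can be illustrated with a short Python sketch. It is a minimal, hypothetical example, not Steenbrugge's code or any Stable Diffusion API: the initial noise is drawn once from a fixed seed and reused for every frame, while the prompt conditioning vector is moved along a spherical path (slerp) from one prompt to the other. In the real model, the conditioning is a tensor produced by the text encoder and each frame would be passed to the diffusion sampler; here plain Python lists stand in for both, and the names `slerp` and `interpolation_schedule` are illustrative assumptions.

```python
import math
import random


def slerp(t, v0, v1, dot_threshold=0.9995):
    """Spherical linear interpolation between vectors v0 and v1.

    t=0 returns v0 and t=1 returns v1. If the vectors point in almost
    the same (or opposite) direction, fall back to linear interpolation
    to avoid dividing by a near-zero sine.
    """
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the two vectors, clamped for acos
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    dot = max(-1.0, min(1.0, dot))
    if abs(dot) > dot_threshold:
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


def interpolation_schedule(cond_a, cond_b, num_frames, seed=0, noise_dim=16):
    """Hypothetical helper: pair one fixed noise vector with interpolated
    conditioning vectors, one pair per output frame."""
    if num_frames < 2:
        raise ValueError("num_frames must be at least 2")
    rng = random.Random(seed)
    # Draw the initial noise once; every frame reuses this same list
    fixed_noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    frames = []
    for i in range(num_frames):
        t = i / (num_frames - 1)
        frames.append((fixed_noise, slerp(t, cond_a, cond_b)))
    return frames


if __name__ == "__main__":
    # Toy stand-ins for two prompt embeddings (assumed, not real encoder output)
    prompt_a = [1.0, 0.0, 0.0]
    prompt_b = [0.0, 1.0, 0.0]
    for noise, cond in interpolation_schedule(prompt_a, prompt_b, 5, seed=42, noise_dim=4):
        print([round(x, 3) for x in cond])
```

Keeping the noise fixed means only the text conditioning changes between frames, so consecutive images stay visually related. Slerp rather than straight linear blending is a common choice because it follows the arc between the two vectors instead of cutting through it, which avoids shrinking the midpoint vectors.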

Stable Diffusion is expected to run on a single graphics card with 5.1 gigabytes of VRAM, bringing to local hardware an AI technology that until now has only been available through cloud services. This gives researchers and other interested people without access to GPU servers the opportunity to experiment with a modern generative AI model. The model is also supposed to run on MacBooks with Apple's M1 chip, although image generation there takes several minutes instead of seconds.

Stability AI also wants to enable companies to train their own variants of Stable Diffusion. Multimodal models are thus following the path previously taken by large language models: away from a single provider and toward the broad availability of numerous open-source alternatives.

Runway is already researching text-to-video editing enabled by Stable Diffusion.

#stablediffusion text-to-image checkpoints are now available for research purposes upon request at https://t.co/7SFUVKoUdl

Working on a more permissive release & inpainting checkpoints.

Soon™ coming to @runwayml for text-to-video-editing pic.twitter.com/7XVKydxTeD

- Patrick Esser (@pess_r) August 11, 2022

Stable Diffusion: Pandora's box and net benefits

Of course, with open access and the ability to run the model on widely available GPUs, the opportunity for abuse increases dramatically.

“A percentage of people are simply unpleasant and weird, but that’s humanity,” Mostaque said. “Indeed, it is our belief this technology will be prevalent, and the paternalistic and somewhat condescending attitude of many AI aficionados is misguided in not trusting society.”

Mostaque stresses, however, that free availability allows the community to develop countermeasures.

"We are taking significant safety measures including formulating cutting-edge tools to help mitigate potential harms across release and our own services. With hundreds of thousands developing on this model, we are confident the net benefit will be immensely positive and as billions use this tech harms will be negated."

More information is available in the Stable Diffusion GitHub repository. Many examples of Stable Diffusion's image generation capabilities can be found in the Stable Diffusion subreddit, and a signup for the Stable Diffusion beta is also open.