The ToolLLaMA language model, which specializes in API calls, can use more than 16,000 APIs and performs on par with ChatGPT.

Chinese researchers have presented ToolLLM, a framework that brings open-source models up to ChatGPT's level in using APIs, an area where these models have lagged far behind commercial offerings.

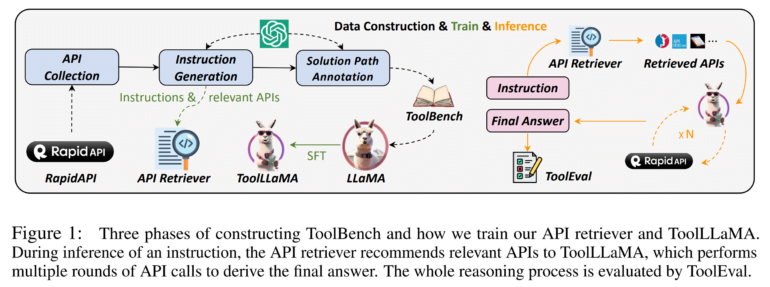

ToolLLM builds on Meta's open-source LLaMA model. The team fine-tuned LLaMA on ToolBench, a high-quality dataset generated automatically with ChatGPT, to create the specialized ToolLLaMA. ToolBench contains instructions paired with the corresponding API calls, drawn from 49 categories.

A typical request might read: "I am organizing a movie night and need some movie suggestions. Can you find me the best romantic movies from the U.S. and also a suitable venue near me?" To resolve it, the model must call the right APIs with the right arguments, for example a movie search API and a hotel search API.
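To make this structure concrete, a ToolBench-style sample can be modeled as an instruction plus the API calls that solve it. The sketch below is a hypothetical schema: the class names, field names, and the API names `movie_search` and `hotel_search` are illustrative assumptions, not ToolBench's actual data format.

```python
from dataclasses import dataclass, field


@dataclass
class APICall:
    # Hypothetical record of one API invocation: which API, with which arguments.
    api_name: str
    arguments: dict


@dataclass
class ToolInstruction:
    # Hypothetical ToolBench-style sample: a user request, the APIs it needs,
    # and the calls a model actually made while solving it.
    query: str
    relevant_apis: list
    calls: list = field(default_factory=list)

    def covers_relevant_apis(self) -> bool:
        # True if every API the request needs was called at least once.
        called = {call.api_name for call in self.calls}
        return set(self.relevant_apis) <= called


sample = ToolInstruction(
    query=("I am organizing a movie night and need some movie suggestions. "
           "Can you find me the best romantic movies from the U.S. "
           "and also a suitable venue near me?"),
    relevant_apis=["movie_search", "hotel_search"],
    calls=[
        APICall("movie_search", {"genre": "romance", "country": "US"}),
        APICall("hotel_search", {"near": "current location"}),
    ],
)
print(sample.covers_relevant_apis())  # prints True
```

A sample that only called `movie_search` would fail the check, which mirrors the requirement that both parts of the request be served.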

Decision trees help with dataset creation

To build the ToolBench dataset, the team also uses a technique called Depth-First Search-based Decision Tree (DFSDT), which lets language models such as GPT-4 explore multiple reasoning paths, and back out of failed ones, to find the best solution to an API request. According to the researchers, DFSDT shows a clear advantage on difficult tasks in their experiments, compared with the model used on its own or with other methods such as chain-of-thought reasoning.
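The core idea of DFSDT can be sketched as a depth-first search with backtracking: instead of committing to one chain of API calls, the search abandons a branch that fails and tries the next candidate. In this minimal sketch, `propose`, `is_solved`, and `is_dead_end` are hypothetical stand-ins for the language model's decisions and the API feedback; it illustrates the search pattern under those assumptions and is not the researchers' implementation.

```python
from typing import Callable, Optional, Sequence, Tuple

CallPath = Tuple[str, ...]


def dfsdt(
    propose: Callable[[CallPath], Sequence[str]],
    is_solved: Callable[[CallPath], bool],
    is_dead_end: Callable[[CallPath], bool],
    max_depth: int = 4,
    max_nodes: int = 100,
) -> Optional[CallPath]:
    # Depth-first search over sequences of API calls, with backtracking.
    stack = [()]
    expanded = 0
    while stack and expanded < max_nodes:
        path = stack.pop()
        expanded += 1
        if is_solved(path):
            return path
        if is_dead_end(path) or len(path) >= max_depth:
            continue  # abandon this branch; the next stack entry is a sibling or ancestor's sibling
        # Push children in reverse so the first proposal is explored first.
        for action in reversed(propose(path)):
            stack.append(path + (action,))
    return None


# Toy task (hypothetical API names): call both searches, then finish.
ALL_APIS = ["broken_api", "search_movies", "search_hotels", "finish"]


def propose(path):
    # Hypothetical model proposals: every API not yet used, in a fixed order.
    return [api for api in ALL_APIS if api not in path]


def is_solved(path):
    return path[-1:] == ("finish",) and {"search_movies", "search_hotels"} <= set(path)


def is_dead_end(path):
    # A failing API call, or finishing before the task is done, ends the branch.
    return "broken_api" in path or path[-1:] == ("finish",)


print(dfsdt(propose, is_solved, is_dead_end))
# prints ('search_movies', 'search_hotels', 'finish')
```

An approach that commits to its first choice would fail here, because the first candidate, `broken_api`, errors out; the depth-first search backtracks and returns the working sequence.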

To further enhance ToolLLaMA's capabilities, the researchers also trained a neural API retriever that automatically recommends relevant APIs for each instruction from a pool of more than 16,000 APIs.

Integrating the retriever with ToolLLaMA creates an automated pipeline for using complex tools without the need for manual API selection.
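One common way to build such a retriever is to embed the instruction and each API's documentation, then return the APIs whose embeddings are most similar. The sketch below shows that ranking step and the hand-off to the model. As a loud assumption, it replaces the trained neural encoder with simple bag-of-words vectors so it runs without any model; the API names, their descriptions, and the `model` callable are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    # Assumption: a bag-of-words vector stands in for a trained neural encoder.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(instruction: str, api_docs: dict, k: int = 2) -> list:
    # Rank every API by similarity between the instruction and its documentation.
    query = embed(instruction)
    ranked = sorted(api_docs, key=lambda name: cosine(query, embed(api_docs[name])), reverse=True)
    return ranked[:k]


def run_pipeline(instruction: str, api_docs: dict, model: Callable, k: int = 2):
    # Select candidate APIs automatically, then pass only those to the (hypothetical) model.
    apis = retrieve(instruction, api_docs, k)
    return model(instruction, {name: api_docs[name] for name in apis})


API_DOCS = {
    "movie_search": "search movies by genre and country, e.g. romantic movies from the U.S.",
    "hotel_search": "find a hotel or venue near a location",
    "weather_forecast": "get the weather forecast for a city",
    "stock_quote": "get the latest stock price for a ticker symbol",
}
request = "Can you find me the best romantic movies from the U.S. and also a suitable venue near me?"
print(retrieve(request, API_DOCS))  # prints ['movie_search', 'hotel_search']
```

Because the model only sees the top-k retrieved APIs, no one has to pick the tools by hand, which is the point of combining the retriever with ToolLLaMA.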

ToolLLaMA reaches ChatGPT quality for API calls

To evaluate ToolLLaMA, the team also introduces an automated evaluator called ToolEval. It measures two key metrics: pass rate (the ability to complete an instruction successfully) and win rate (how the quality of a solution compares with that of an existing method).

In the ToolEval comparison, ToolLLaMA achieves a pass rate comparable to ChatGPT's, even though it was trained on significantly fewer examples. ToolLLaMA can also handle previously unseen APIs by reading their documentation. A recently published study by Google also shows that reading such documentation can be useful.
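The two ToolEval metrics, the success rate (ToolEval's pass rate) and the win rate, reduce to simple ratios once each solution has been judged; ToolEval automates that judging step. This sketch assumes the judgments already exist and, as a hypothetical convention, counts a tie as half a win. The function names are illustrative.

```python
from typing import Literal, Sequence

Verdict = Literal["win", "tie", "loss"]


def pass_rate(passed: Sequence[bool]) -> float:
    # Share of instructions the model completed successfully.
    return sum(passed) / len(passed) if passed else 0.0


def win_rate(verdicts: Sequence[Verdict]) -> float:
    # Share of pairwise comparisons won against a reference solution.
    # Assumption: a tie counts as half a win.
    points = {"win": 1.0, "tie": 0.5, "loss": 0.0}
    return sum(points[v] for v in verdicts) / len(verdicts) if verdicts else 0.0


print(pass_rate([True, True, False, True]))     # prints 0.75
print(win_rate(["win", "tie", "loss", "win"]))  # prints 0.625
```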

More information and the code are available on GitHub.