Language models that read documentation successfully learn to use tools - and in some cases even invent new methods, according to a new research paper.

Large language models such as ChatGPT can make rudimentary use of tools or APIs. Traditionally, language models are trained with a few examples of the tools in use, known as demonstrations. For more complex tools, however, such demonstrations are rare or nonexistent. A team of researchers from the University of Washington, National Taiwan University, and Google has a different idea: Just read the manual - often abbreviated RTFM on the web.

Documentation, such as an API reference, describes exactly what a tool does. It is more general than a demonstration of how to use the tool for a particular task, and it is readily available for most software tools via README files or API references. The team therefore assumed that documentation would not only scale better than demonstrations but also produce better results, because models learn about tools in a more general and flexible way.
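
To make the contrast concrete, here is a minimal sketch of how the two kinds of input might be assembled as text for a model. It is an illustration only, not the paper's setup: the `Tool` class, both `build_*` functions, the prompt wording, and the `image_crop` tool are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    # Hypothetical container: a tool name plus its documentation text,
    # e.g. copied from a README or an API reference.
    name: str
    documentation: str


def build_doc_prompt(task: str, tools: list[Tool]) -> str:
    """Documentation-only prompt: describes the tools, shows no solved examples."""
    doc_sections = [f"### {t.name}\n{t.documentation}" for t in tools]
    return "\n\n".join(
        ["You can use the following tools.", *doc_sections, f"Task: {task}", "Plan:"]
    )


def build_demo_prompt(task: str, demos: list[tuple[str, str]]) -> str:
    """Demonstration-only prompt: shows solved (task, plan) pairs, no documentation."""
    demo_sections = [f"Task: {q}\nPlan: {a}" for q, a in demos]
    return "\n\n".join([*demo_sections, f"Task: {task}", "Plan:"])


# Hypothetical tool and task, used only to show the shape of each prompt.
crop = Tool(
    "image_crop",
    "image_crop(path, box) -> path. Crops the image to box = (left, top, right, bottom).",
)
doc_prompt = build_doc_prompt("Crop photo.png to its top-left quarter.", [crop])
demo_prompt = build_demo_prompt(
    "Crop photo.png to its top-left quarter.",
    [("Crop cat.png to the region (0, 0, 50, 50).", "image_crop('cat.png', (0, 0, 50, 50))")],
)
```

The documentation prompt stays the same for any task that involves `image_crop`, while the demonstration prompt needs a hand-written solved example for each kind of task. That difference is the scaling argument the researchers make.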

Training with documentation enables zero-shot tool use

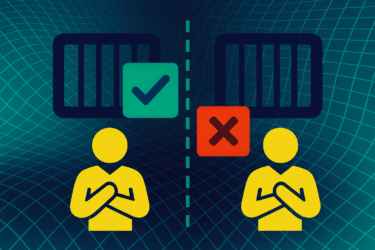

The team trained several models on six different tasks using documentation, demonstrations, or both, and compared their performance. Models given documentation alone achieved zero-shot performance equal to or better than that of models that learned only from demonstrations. After scaling to a dataset of 200 tools, the documentation-based approach significantly outperformed the demonstration-based one.

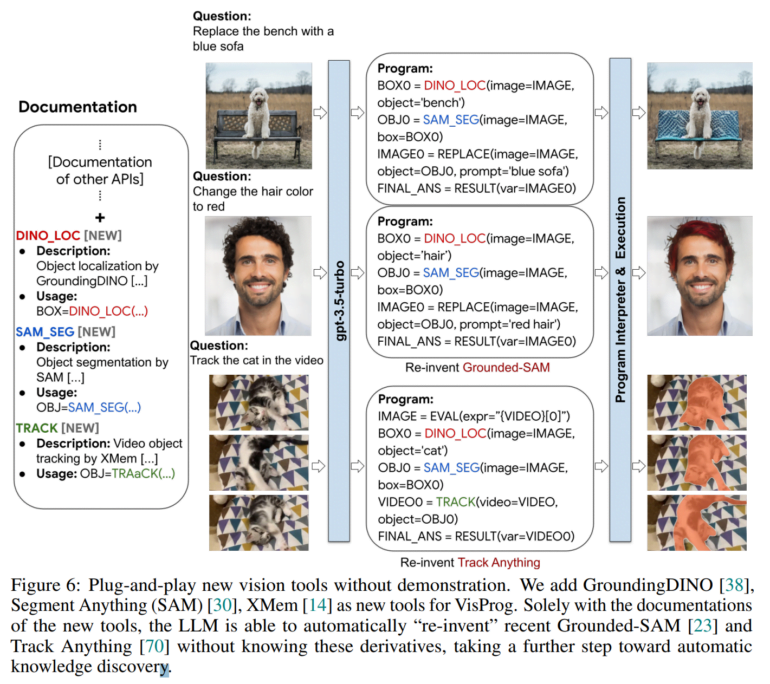

In image processing, the model performed complex image processing and video tracking functions without any demonstrations, learning only from the documentation of new, state-of-the-art modules. The team highlights as particularly noteworthy that the model reproduced recently released techniques such as Grounded-SAM for image processing and Track Anything for video tracking, which demonstrates the method's potential for automatic knowledge discovery.

"Overall, we shed light on a new perspective of tool usage with LLMs by focusing on their internal planning and reasoning capabilities with docs, rather than explicitly guiding their behaviors with demos," the paper states.