OpenAI co-founder says AI is reaching "peak data" as it hits the limits of the internet

During his presentation at the NeurIPS conference, AI pioneer and OpenAI co-founder Ilya Sutskever warned that AI development is approaching the limits of available training data.

While computing power keeps growing through better hardware, algorithms, and larger data centers, Sutskever explained that training data isn't keeping pace. He introduced the concept of "peak data," noting that "we have but one internet" and that won't change.

Sutskever compared training data to fossil fuels, noting that both are finite resources. Unlike oil, data files can be copied, so Sutskever's concern likely lies with a different limitation: the actual knowledge and insights that AI systems can extract from this data, which cannot be infinitely duplicated or expanded.

From early vision to current constraints

Looking back at modern AI's beginnings, Sutskever recalled his 2014 "deep learning hypothesis," which proposed that a neural network with ten layers could perform any task a human can complete in a fraction of a second. He chose ten layers simply because that was what could practically be trained at the time.

The hypothesis was based on similarities between artificial and biological neurons. Sutskever argued that if artificial neurons were even slightly similar to biological ones, large neural networks should be able to perform the same tasks as the human brain. One key difference remained: while the brain can reconfigure itself, AI systems require as much training data as they have parameters.

This idea sparked what Sutskever terms the era of pre-training, leading to models like GPT-2 and GPT-3. He credited his former colleagues Alec Radford and Anthropic co-founder Dario Amodei for driving this progress. Now, however, Sutskever believes this approach is reaching its natural limits.

New scaling horizons: agents, synthetic data, and test-time compute

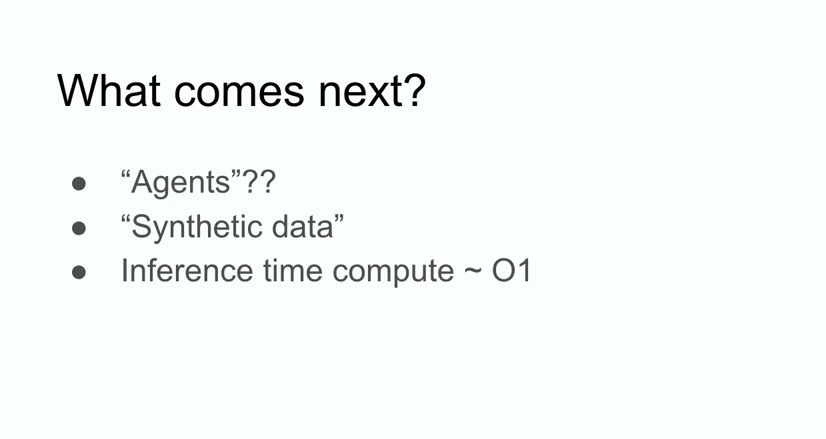

Sutskever outlined several potential paths beyond pre-training that could help overcome training data limitations: AI agents, synthetic data (which he described as a "big challenge"), and increased computing power during inference. The AI researcher recently described this period as a new "age of discovery" for the field.

According to Sutskever, tomorrow's AI systems will be fundamentally different from today's models. He explained that current systems are only minimally "agentic," but predicted this will change as future AI systems develop genuine abilities to think and reason independently.

This evolution, however, comes with challenges. Sutskever warned that increased reasoning ability leads to less predictability, pointing out that chess AIs already surprise even grandmasters with their moves.

On a positive note, he suggested this shift toward real reasoning could help reduce hallucinations, as future AI systems might use logical thinking and self-reflection to verify and correct their own statements—capabilities that current systems, which rely mainly on pattern recognition and intuition, don't have.

Pre-training stagnation becomes industry consensus as new ventures emerge

This shift in thinking matches what's happening in the AI industry. OpenAI, Google, and Anthropic are reportedly hitting the ceiling with traditional pre-training methods in their newest language models.

Google's Gemini AI lead, Oriol Vinyals, recently explained that making models bigger isn't enough anymore: each improvement now requires exponentially more effort and resources.

In response, companies like OpenAI are exploring alternatives such as "test-time compute," which gives AI models more time and computing power to process information rather than just increasing their pre-training capacity.

Following his departure from OpenAI in May 2024, Sutskever founded Safe Superintelligence Inc (SSI), a startup that has raised more than $1 billion at a $5 billion valuation. The company, which operates from offices in Palo Alto and Tel Aviv, focuses on developing safe superintelligent systems.

SSI plans to maintain a small team of top engineers and researchers, with most of its funding allocated to computing power and hiring. The company says it's particularly interested in recruiting employees who aren't swayed by the AI industry's hype.
