ChatGPT Plus subscribers can now send up to 50 messages to GPT-4 every three hours. When the model was introduced in March, the limit was 25 messages every two hours, for compute and cost reasons.
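
The two limits use different time windows, so they are not directly comparable. The quick calculation below is a simplification: it spreads each cap evenly over its window, and it does not model how OpenAI actually enforces the limit.

```python
from fractions import Fraction

# GPT-4 caps for ChatGPT Plus as reported above: (messages, window in hours)
MARCH_CAP = (25, 2)
NEW_CAP = (50, 3)


def hourly_rate(messages: int, hours: int) -> Fraction:
    """Average messages per hour, assuming the cap is spread evenly over its window."""
    return Fraction(messages, hours)


old_rate = hourly_rate(*MARCH_CAP)  # 25/2 = 12.5 messages per hour
new_rate = hourly_rate(*NEW_CAP)    # 50/3, about 16.7 messages per hour
increase = new_rate / old_rate - 1  # (50/3) / (25/2) - 1 = 4/3 - 1 = 1/3

print(f"{float(old_rate):.1f} -> {float(new_rate):.1f} messages per hour (+{float(increase):.0%})")
# prints "12.5 -> 16.7 messages per hour (+33%)"
```

The message count doubles, but because the window also grows from two to three hours, the average hourly allowance rises by about a third rather than doubling.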

The higher limit may be related to the recently released GPT-4 version "0613", which could be more efficient to run, possibly at the expense of quality (see below).

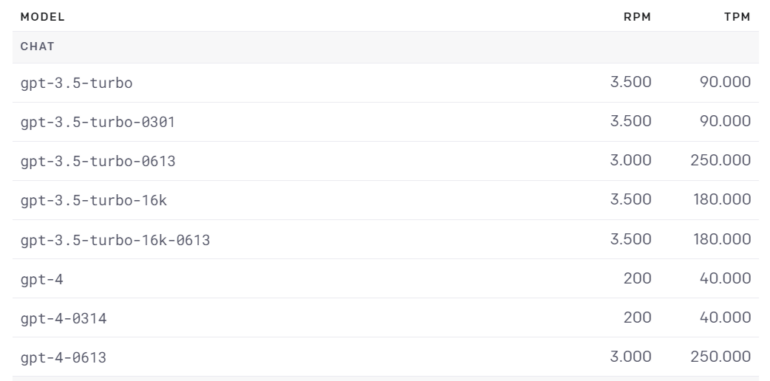

Compared with the March model "0314", the new model generates text noticeably faster. The same happened with the switch from GPT-3 to GPT-3.5, though that change came without any loss of quality. The new GPT-4 model "0613" can also handle significantly more requests per minute (RPM) and tokens per minute (TPM).

GPT-4 offers more volume and speed, but at the expense of quality?

In our tests via the API, the new GPT-4 version follows the prompt templates we built for the March release less reliably and in less detail, and it makes more factual errors.

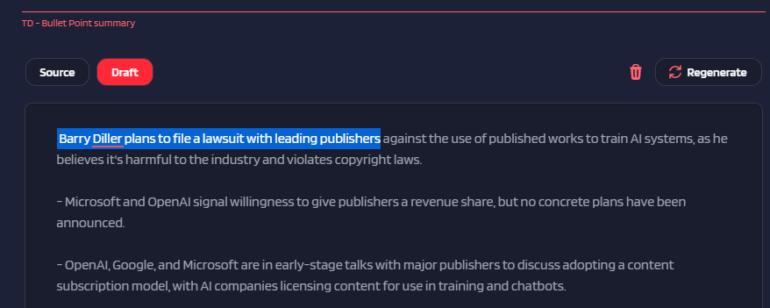

For example, we use GPT-4 to generate the summaries that appear below some of our articles. In the summary of this news item about Barry Diller's lawsuit plans, the old GPT-4 model correctly captures that Diller wants to team up with publishers to sue AI companies.

The new version of GPT-4 instead claims that Diller wants to sue the publishers. The error reproduced across multiple regenerations with the same prompt: the old version got it right every time, and the new one got it wrong every time.

Of course, this is only an anecdotal observation. However, a more systematic study also suggests that the quality of GPT-3.5 and GPT-4 in ChatGPT has declined since March. Still, this has not been conclusively proven, and the possible causes are unknown.

For several weeks, frequent users in particular have been complaining on social media and Reddit that the model's quality is declining. OpenAI has consistently maintained that there has been no degradation and that any changes have been improvements, and that some of the examples cited are likely bugs. Now that the study has been published, OpenAI says it will look into the examples and a possible decline in quality.

LLMs must be reliable for everyday work

Whether or not the criticism of declining performance proves justified, OpenAI would be well advised to keep its models reliable across version changes and to communicate those changes more clearly and transparently. For business customers who resell services built on these models in particular, quality fluctuations in day-to-day use can jeopardize their business model.

The problem may not even be a degradation of the model itself, but, for example, a change in how it responds to existing prompts. Because the inner workings of these models are still poorly understood, OpenAI faces a difficult task here, one that will only grow harder as its customer base expands.