OpenAI launches red teaming network to root out AI risks

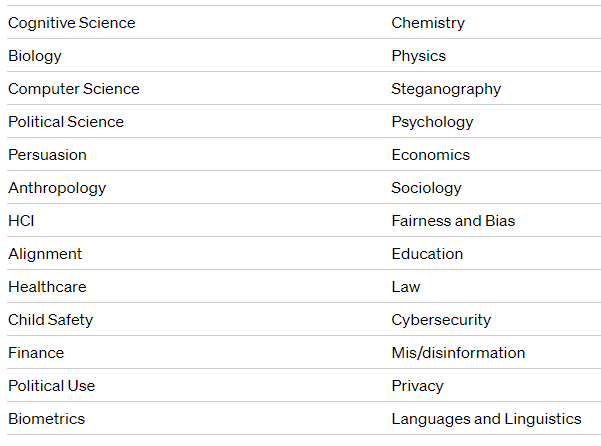

OpenAI has announced an open call for experts across a range of domains to join the OpenAI Red Teaming Network, aimed at improving the safety of its AI models.

Red teaming is part of OpenAI's iterative development process, which includes internal and external adversarial testing. With this formal launch, OpenAI aims to deepen and broaden its collaboration with external experts.

Experts in the network will assist with risk assessment based on their area of expertise throughout the model and product development lifecycle. The goal is to provide diverse and continuous input to make red teaming an iterative process. OpenAI's call to action is open to experts from around the world, regardless of prior experience with AI systems or language models.

Network members are compensated for their contributions to a red teaming project. The experts will also have the opportunity to interact with each other on general red teaming practices and lessons learned. Membership does not in itself bar them from publishing their research or pursuing other opportunities, but they will have to abide by non-disclosure agreements (NDAs).

Applications are open until December 1, 2023.

Microsoft does red teaming too

If you want to learn more about red teaming, check out Microsoft's description of its process. The company has detailed its strategy for red teaming AI models like GPT-4, using independent teams to test for vulnerabilities in the system before deployment.

The tech giant uses red teaming at the model and system level, including for applications such as Bing Chat. Red teaming for AI is particularly challenging due to the unpredictable nature and rapid evolution of AI systems. Multiple layers of defense are needed, according to Microsoft.
