OpenAI releases new language model InstructGPT-3.5

Key Points

- OpenAI introduces "gpt-3.5-turbo-instruct", a new instruction-following language model that is as efficient as the chat-optimized GPT-3.5 Turbo.

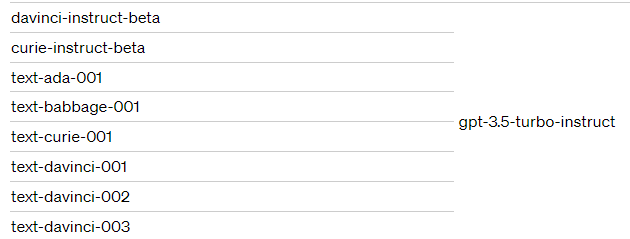

- The new model replaces several previous Instruct models and language models that will be retired in January 2024. The model's training data is current as of September 2021.

- Instruct models are refined with human feedback, which makes them better at understanding and responding to human queries as intended. The new gpt-3.5-turbo-instruct model is optimized for directly answering questions or completing text, not for chat applications.

OpenAI is introducing "gpt-3.5-turbo-instruct" as a replacement for the existing Instruct models, as well as text-ada-001, text-babbage-001, text-curie-001, and the three text-davinci models, all of which will be retired on January 4, 2024.

The cost and performance of "gpt-3.5-turbo-instruct" are the same as those of the other GPT-3.5 models with 4K context windows. The cutoff date for its training data is September 2021.
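For developers, a 4K context window means the prompt and the generated completion share a single token budget. The minimal sketch below assumes "4K" means 4,096 tokens and that the prompt's token count is already known; the helper `max_completion_tokens` is hypothetical and not part of OpenAI's SDK.

```python
# Hypothetical helper, not part of the OpenAI SDK. Assumes "4K" means a
# 4,096-token window shared by the prompt and the completion.
CONTEXT_WINDOW = 4096


def max_completion_tokens(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Return the largest completion length that still fits in the window."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    remaining = context_window - prompt_tokens
    if remaining <= 0:
        raise ValueError(
            f"a {prompt_tokens}-token prompt leaves no room in a {context_window}-token window"
        )
    return remaining


# A 1,000-token prompt leaves 3,096 tokens for the model's answer.
print(max_completion_tokens(1000))  # 3096
```

Raising an error instead of returning zero makes an oversized prompt fail loudly before any request is sent.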

OpenAI says that gpt-3.5-turbo-instruct was trained "similarly" to previous Instruct models. The company does not provide details or benchmarks for the new Instruct model, instead referring to the January 2022 announcement of InstructGPT, which in turn was the basis for GPT-3.5.

OpenAI's general position is that GPT-4 follows complex instructions better and produces higher-quality output than GPT-3.5, while GPT-3.5 is significantly faster and cheaper.

Unlike GPT-3.5 Turbo, gpt-3.5-turbo-instruct is not a chat model. Instead of holding conversations, it is optimized for directly answering questions or completing text. OpenAI claims that it is as fast as GPT-3.5 Turbo.

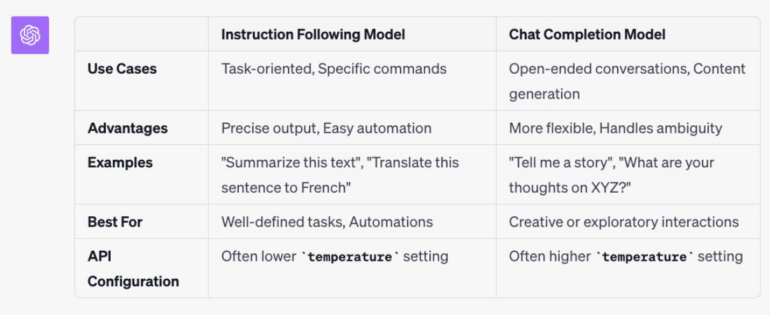

The graphic above shows how OpenAI distinguishes between Instruct and Chat models. The difference can affect how prompts need to be written.
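In API terms, the distinction shows up in the shape of the request. Instruct models such as gpt-3.5-turbo-instruct are called through the Completions endpoint (`/v1/completions`) with a single `prompt` string, while chat models use the Chat Completions endpoint (`/v1/chat/completions`) with a list of role-tagged `messages`. The sketch below only builds the two JSON request bodies and sends nothing; the helper names, the example question and the system message are illustrative assumptions.

```python
import json


def instruct_request(prompt: str, max_tokens: int = 256) -> dict:
    # Instruct/completion models take one free-form prompt string.
    return {"model": "gpt-3.5-turbo-instruct", "prompt": prompt, "max_tokens": max_tokens}


def chat_request(question: str, system: str = "You are a helpful assistant.") -> dict:
    # Chat models take a list of role-tagged messages instead of a prompt string.
    return {
        "model": "gpt-3.5-turbo",
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ],
    }


question = "Translate 'good morning' into German."
print(json.dumps(instruct_request(question), indent=2))
print(json.dumps(chat_request(question), indent=2))
```

With the instruct model, any framing or examples have to be written into the prompt text itself; with the chat model, the same context is split across system and user messages.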

OpenAI's Logan Kilpatrick, who is responsible for developer relations, calls the new Instruct model a stopgap to ease the transition to GPT-3.5 Turbo. It is not a "long-term solution," he said.

Customers using fine-tuned models will need to fine-tune again on the new model versions. Fine-tuning is already available for GPT-3.5 Turbo, and fine-tuning for GPT-4 is scheduled for release later this year.
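For teams re-tuning, one practical step may be converting older prompt/completion training files into the chat-style format used for GPT-3.5 Turbo fine-tuning, where each JSONL line holds a `messages` list. The sketch below assumes the legacy file has one `{"prompt": ..., "completion": ...}` object per line; the function names and the optional system message are hypothetical.

```python
import json
from typing import Iterable, Iterator, Optional


def to_chat_example(record: dict, system: Optional[str] = None) -> dict:
    """Turn one legacy {"prompt", "completion"} record into a chat-format example."""
    messages = []
    if system:
        # Optional system message applied to every example (an assumption).
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": record["prompt"]})
    messages.append({"role": "assistant", "content": record["completion"]})
    return {"messages": messages}


def convert_jsonl(lines: Iterable[str], system: Optional[str] = None) -> Iterator[str]:
    """Yield one chat-format JSON line per non-empty legacy JSON line."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        yield json.dumps(to_chat_example(json.loads(line), system))


legacy = ['{"prompt": "Capital of France?", "completion": "Paris"}']
for out in convert_jsonl(legacy, system="Answer briefly."):
    print(out)
```

Legacy datasets often contain separators or leading spaces that were recommended for the older models; whether to strip them before converting is left to the reader in this sketch.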

Instruct models are the basis for ChatGPT's breakthrough

Instruct models are large language models that, after pre-training on large amounts of data, are refined with reinforcement learning from human feedback (RLHF). In this process, humans evaluate the model's outputs for user-provided prompts and indicate or write the desired response; this feedback is then used to train the model further.
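In the InstructGPT paper, this feedback step includes training a reward model on human rankings of model outputs; the reward model is then used to fine-tune the language model with reinforcement learning (PPO). The toy sketch below illustrates only the reward-model step, using the pairwise loss -log σ(r_chosen − r_rejected). A linear model over two hand-made features stands in for the neural network, and both the features and the comparison data are invented for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def reward(w: Vector, x: Vector) -> float:
    # Linear reward model: a toy stand-in for a neural reward model.
    return sum(wi * xi for wi, xi in zip(w, x))


def train_reward_model(
    comparisons: List[Tuple[Vector, Vector]], dim: int, lr: float = 0.5, epochs: int = 200
) -> List[float]:
    """Fit weights so human-preferred outputs score higher than rejected ones.

    Each comparison is (chosen, rejected). The loss per pair is
    -log(sigmoid(r(chosen) - r(rejected))), minimized by plain gradient descent.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in comparisons:
            diff = [c - r for c, r in zip(chosen, rejected)]
            margin = reward(w, diff)  # equals r(chosen) - r(rejected) for a linear model
            # d(loss)/dw = -(1 - sigmoid(margin)) * diff, so step in the opposite direction.
            scale = 1.0 - sigmoid(margin)
            w = [wi + lr * scale * d for wi, d in zip(w, diff)]
    return w


# Invented features per output: [helpfulness, toxicity].
comparisons = [
    ([0.9, 0.1], [0.4, 0.2]),
    ([0.7, 0.0], [0.6, 0.8]),
    ([0.8, 0.3], [0.2, 0.3]),
]
w = train_reward_model(comparisons, dim=2)
print(w)  # helpfulness weight should be positive, toxicity weight negative
```

The pairwise form means labelers only have to say which of two answers is better rather than assign absolute scores.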

As a result, Instruct models are better at understanding and responding to human queries as intended, make fewer mistakes, and produce less harmful content. In OpenAI's tests, people preferred the output of an InstructGPT model with 1.3B parameters over that of a GPT-3 model with 175B parameters, even though the smaller model has more than 100 times fewer parameters.
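The "100 times" figure is a round number; the exact parameter ratio between the two models works out as follows:

```latex
\frac{175 \times 10^{9}}{1.3 \times 10^{9}} = \frac{175}{1.3} \approx 134.6
```

In other words, the preferred InstructGPT model has roughly 135 times fewer parameters than the 175B model.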
