OpenAI unveils o3, its most advanced reasoning model yet

OpenAI has announced o3, a new AI model that achieves breakthrough performance in complex reasoning tasks. A cost-effective mini version is set to launch in late January 2025, followed by the full version.

OpenAI's new o3 model represents a significant leap forward in AI capabilities, particularly in how it approaches problem-solving. Like its recently released predecessor o1, o3 spends more time and computing power working through a problem, using a multi-step reasoning process to reach a solution.

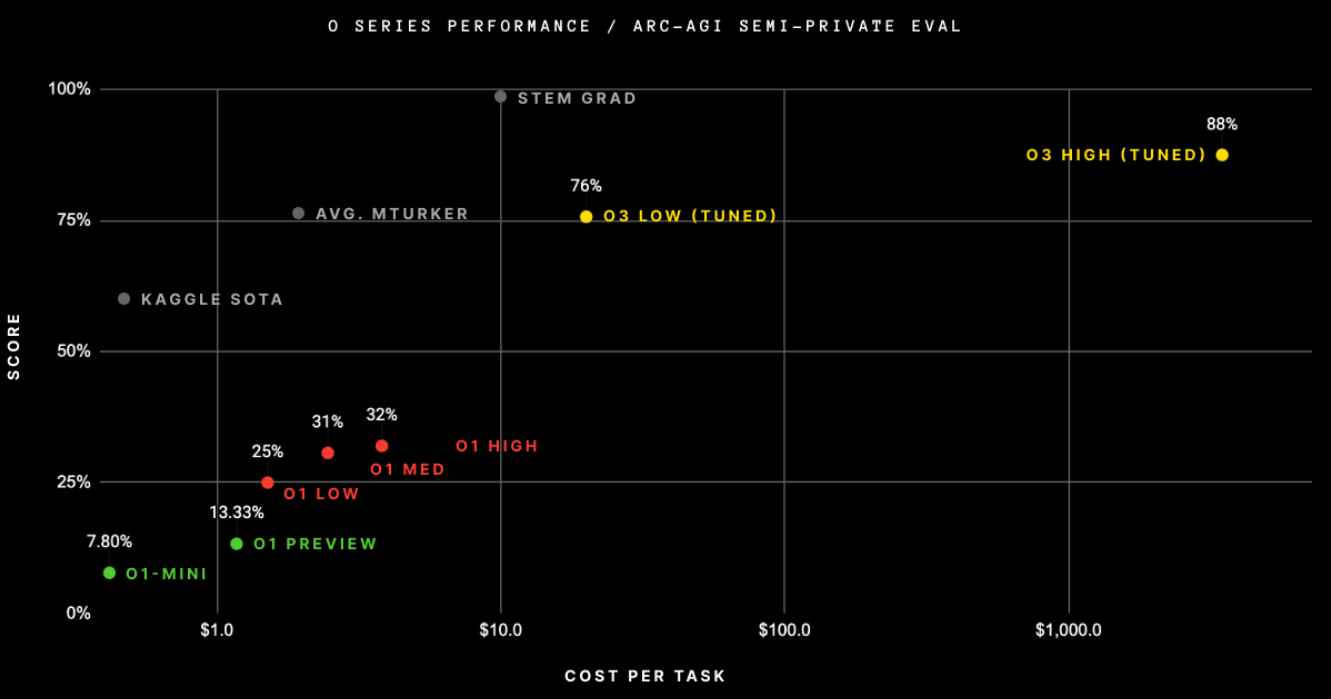

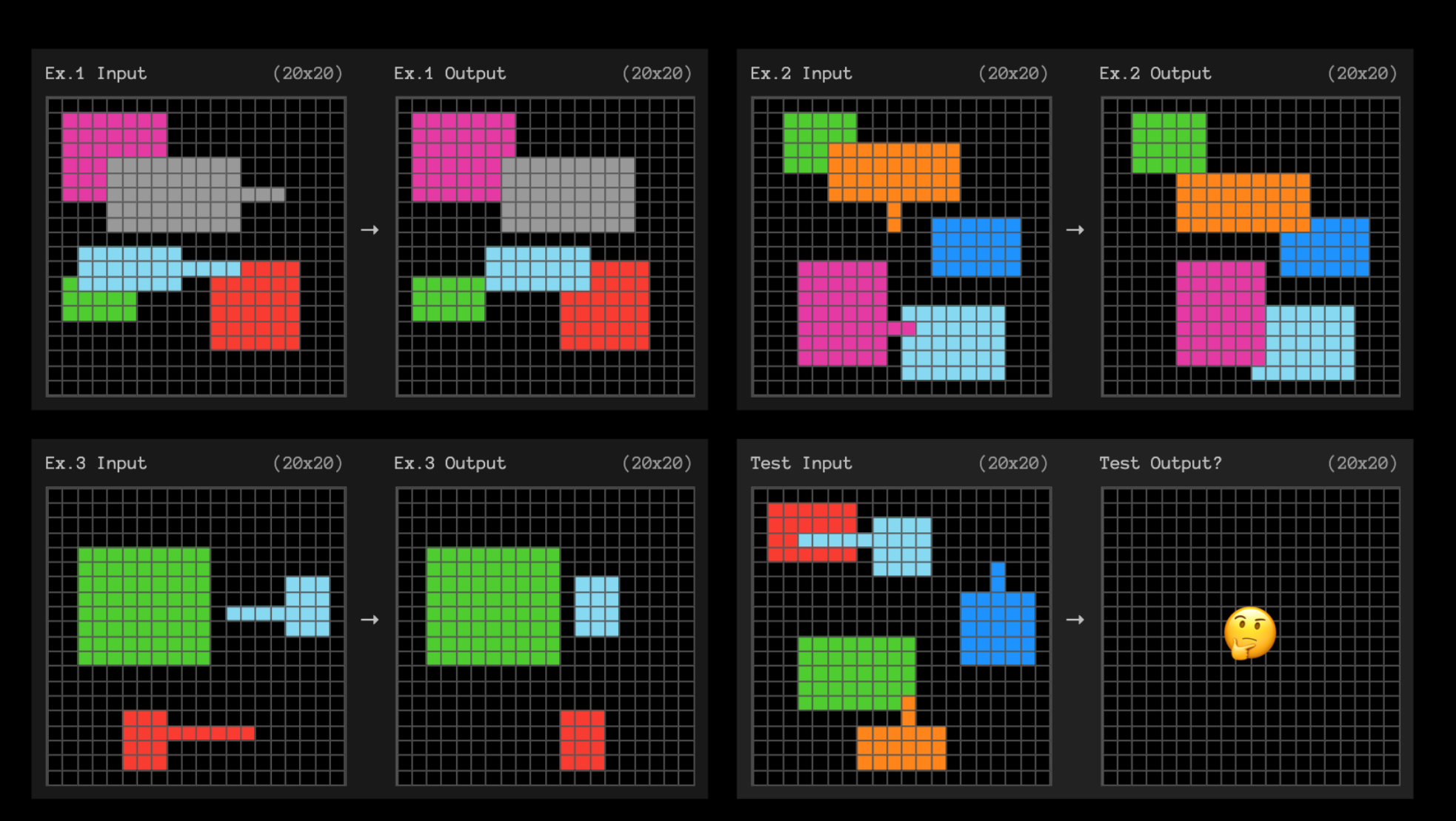

o3 sets records across key benchmarks. With a standard compute budget, o3 scores 75.7 percent on ARC-AGI, the benchmark behind the ARC Prize, rising to 87.5 percent with increased resources. ARC-AGI is considered an indicator of progress toward artificial general intelligence (AGI).

On Epoch AI's FrontierMath benchmark, introduced in November 2024 as one of the most challenging AI math tests available, o3 achieved a 25.2 percent success rate - far ahead of previous models, which couldn't break 2 percent. The benchmark's developers called these results a "significant leap" and said they're already preparing "tougher, next-generation benchmarks" to test upcoming AI models.

The system shows similar gains in other areas. On the software engineering benchmark SWE-bench Verified, accuracy improved by more than 20 percentage points compared to o1, reaching 71.7 percent. In competitive programming, o3 achieved a Codeforces rating of 2727, surpassing the 2665 rating of OpenAI's Chief Scientist.

On the PhD-level science questions of the GPQA Diamond benchmark, o3 scored 87.7 percent, well above the roughly 70 percent that PhD experts average in their own fields, according to OpenAI.

The cost of reasoning

François Chollet, who developed the ARC benchmark, describes o3's performance as "a surprising and important step-function increase in AI capabilities."

What makes o3 different, Chollet explains, is how it approaches problems. Unlike traditional language models, which mainly retrieve stored patterns, o3 creates new programs in real time to solve unfamiliar challenges.

According to Chollet, the system appears to work similarly to Google DeepMind's AlphaZero chess program, methodically searching through possible solutions until it finds the right approach. This thorough process explains why o3 needs so much computing power - it processes up to 33 million tokens for a single task.

This intensive token processing comes with significant costs compared to current AI systems. The high-efficiency version runs about $20 per task, which adds up quickly - $2,012 for 100 test tasks, or $6,677 for the full set of 400 public tasks (averaging about $17 per task).

The low-efficiency version demands even more resources - 172 times more computing power than the high-efficiency version. While OpenAI hasn't revealed the exact costs, testing shows this version processes between 33 and 111 million tokens and requires about 1.3 minutes of computing time per task.
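The per-task figures quoted above follow directly from the reported totals. The short Python sketch below reproduces that arithmetic. The function names are illustrative, and the low-efficiency extrapolation is purely hypothetical: it assumes cost scales linearly with computing power, which is not a figure OpenAI or ARC Prize has confirmed.

```python
# Totals quoted in the article for the high-efficiency configuration.
HIGH_EFFICIENCY_RUNS = {
    "100 test tasks": {"tasks": 100, "total_usd": 2012},
    "400 public tasks": {"tasks": 400, "total_usd": 6677},
}

# Compute ratio between the low- and high-efficiency versions, per the article.
COMPUTE_MULTIPLIER = 172


def cost_per_task(total_usd: float, tasks: int) -> float:
    """Average cost of a single task in US dollars."""
    if tasks <= 0:
        raise ValueError("tasks must be positive")
    return total_usd / tasks


def extrapolated_cost(per_task_usd: float, multiplier: int = COMPUTE_MULTIPLIER) -> float:
    """Hypothetical estimate that ASSUMES cost grows linearly with compute."""
    return per_task_usd * multiplier


if __name__ == "__main__":
    for name, run in HIGH_EFFICIENCY_RUNS.items():
        per_task = cost_per_task(run["total_usd"], run["tasks"])
        print(f"{name}: ${per_task:.2f} per task")
        # Illustrative only: no official low-efficiency price has been published.
        print(f"  linear extrapolation at {COMPUTE_MULTIPLIER}x compute: "
              f"${extrapolated_cost(per_task):,.2f} per task")
```

The two averages round to the "about $20" and "about $17" cited in the text. The extrapolated value should be read as an order-of-magnitude illustration, not as a reported price.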

Not quite AGI

Despite these impressive results, Chollet emphasizes that o3 isn't yet artificial general intelligence. The system still struggles with some basic tasks and shows fundamental differences from human intelligence.

True AGI, he explains, will only arrive when we can no longer create tasks that humans find easy but AI finds difficult.

With o3 pushing the limits of the current ARC benchmark, Chollet has announced a more challenging successor, ARC-AGI-2, for 2025. Early tests suggest o3 will only achieve around 30 percent on ARC-AGI-2, while humans without special training can solve about 95 percent of its tasks.

o3-mini version coming soon

OpenAI plans to release the more affordable o3-mini in late January 2025, followed by the full version. The mini version will offer three speed settings (low, medium, and high). According to OpenAI, it outperforms o1 even at the medium setting while being both faster and more cost-effective.

During a live demo, OpenAI showed o3-mini generating and executing code independently, including a Python script that built a user interface for evaluating the model itself on a dataset. The mini version also supports API features such as function calling and structured outputs, matching or exceeding o1's capabilities in these areas.
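To make these API features concrete, the sketch below assembles a request body that combines a speed setting, a function definition, and a structured output schema. It assumes o3-mini will accept the same field names as OpenAI's existing Chat Completions API (`reasoning_effort`, `tools`, `response_format`). The model identifier `o3-mini`, the `lookup_benchmark_score` function, and both schemas are hypothetical placeholders. The code only builds the payload and sends nothing.

```python
import json

ALLOWED_EFFORTS = {"low", "medium", "high"}  # the three settings named in the article


def build_request(question: str, effort: str = "medium") -> dict:
    """Build a Chat Completions-style request body (assumed shape, not sent anywhere)."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {sorted(ALLOWED_EFFORTS)}")
    return {
        "model": "o3-mini",  # assumed identifier
        "reasoning_effort": effort,  # assumed to map to the low/medium/high settings
        "messages": [{"role": "user", "content": question}],
        # Function calling: describe a tool the model may ask the caller to run.
        "tools": [{
            "type": "function",
            "function": {
                "name": "lookup_benchmark_score",  # hypothetical tool
                "description": "Return a model's score on a named benchmark.",
                "parameters": {
                    "type": "object",
                    "properties": {"benchmark": {"type": "string"}},
                    "required": ["benchmark"],
                    "additionalProperties": False,
                },
            },
        }],
        # Structured outputs: constrain the final answer to a JSON schema.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "benchmark_answer",  # hypothetical schema name
                "strict": True,
                "schema": {
                    "type": "object",
                    "properties": {
                        "benchmark": {"type": "string"},
                        "score_percent": {"type": "number"},
                    },
                    "required": ["benchmark", "score_percent"],
                    "additionalProperties": False,
                },
            },
        },
    }


if __name__ == "__main__":
    payload = build_request("What did o3 score on ARC-AGI?", effort="high")
    print(json.dumps(payload, indent=2))
```

Keeping the payload a plain dictionary makes it easy to inspect or adjust once OpenAI publishes the final parameters for o3-mini.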

Before release, OpenAI is launching a safety testing program, with applications open until January 10, 2025. The company is also introducing "Deliberative Alignment," a new safety approach that uses the model's reasoning abilities to establish better safety boundaries. As for the name, OpenAI skipped "o2" and went straight to "o3" out of consideration for the telecommunications company O2.
