OpenAI's DALL-E 2 generates convincing photos and drawings, but it can't write. Or can it? A study shows that the text DALL-E 2 renders in its images is not a random jumble of letters but can carry meaning.

In April, OpenAI released the first details of DALL-E 2, an AI system that generates impressive images. The results far surpassed what earlier AI systems had been able to produce.

As with the GPT-3 language model, DALL-E 2 launched in a closed beta. Since then, approved users have generated more than three million images with it. OpenAI now plans to admit 1,000 new users every week.

DALL-E 2 has problems with text

OpenAI's image system generates scenes, some of them photorealistic, such as fake vacation photos, teddy bears in the style of Picasso, or an antique statue of a man tripping over a cat. There seem to be few limits to its creativity.

But DALL-E 2 also has weaknesses: it arranges colored cubes in an image contrary to the instructions, mixes up concepts such as "supermarket" and "Renaissance", or interprets "operated" in the medical sense rather than, say, as operating a machine.

Didn't do the captions - it's not great at text #dalle pic.twitter.com/4YvxdAqZPZ

- Benjamin Hilton (@benjamin_hilton) April 28, 2022

DALL-E 2 also has trouble rendering text in images, an area where Google's new image AI Imagen has the edge over OpenAI's product. One example from OpenAI's own research: instead of writing "Deep Learning" on a sign in a generated image, the AI writes "Deinp Lerpt" or "Diep Deep."

In numerous other tests, DALL-E 2 produced only made-up words. The likely cause is OpenAI's static multimodal CLIP model, which is part of the DALL-E 2 architecture. Google's Imagen, by contrast, relies on a large language model with a better grasp of language.

Does DALL-E 2 have a hidden vocabulary?

Now, researchers at the University of Texas have shown that DALL-E 2's strange strings are probably not as random as previously thought. In a series of experiments, they found evidence that DALL-E 2 has developed a hidden vocabulary that surfaces in images containing text. These seemingly made-up words can in turn be used as prompts to steer the system.

For example, the input "Two farmers talking about vegetables, with subtitles" generates an image with seemingly meaningless text.

A known limitation of DALLE-2 is that it struggles with text. For example, the prompt: "Two farmers talking about vegetables, with subtitles" gives an image that appears to have gibberish text on it.

However, the text is not as random as it initially appears... (2/n) pic.twitter.com/B3e5qVsTKu

- Giannis Daras (@giannis_daras) May 31, 2022

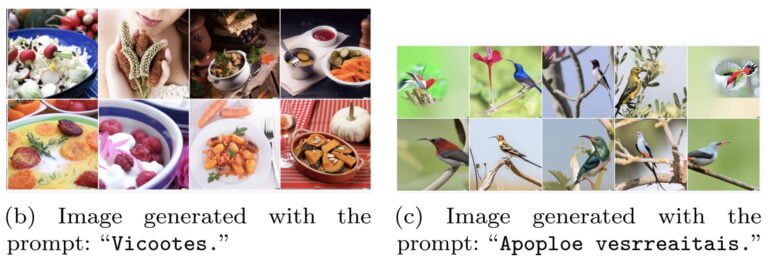

But when the text "Vicootes" is used as input to DALL-E 2, the system generates images of vegetables. The text "Apoploe vesrreaitars", on the other hand, generates images of birds.

"It seems that the farmers are talking about birds, messing with their vegetables," co-author Giannis Daras said on Twitter.

Using the same method, the researchers found further examples of DALL-E-specific vocabulary: "Wa ch zod ahaakes rea" produces images of seafood, while "Apoploe vesrreaitais" produces not only birds but, depending on the style, also insects, so the term seems to cover flying things in general.

"Contarra ccetnxniams luryca tanniounons" mostly produces insects. Combined, the prompt "Apoploe vesrreaitais eating Contarra ccetnxniams luryca tanniounons" therefore produces images of birds eating insects.

However, such robust examples are hard to find, the authors write. Often, the same word generates different images that, at first glance, have nothing in common.

Nevertheless, the discovery of a DALL-E vocabulary raises new and interesting challenges for the model's security and interpretability. Currently, language models screen text prompts for DALL-E 2 and flag those that violate OpenAI's guidelines. Because prompts written in the DALL-E vocabulary look like nonsense, they could be used to bypass these filters, the authors say.

As a next step, the researchers plan to investigate DALL-E 2's hidden vocabulary further.