OpenAI's DALL-E 2 produces impressive images, but the AI system is not perfect. First experiments show its current limitations.

A few weeks ago, OpenAI demonstrated the impressive capabilities of DALL-E 2. The multimodal AI model sets a new standard for AI-generated images: From sometimes complex text descriptions, DALL-E 2 generates images in a variety of styles, from oil paintings to photorealism.

OpenAI chief Sam Altman sees DALL-E 2 as an early example of AI's impact on the labor market. A decade ago, he says, physical and cognitive labor were singled out as the first victims of AI systems - while creative labor was targeted last. Now it looks like the order is reversing, Altman says.

OpenAI's DALL-E 2 makes mistakes

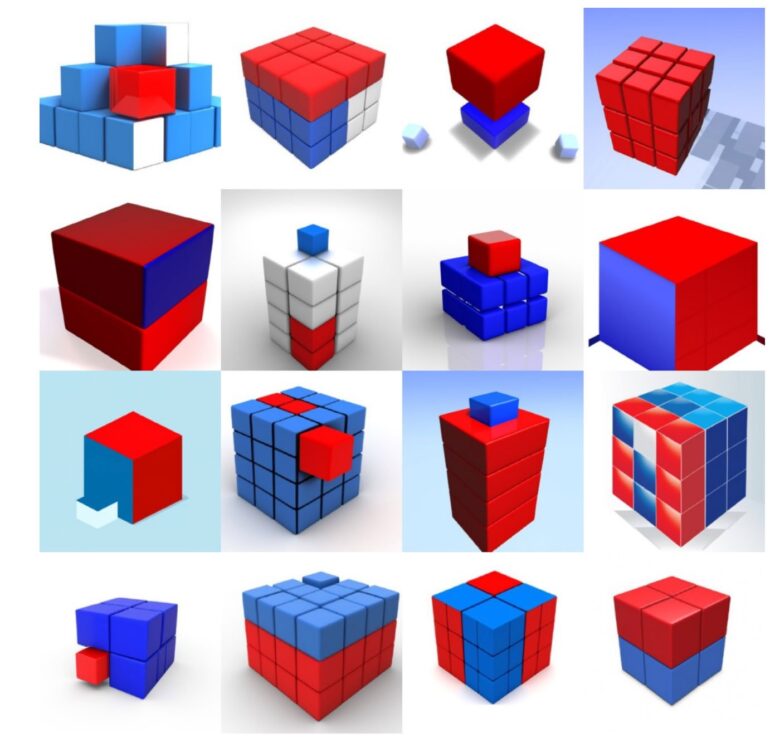

In the scientific paper that accompanied the unveiling of DALL-E 2, OpenAI points out some limitations of the system. For example, the researchers tested DALL-E's ability to perform compositionality, which is the meaningful merging of multiple object properties, such as color, shape and positioning in the image.

The tests show that DALL-E 2 does not understand the logical relationships given in the descriptions and therefore arranges colored cubes incorrectly, for example. The following motifs show DALL-E's attempt to place a red cube on top of a blue cube.

Meanwhile, some applicants have gained access to the closed beta test of the system and reveal further limitations of DALL-E 2.

Twitter user Benjamin Hilton reports in a corresponding thread that he often needs numerous input variants for a good result. As an example, he provides an image for the input "A renaissance-style painting of a modern supermarket aisle. In the aisle is a crowd of shoppers with shopping trolleys trying to get reduced items".

#dalle pic.twitter.com/2kuoVnaqR2

- Benjamin Hilton (@benjamin_hilton) April 28, 2022

Although shopping carts and customers are part of the image, the supermarket looks anything but modern. Misunderstandings would also occur in other cases, e.g. when the English word "operated" is processed in the medical sense instead of, for example, operating a machine.

Didn't do the captions - it's not great at text #dalle pic.twitter.com/4YvxdAqZPZ

- Benjamin Hilton (@benjamin_hilton) April 28, 2022

In some cases, complex input produced no meaningful results at all. As an example, Hilton cites the description "Two dogs dressed like roman soldiers on a pirate ship looking at New York City through a spyglass."

DALL-E 2 mixes concepts - and has a very positive attitude

In some cases, DALL-E 2 also mixes concepts: In one image, a skeleton and a monk are supposed to be sitting together, but the monk still looks pretty bony even after multiple attempts.

#dalle pic.twitter.com/rsCKclmtD0

- Benjamin Hilton (@benjamin_hilton) April 28, 2022

DALL-E 2 also had problems with faces, coherent plans like a site plan or a maze, and with text. The system could not handle negations at all: An input like "A spaceship without an apple" results in a spaceship with an apple.

By the way, DALL-E can represent 2 apples excellently - only when counting, the system is not so accurate. It only counts up to four.

6) "10 apples" pic.twitter.com/MoGgpNFSn4

- Benjamin Hilton (@benjamin_hilton) April 30, 2022

AdAdJoin our communityJoin the DECODER community on Discord, Reddit or Twitter - we can't wait to meet you.