Jan Leike, the former head of AI alignment and superintelligent AI safety at OpenAI, has sharply criticized his former employer for a lack of safety priorities and processes.

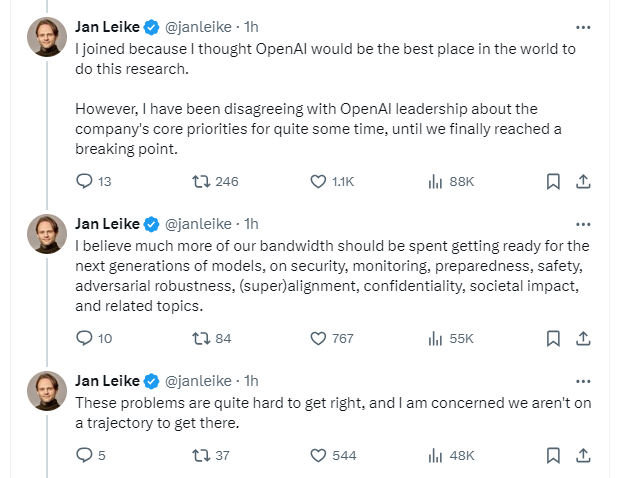

Leike joined OpenAI because he believed it was the best place in the world to do AI safety research specifically for superintelligence. But for some time, he disagreed with OpenAI's leadership about the company's core priorities, until a "breaking point" was reached, Leike says.

Leike, until yesterday the head of AI Alignment and Superintelligence Safety at OpenAI, announced his resignation shortly after OpenAI co-founder Ilya Sutskever, a renowned AI researcher and AI safety guru, also left the company.

Leike now says he is convinced that OpenAI needs to devote much more of its bandwidth to preparing for and securing the next generations of AI models.

When OpenAI announced its Superalignment team last summer, it said it would dedicate 20 percent of its then-available computing power to the team. Judging by Leike's account, that promise was not kept.

AI safety is a big challenge, Leike says, and OpenAI might be on the wrong track.

"Building smarter-than-human machines is an inherently dangerous endeavor. OpenAI is shouldering an enormous responsibility on behalf of all of humanity," writes Leike.

Leike says that for the past few months, his team had been "sailing against the wind." At times, it had to fight for computing power, and it became increasingly difficult to conduct this critical research.

Safety culture and processes have taken a back seat to "shiny products," Leike says. "We are long overdue in getting incredibly serious about the implications of AGI."

Sutskever's and Leike's departures fit with earlier rumors of growing internal opposition at OpenAI to the company's over-commercialization and rapid growth.