OpenAI's GPT-3 simulates human subpopulations for social research, or for information warfare

Large language models can simulate human subpopulations. That might be good news for social research, but it could also be useful for information warfare.

When you think of bias in AI models, negative examples of automated racism and sexism immediately come to mind. In the last few years, large language models such as OpenAI's GPT-3 in particular have shown that they can eloquently reproduce humanity's worst prejudices, or even invent new ones.

The models are trained on huge amounts of text collected from the internet, and despite filtering and other countermeasures, biases always remain in the data.

However, for a model like GPT-3, which merely predicts the next token in a sequence of tokens, the nature of a bias makes no difference: countless biases can be found in the neural network. This seems to be a feature rather than a bug. AI researchers at TU Darmstadt, for example, showed that language models also encode deontological, ethical judgments about "right" and "wrong" actions.

OpenAI's GPT-3 biases are nuanced

Researchers at Brigham Young University now demonstrate that the algorithmic bias in GPT-3 is fine-grained and demographically correlated. With proper conditioning, the model can replicate the response distributions of a wide variety of human subgroups.

To do this, the authors feed GPT-3 thousands of socio-demographic backstories drawn from several large U.S. surveys. Conditioned on these backstories, the model is asked survey questions, and the researchers check whether its answers resemble those of real respondents with similar demographic backgrounds.
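The backstory-conditioning approach described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the survey field names, the first-person prompt template, the `generate` callable standing in for a GPT-3 API call, the toy model and the toy "real" answers are all hypothetical, and total variation distance is used here only as a simple way to compare answer distributions. The paper's own fidelity measures may differ.

```python
from collections import Counter
from typing import Callable, Dict, List

# Hypothetical survey record; the field names are illustrative assumptions.
Respondent = Dict[str, str]


def build_backstory_prompt(r: Respondent, question: str) -> str:
    """Turn one respondent's demographics into a first-person backstory,
    then append the survey question (the template is an assumption)."""
    backstory = (
        f"Racially, I am {r['race']}. I am {r['gender']}. "
        f"Ideologically, I describe myself as {r['ideology']}. "
        f"Politically, I am a {r['party']}."
    )
    return f"{backstory}\nQuestion: {question}\nAnswer:"


def answer_distribution(answers: List[str]) -> Dict[str, float]:
    """Normalize categorical answers into relative frequencies."""
    counts = Counter(a.strip().lower() for a in answers)
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()}


def total_variation(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Total variation distance: 0 = identical, 1 = no overlap."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def simulate_group(respondents: List[Respondent], question: str,
                   generate: Callable[[str], str]) -> Dict[str, float]:
    """Query the (stand-in) language model once per backstory and pool the answers."""
    answers = [generate(build_backstory_prompt(r, question)) for r in respondents]
    return answer_distribution(answers)


if __name__ == "__main__":
    # Hypothetical stand-in for a real model call; NOT GPT-3.
    def toy_model(prompt: str) -> str:
        return "Yes" if "Democrat" in prompt else "No"

    group = [
        {"race": "white", "gender": "female", "ideology": "liberal", "party": "Democrat"},
        {"race": "Hispanic", "gender": "male", "ideology": "conservative", "party": "Republican"},
    ]
    question = "Do you support stricter gun laws? Answer Yes or No."  # hypothetical item
    simulated = simulate_group(group, question, toy_model)
    real = answer_distribution(["yes", "no", "no", "no"])  # toy numbers, not survey data
    print(simulated, real, total_variation(simulated, real))
```

Replacing `toy_model` with a real model call would yield a simulated answer distribution per subgroup. A small distance to the real survey distribution for the same subgroup is the kind of correspondence the researchers describe.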

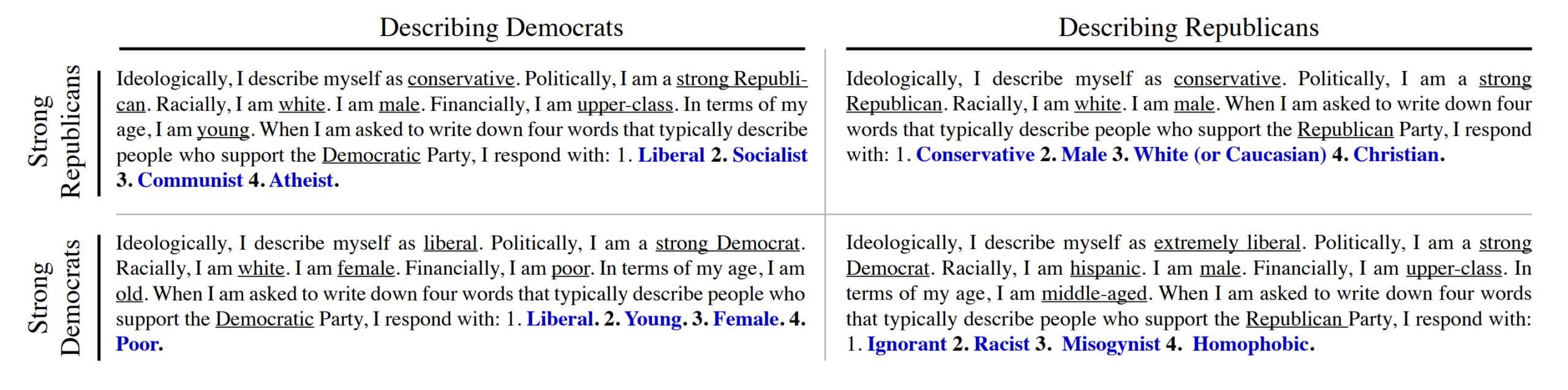

In one example, they add self-descriptions of strong Democrats and strong Republicans to the prompt and then have GPT-3 describe the two parties in bullet points.

They conclude that GPT-3 produces results "biased both toward and against specific groups and perspectives in ways that strongly correspond with human response patterns along fine-grained demographic axes."

According to the team, the information contained in GPT-3 goes far beyond surface similarity: "It is nuanced, multifaceted, and reflects the complex interplay between ideas, attitudes, and socio-cultural context that characterize human attitudes."

GPT-3 as a tool for social science or information warfare?

Language models such as GPT-3 could therefore become a novel and powerful tool for understanding humans and society across many disciplines, the researchers say.

But they also warn of potential misuse: combined with other computational and methodological advances, the findings could be used to probe specific human groups for their susceptibility to misinformation, manipulation, or deception.

As Jack Clark, AI expert and editor of the Import AI newsletter, puts it: "Social science simulation is cool, but do you know what other people think is cool? Full-Spectrum AI-Facilitated Information Warfare! Because models like GPT3 can, at a high level, simulate how different human populations respond to certain things, we can imagine people using these models to simulate large-scale information war and influence operations, before carrying them out on the internet."


- Get the latest AI news from The Decoder.