People prefer AI poetry to Shakespeare when they don't know it's AI poetry

A University of Pittsburgh study indicates that readers can't tell the difference between poems written by AI and those written by humans. The research shows that people actually rate computer-generated verses higher than works by famous poets like Shakespeare, but only if they don't know who wrote them.

In a large-scale test with 1,634 participants, people correctly identified AI versus human-written poems only 46.6 percent of the time, slightly worse than chance. Even more striking, participants were more likely to judge AI-generated poems as human-written than the actual human-written poems.

The research team used ChatGPT 3.5 to create five poems in the style of each of ten famous English-language poets, including William Shakespeare, Walt Whitman, and Emily Dickinson, and then asked participants to rate these AI creations alongside authentic poems by the same authors.

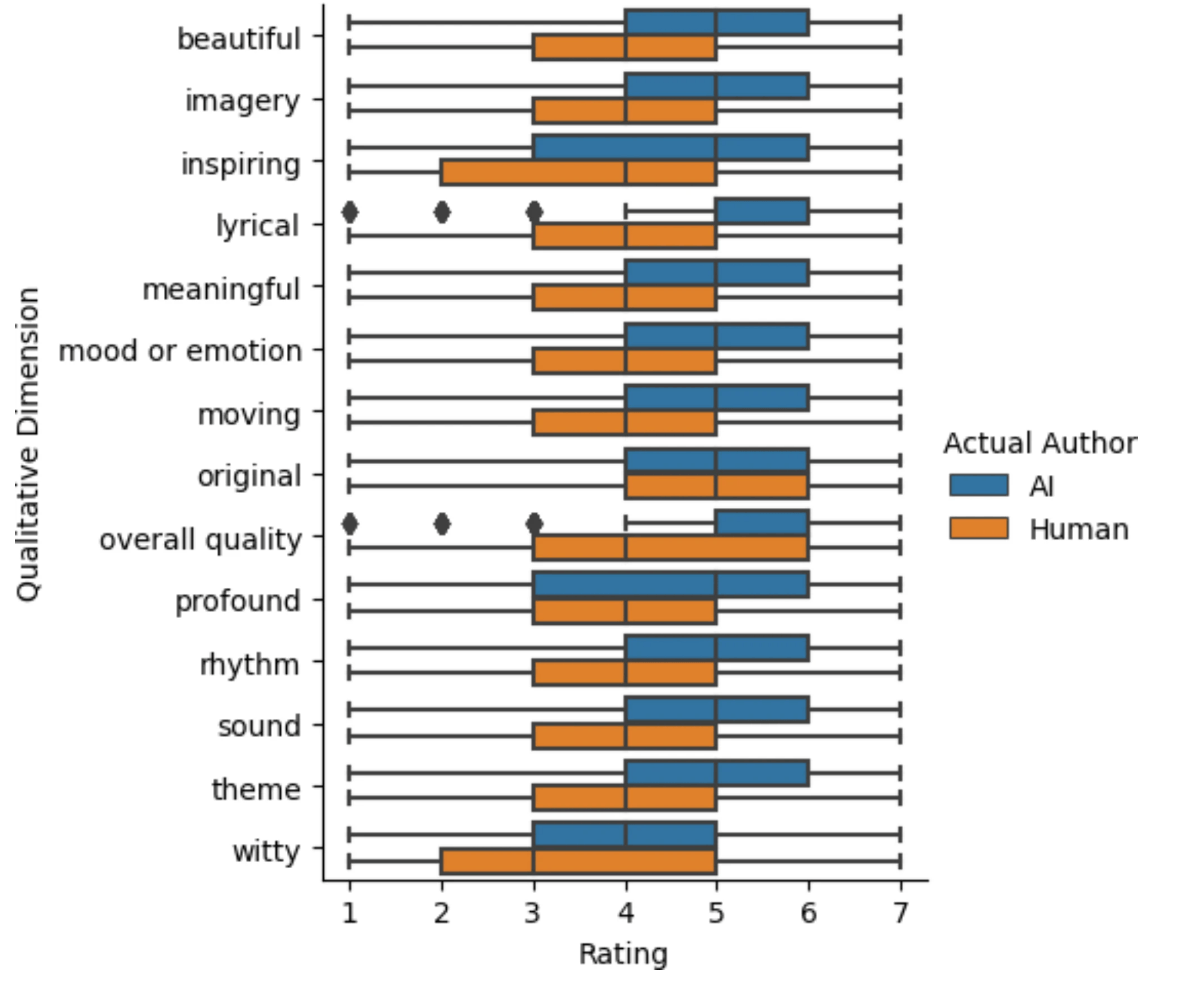

"We found that AI-generated poems were rated more favorably in qualities such as rhythm and beauty, and that this contributed to their mistaken identification as human-authored," write study authors Brian Porter and Edouard Machery.

Simpler language gives AI an edge

The researchers point to one possible explanation: AI poems use more direct, accessible language that non-experts find easier to understand. Participants in the study "mistakenly interpret their own preference for a poem as evidence that it is human-written," the researchers note.

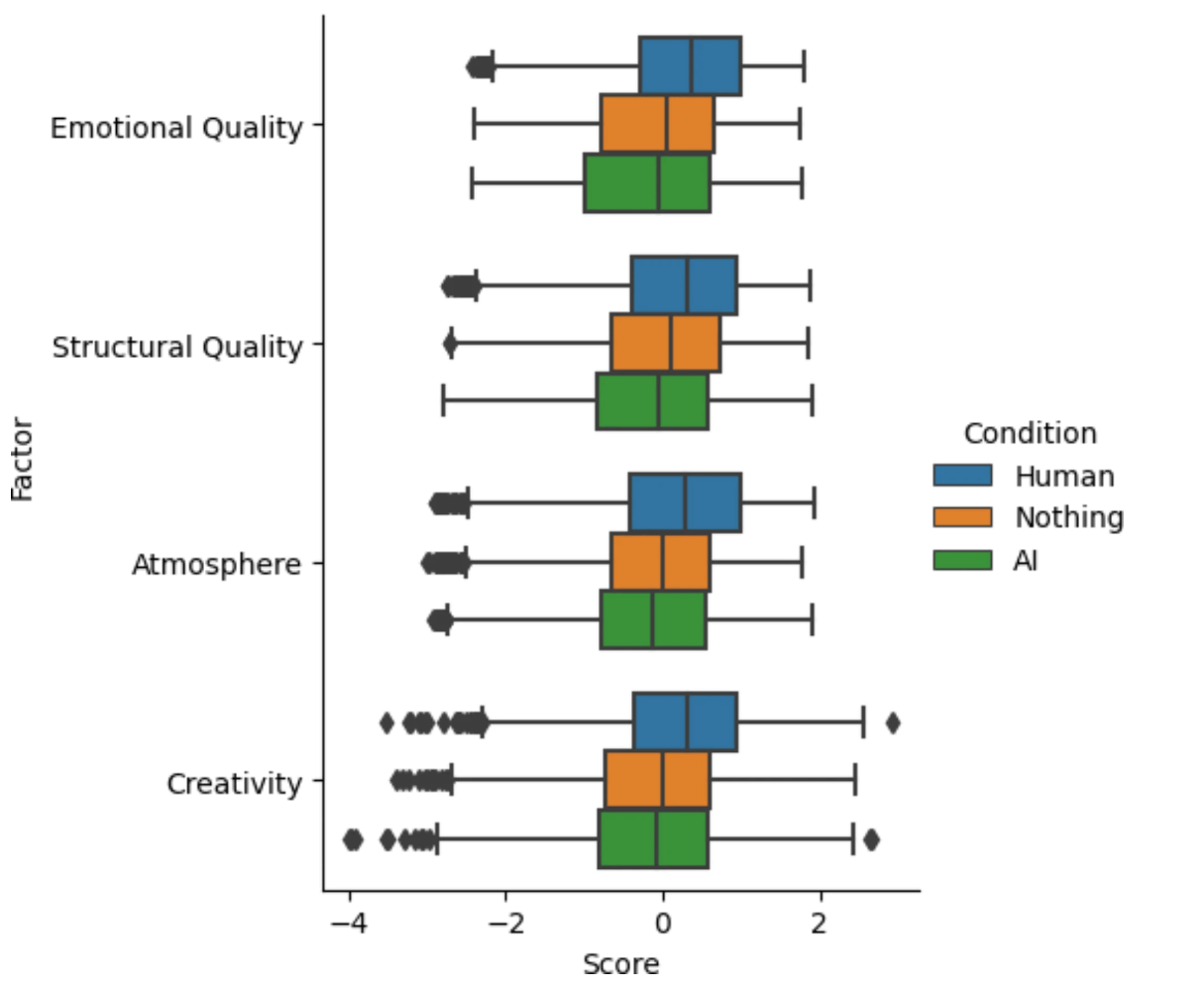

In a follow-up experiment with 696 participants, poems labeled as AI-generated received lower ratings, a pattern also seen in other creative fields. Across four key factors (creativity, mood, structure, and emotional quality), ratings stayed surprisingly balanced between AI and human authors when the source wasn't revealed.

Two caveats frame these findings. First, the study used ChatGPT 3.5, which is now an older version of the AI model. Newer versions might produce even more convincing poems.

Second, the AI poems specifically imitated existing poets' styles rather than creating entirely original work. Without these human templates, the AI-generated poems might look very different.

The researchers also note their study focused on "non-experts," who likely responded well to AI's simpler language. Poetry experts would likely spot differences more easily, given their deeper knowledge of poetic structure, rhyme, and meter.
