Peter Thiel reportedly warned Sam Altman about AI safety conflicts shortly before the OpenAI crisis

Fresh reporting shows deeper tensions at OpenAI than previously known, with investor Peter Thiel warning Sam Altman about internal conflicts shortly before Altman's dismissal in November 2023.

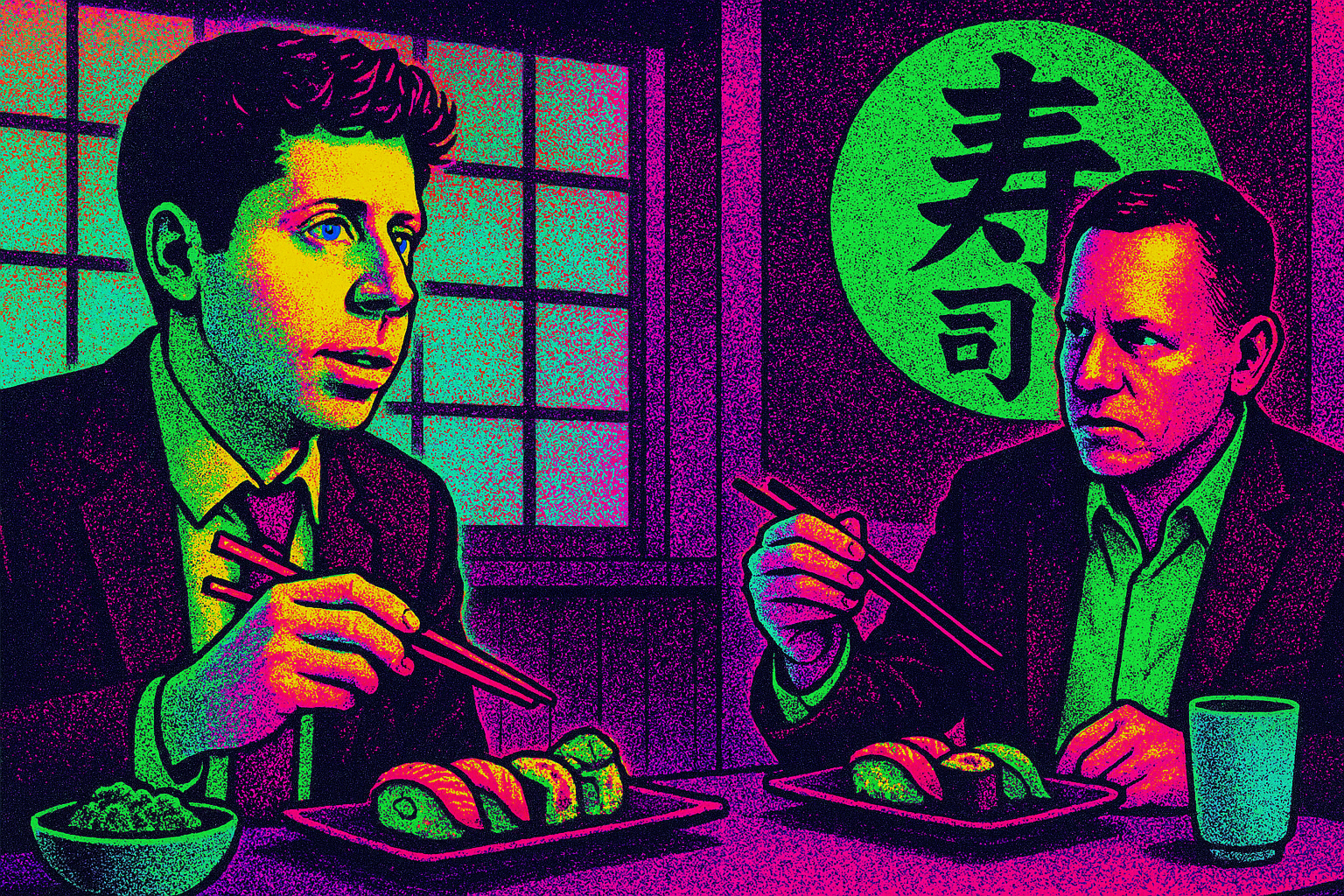

According to a Wall Street Journal report based on the upcoming book "The Optimist: Sam Altman, OpenAI, and the Race to Invent the Future" by reporter Keach Hagey, Thiel confronted Altman during a private dinner in Los Angeles. The conversation centered on growing friction between AI safety advocates and the company's commercial direction.

At that dinner, Thiel specifically warned about the influence of the "Effective Altruism" (EA) movement within OpenAI. "You don’t understand how Eliezer has programmed half the people in your company to believe in that stuff," Thiel told Altman, referring to AI researcher Eliezer Yudkowsky, whom he had previously funded.

Altman dismissed Thiel's concerns about an "AI safety people" takeover by pointing to Elon Musk's departure from OpenAI in 2018. Both Musk and Yudkowsky had consistently raised alarms about AI's existential risks. Similar safety concerns later prompted seven OpenAI employees to leave and establish Anthropic in 2021.

Leadership tensions surface

The internal divide became more visible in 2024 when several key AI safety figures departed OpenAI, including Chief Scientist Ilya Sutskever and Jan Leike, who had led the "Superalignment" team. Leike later publicly criticized the company's approach to safety, saying his team had to fight for computing resources.

The WSJ report reveals that CTO Mira Murati and Sutskever spent months gathering evidence about Altman's management practices. They documented instances of him playing employees against each other and bypassing safety protocols. During the GPT-4 Turbo launch, Altman reportedly provided incorrect information to the board and was not transparent about the structure of his private OpenAI Startup Fund. Similar concerns about rushed safety testing emerged after the release of GPT-4o in May 2024.

When Murati tried to raise issues directly with Altman, he responded by bringing HR representatives to her one-on-one meetings for several weeks, until Murati decided not to share her concerns with the board.

The final confrontation

According to the WSJ, four board members, including Sutskever, decided to remove Altman through a series of confidential video calls. Sutskever had prepared two extensive documents detailing Altman's alleged misconduct. The board also decided to remove Greg Brockman after Murati reported he undermined her authority by circumventing her to communicate directly with Altman.

To protect its sources, the board initially kept its public explanation minimal, stating only that Altman had not been "consistently candid." However, the situation quickly reversed when the company faced a potential mass exodus of employees.

Both Altman and Brockman were reinstated, with Murati and Sutskever signing the letter supporting their return despite their earlier roles in the dismissal. Sutskever was reportedly surprised by the staff's support for Altman, having expected that employees would welcome the change.

The WSJ's findings contradict earlier statements, particularly Murati's claim that she wasn't involved in Altman's removal. The systematic gathering of evidence against him suggests she played a central role in the events leading up to it. Both Murati and Sutskever have since left to establish their own AI companies.

The investigation adds weight to earlier reports that AI safety concerns were central to Altman's brief removal. The detailed account reveals how deeply these tensions ran through OpenAI's senior leadership, ultimately leading to significant changes in the organization's approach to AI safety and development.
