How do open source models compare to commercial models such as GPT-3? A new tool provides a handy overview of how many paid and free large language models perform.

The commercial AI sector can be roughly divided into three areas:

- Chip manufacturers and cloud providers such as Nvidia and Google operate at the hardware or infrastructure level.

- AI model providers are at the platform level.

- At the application level are companies that offer applications built on these models. They may or may not be the same companies that operate at the platform level.

For example, OpenAI offers ChatGPT, its own product based on GPT-3.5, while many other companies use the GPT API to build their own text generators.

At the platform level, numerous companies such as OpenAI and Aleph Alpha promote their models, alongside open source offerings such as GPT-NeoX. These companies and projects supply most of the language AI that consumers actually use.

Easily compare output from AI text models

Which language model is right for your application depends on several factors. In most cases, the quality of the generated output is likely to be the deciding factor.

However, logging in to each platform or installing each model and checking the output by hand is time-consuming and tedious. Former GitHub CEO Nathaniel Friedman has created Playground, a platform that automatically sends your prompt to multiple language models, both commercial and open source, and displays their outputs side by side.

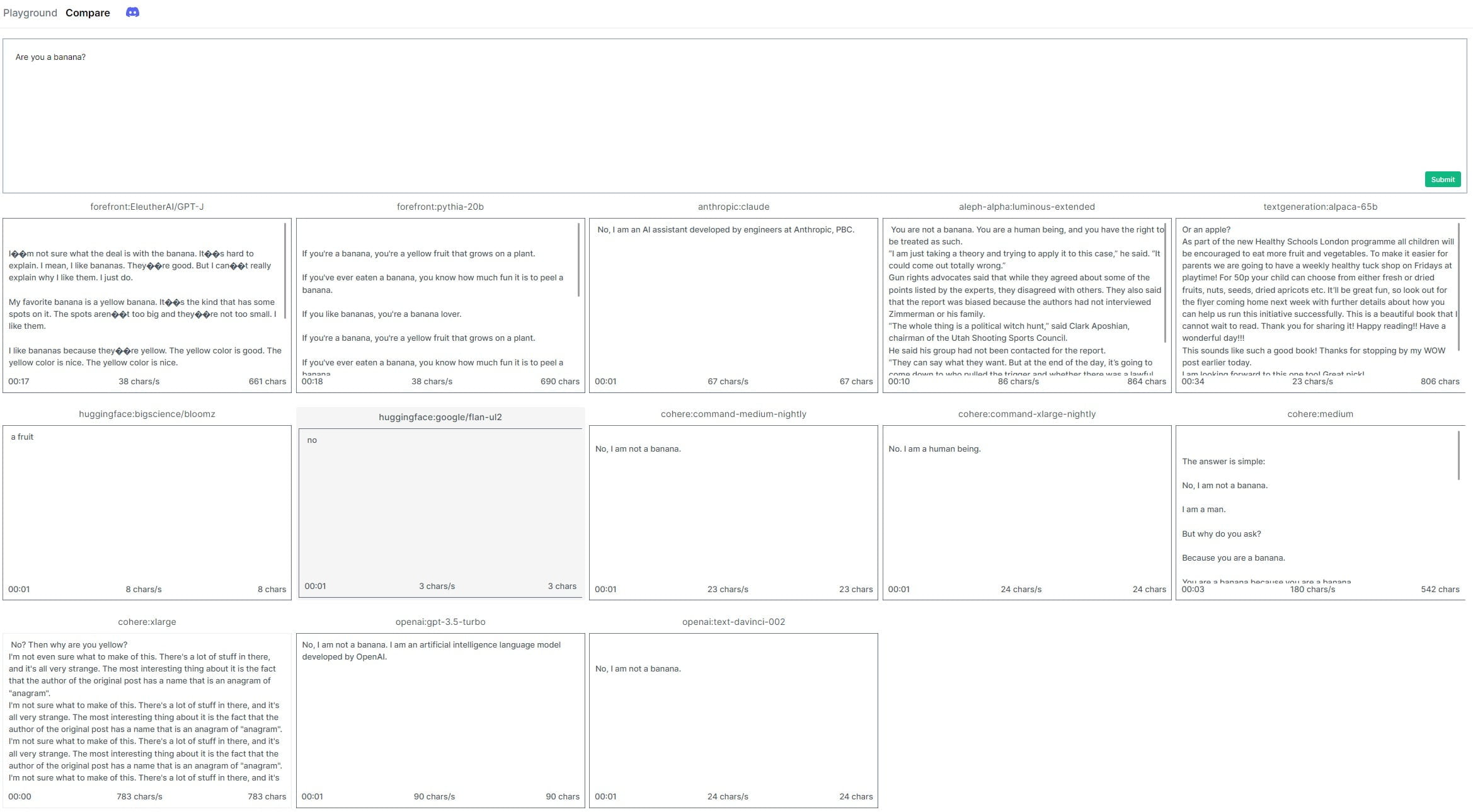

The screenshot above shows how the different models respond when asked whether they are a banana. You can right-click the image, open it in a new window, and zoom in to read the text.

OpenAI GPT models are clearly ahead

The tool makes it easy to compare language models. However, the comparison is not always fair: the adjustable parameters are applied to all models at once, but not every model responds to a given parameter in the same way.

For example, the "temperature" slider gives the model more or less creative leeway, and each model is likely to use that leeway to a different degree depending on its training and architecture.

The platform offers a workaround for this as well: the same model can be run in parallel with different parameter settings, which gives you a sense of how strongly a given parameter affects its output.
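To illustrate what a temperature setting typically does, here is a minimal sketch of the common approach in language model sampling: the model's raw scores (logits) for candidate next tokens are divided by the temperature before a softmax turns them into probabilities. This is an assumption about general practice, not Playground's or any specific model's implementation, and the tokens and logit values below are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw logits into probabilities after scaling by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng=random):
    """Draw one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    tokens = ["yes", "no", "maybe"]  # hypothetical next-token candidates
    logits = [2.0, 1.0, 0.1]         # hypothetical model scores
    # Comparing several temperatures side by side, similar in spirit to
    # running the same model with different settings in parallel.
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs], sample_token(tokens, logits, t))
```

Low temperatures concentrate almost all probability on the top-scoring token, while high temperatures flatten the distribution so less likely tokens get picked more often. Because each model produces differently shaped logits, the same slider value can have a noticeably different effect from one model to the next.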

Still, Friedman describes the quality advantage of OpenAI's GPT models on his comparison platform as "depressing."

"OpenAI should be paying for the entire site because it's excellent advertising," Friedman writes. But he adds that some pre-trained models may simply need more fine-tuning and reinforcement learning from human feedback (RLHF) to produce significantly better results.

Friedman plans to release the code of his platform in the near future. An overview of the currently integrated models and their technical specifications is available here. You can access the Playground platform here.