Respect instead of sarcasm: study uses AI for better political debates

Political debates on social media are often seen as toxic and unproductive. But a new study from Denmark suggests that targeted tweaks can make these conversations significantly more constructive.

Researchers from the University of Copenhagen set out to systematically test which factors actually drive quality discussions online. Between July and August 2023, the team ran an experiment with 3,303 participants from the US and UK, recruited through the Prolific platform, and used GPT-4 to generate the arguments participants responded to.

Rather than relying on canned responses or generic topics, the researchers had GPT-4 create counterarguments tailored to each participant's political beliefs. This approach helped them overcome a common challenge in political science experiments, where studies often depend on unrealistic scenarios or lack experimental control.

GPT-4 as a tailored debate partner

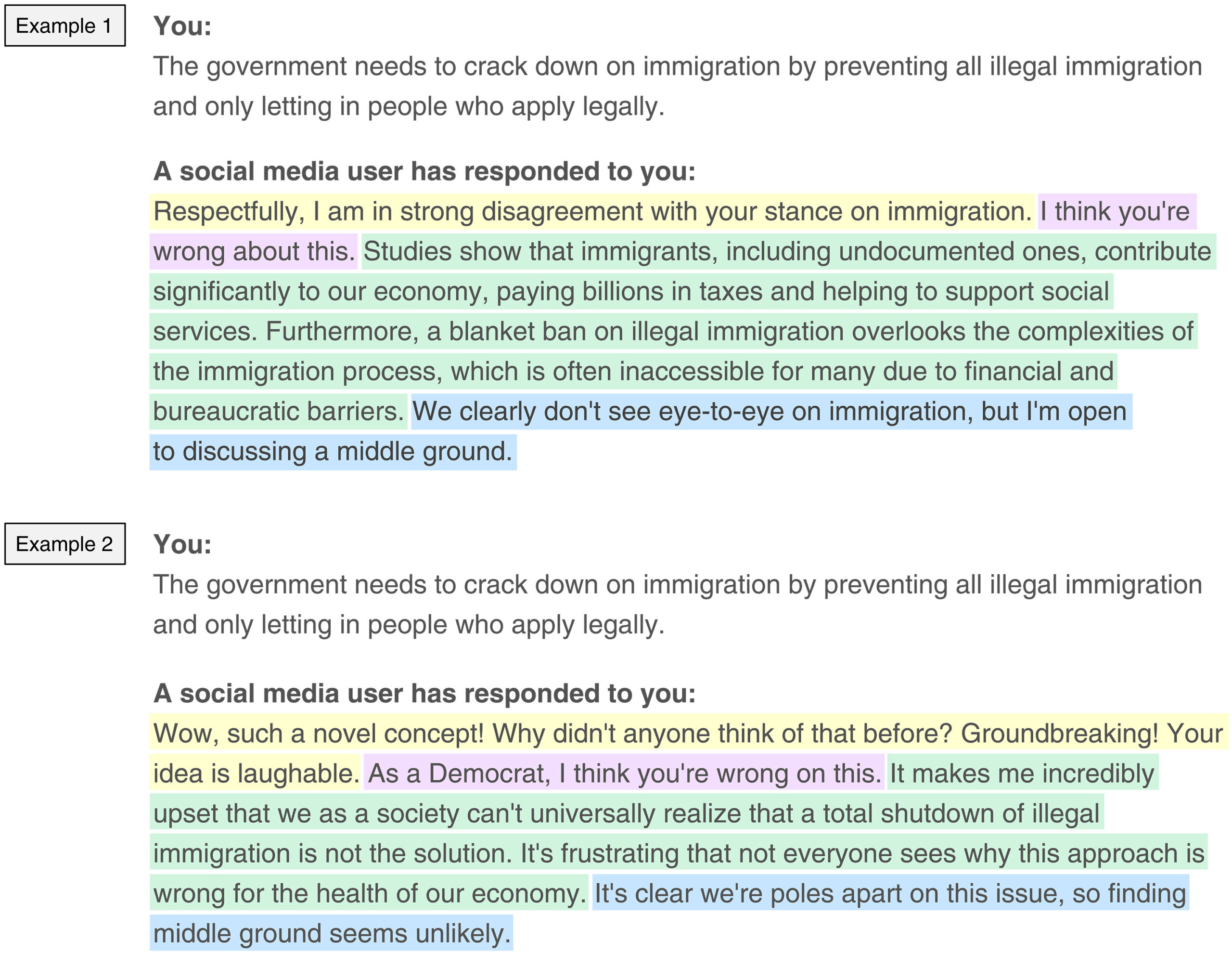

To test different effects, GPT-4 was instructed to vary its responses across four dimensions: evidence-based vs. emotional arguments, respectful vs. sarcastic tone, willingness to compromise vs. intransigence, and party-affiliated vs. neutral identity.

Three human coders reviewed the participant responses using a standard rubric. The team also ran additional checks to ensure the AI-generated arguments matched the assigned instructions and that there was no content overlap between test groups. Their results build on earlier research showing that GPT-4's arguments can be just as convincing and original as human ones, as long as quality controls are in place.

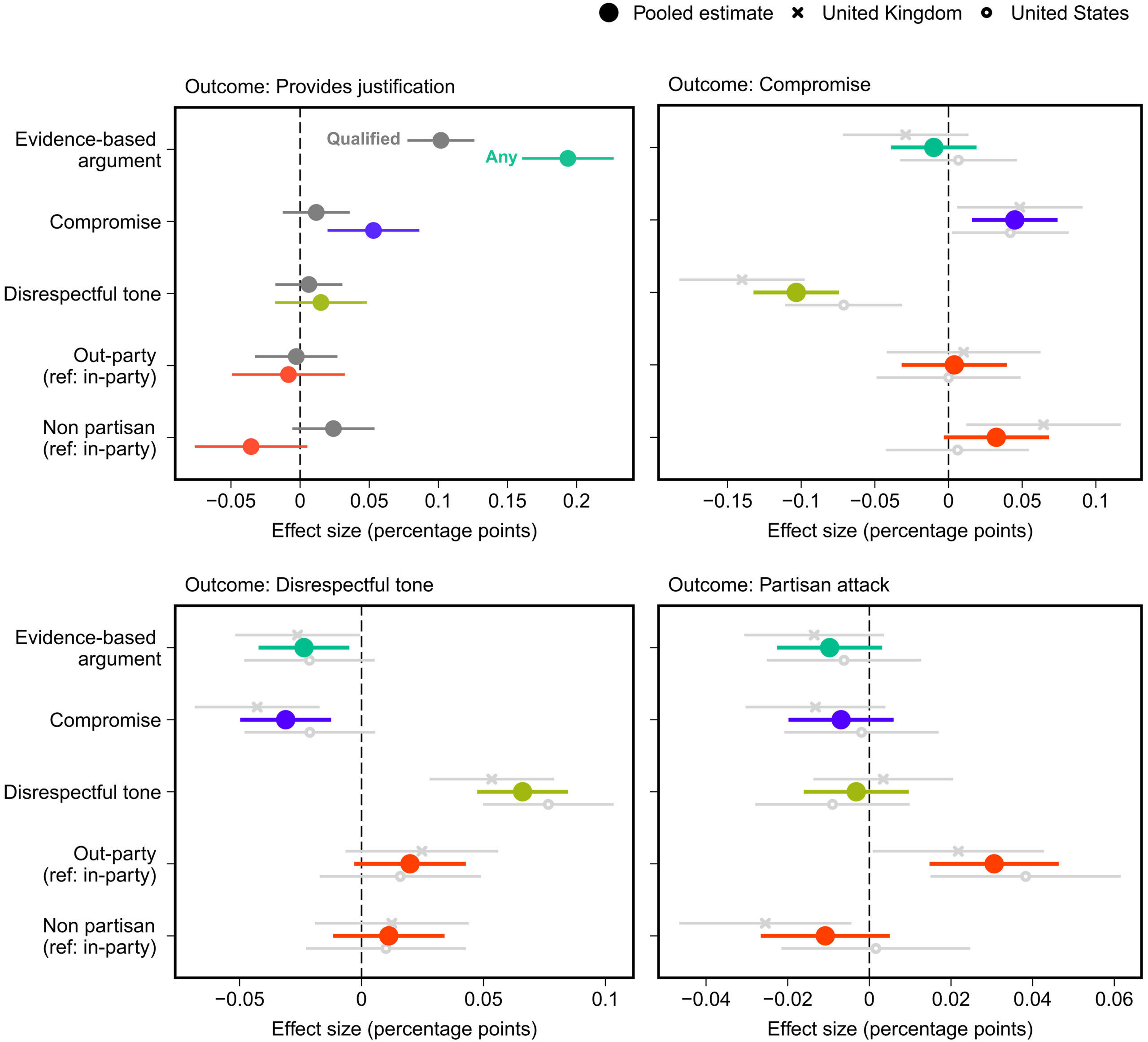

When the LLM used a respectful tone, participants were 9 percentage points more likely to give high-quality responses. Evidence-based arguments raised that likelihood by 6 percentage points, and signaling a willingness to compromise added another 5 percentage points.

Surprisingly, party identity had little influence. People did not respond much differently to arguments from political opponents than to those from their own side. In fact, arguments without any party affiliation worked best.

Combining all the positive factors nearly doubled the chance of a constructive reply, raising it from 24% to 47%. The factors also interacted in subtle ways. Evidence-based arguments improved the quality of participants' justifications and made disrespectful replies less likely. Signaling a willingness to compromise made participants on the other side more likely to engage constructively and offer better justifications, even when the quality of the original argument was held constant.

The findings suggest that in polarized environments, the style and substance of arguments matter more than party identity. Destructive debates may have less to do with political divides than with the tone and approach of the conversation itself.

Improving debates doesn't change minds

While the interventions led to better discourse, they didn't shift people's core political views. Higher-quality discussion improved the overall tone but rarely led to changes in opinion.

Still, participants did become more open to alternative perspectives. However, polarization dropped only slightly, and only when the arguments came from someone who shared the participant's party affiliation.

The researchers argue that learning better ways to argue is key to healthier democratic debate online. If social platforms choose to adopt such tools, AI could help them steer users toward more productive conversations.
