Rubin CPX is Nvidia's first GPU built specifically for massive-context AI applications

Nvidia is planning a new class of GPU called Rubin CPX, designed specifically for the compute-heavy analysis phase of AI inference. The underlying strategy, known as split inference, is backed by new benchmark records from Nvidia’s Blackwell Ultra architecture, which uses a similar approach in software.

When an AI model tackles a complex task, it typically works in two phases. For example, if you ask a language model to summarize a long book, it first has to read and analyze the whole text. This is the compute-intensive "analysis" or "context phase." Only after that does the "generation phase" begin, where the summary is created word by word. Nvidia argues that these two phases have different hardware requirements, and Rubin CPX is its new chip built specifically for the demanding analysis phase.

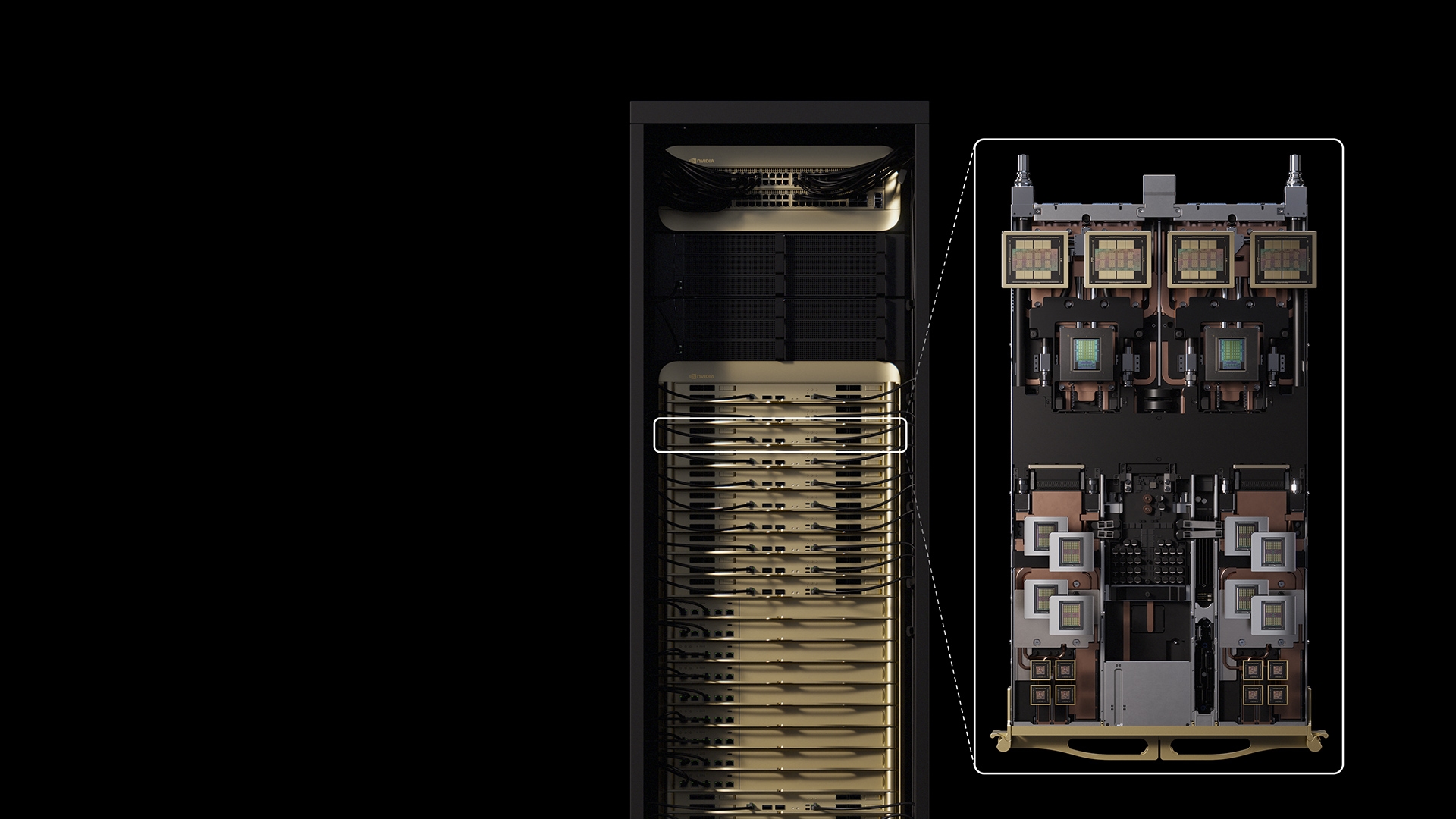

According to an official announcement, Rubin CPX is a derivative of the Rubin product line planned for 2026 and is set to launch at the end of 2026. It will be available as a plug-in card or a standalone computer for data centers.

A specialized chip for massive context windows

Nvidia says Rubin CPX will shine on tasks that need to process huge amounts of data at once. This includes AI applications that require millions of tokens as context, like analyzing entire codebases or generating videos. CEO Jensen Huang calls CPX the first CUDA GPU built specifically for "massive-context AI."

The technical foundation for this is Nvidia’s "disaggregated inference" strategy. As detailed in a technical blog post, the compute-bound context phase places different demands on hardware than the memory bandwidth-bound generation phase that follows. Rubin CPX will use a monolithic die design, deliver 30 petaFLOPs of NVFP4 compute, pack 128 GB of GDDR7 memory, and offer triple the attention layer acceleration compared to Blackwell.
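To make the compute-bound versus bandwidth-bound distinction concrete, here is a rough back-of-the-envelope sketch. It is a simplification and does not come from Nvidia: it looks at a single square weight matrix, ignores activations and the attention cache, and compares the resulting FLOPs-per-byte figure against a hypothetical `machine_balance` value (peak FLOPs divided by memory bandwidth), which is an assumed parameter and not a Rubin CPX specification.

```python
def arithmetic_intensity(tokens_per_pass: int, d_model: int, bytes_per_weight: float) -> float:
    """FLOPs per byte of weight traffic for one d_model x d_model matrix multiply.

    Simplified model: counts only weight reads, not activations or cached state.
    """
    flops = 2 * tokens_per_pass * d_model * d_model      # one multiply-add per weight per token
    weight_bytes = d_model * d_model * bytes_per_weight  # weights are read once per pass
    return flops / weight_bytes


def bound_by(intensity: float, machine_balance: float) -> str:
    """Classify a workload against a hypothetical hardware FLOPs-per-byte balance point."""
    return "compute" if intensity >= machine_balance else "memory bandwidth"


# Context phase: thousands of prompt tokens share one read of the weights.
context = arithmetic_intensity(tokens_per_pass=8192, d_model=4096, bytes_per_weight=0.5)

# Generation phase: one new token per step, so the weights are re-read for very little work.
generation = arithmetic_intensity(tokens_per_pass=1, d_model=4096, bytes_per_weight=0.5)

ASSUMED_BALANCE = 1000.0  # hypothetical value chosen only for illustration
print(context, bound_by(context, ASSUMED_BALANCE))
print(generation, bound_by(generation, ASSUMED_BALANCE))
```

In this toy model the intensity scales directly with the number of tokens processed per pass, which is why a chip tuned for raw compute can suit the context phase while the generation phase benefits more from fast memory.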

Blackwell results back up the approach

Nvidia points to recent benchmark results to support its strategy. In MLPerf Inference v5.1, the latest round of the industry-standard benchmark, Nvidia submitted Blackwell Ultra results for the first time and set new records.

Blackwell Ultra, running in the GB300 NVL72 rack system, delivered up to 45 percent higher performance per GPU than standard Blackwell, according to Nvidia. Throughput on the new DeepSeek-R1 benchmark is reportedly five times higher than on the previous Hopper architecture.

Nvidia notes that these tests already used a software approach to split inference. For the Llama 3.1 405B model, "disaggregated serving" was used to divide the context and generation phases across different GPUs. This method, managed by the Nvidia Dynamo Framework, boosted per-GPU throughput by nearly 1.5x compared to traditional methods. With Rubin CPX, this software approach gets a dedicated hardware solution. Nvidia says partners like Cursor (code editor), Runway (video AI), and Magic (AI agents) are already evaluating the technology for their own use cases.
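The idea of dividing the two phases across different GPUs can be sketched as a toy router. This is an illustration only and not the Nvidia Dynamo API: `PrefillWorker`, `DecodeWorker`, `KVCache`, and `Router` are hypothetical names, the "model" emits placeholder tokens, and the round-robin scheduling is an assumption made to keep the example short.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class KVCache:
    """Stand-in for the per-request state produced by the context phase."""
    tokens: List[str]


@dataclass
class PrefillWorker:
    name: str

    def run(self, prompt: str) -> KVCache:
        # Processes the whole prompt in one pass (compute-heavy in a real system).
        return KVCache(tokens=prompt.split())


@dataclass
class DecodeWorker:
    name: str

    def run(self, cache: KVCache, max_new_tokens: int) -> List[str]:
        # Emits one token per step and extends the cache each time
        # (memory-bandwidth-heavy in a real system).
        generated = []
        for step in range(max_new_tokens):
            token = f"<tok{step}>"  # placeholder instead of a model prediction
            cache.tokens.append(token)
            generated.append(token)
        return generated


class Router:
    """Sends each request to one worker per phase, round-robin within each pool."""

    def __init__(self, prefill_pool: List[PrefillWorker], decode_pool: List[DecodeWorker]):
        self.prefill_pool = prefill_pool
        self.decode_pool = decode_pool
        self._count = 0

    def serve(self, prompt: str, max_new_tokens: int) -> Tuple[str, str, List[str]]:
        prefill = self.prefill_pool[self._count % len(self.prefill_pool)]
        decode = self.decode_pool[self._count % len(self.decode_pool)]
        self._count += 1
        cache = prefill.run(prompt)  # context phase on one device
        # A real deployment would transfer the cache between devices at this point.
        tokens = decode.run(cache, max_new_tokens)  # generation phase on another device
        return prefill.name, decode.name, tokens


router = Router([PrefillWorker("ctx-0")], [DecodeWorker("gen-0"), DecodeWorker("gen-1")])
print(router.serve("summarize this long book", max_new_tokens=3))
print(router.serve("analyze this codebase", max_new_tokens=2))
```

Keeping the pools separate means each one can be sized independently, for example a few compute-oriented devices for the context phase feeding a larger number of generation devices.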
