Runway's Sora competitor Gen-3 Alpha now available

Update –

- Added release info

Update July 01:

After a short closed alpha phase with select testers, Gen-3 Alpha is now available to everyone. To use the text-to-video model, a paid subscription starting at $15 per month is required.

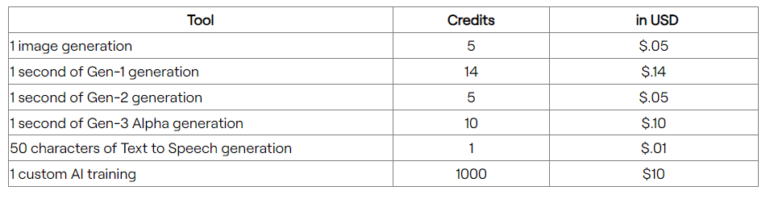

Video generation is billed through a credit system. According to Runway, the standard plan includes 625 credits per month, which equates to 62 seconds of Gen-3 generation. Additional credits can be purchased (minimum of 1,000 credits for $10), and the more expensive plans include more credits.
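
As a rough back-of-the-envelope check, the figures above work out to about 10 credits per second of video, or roughly 10 cents per generated second when buying add-on credits. The following is a minimal sketch that assumes a flat per-second rate derived only from Runway's "625 credits ≈ 62 seconds" figure. Actual charges per generation may differ, and the function names are hypothetical.

```python
# Hypothetical helper: the flat rate below is inferred from Runway's stated
# "625 credits = 62 seconds" figure and is an assumption, not an official rate.
CREDITS_PER_SECOND = 625 / 62  # ~10.08 credits per second of Gen-3 video

# Add-on credit price as quoted: 1,000 credits for $10.
DOLLARS_PER_CREDIT = 10 / 1000  # $0.01 per credit


def seconds_for_credits(credits: int) -> float:
    """Approximate seconds of Gen-3 Alpha video a credit balance buys."""
    return credits / CREDITS_PER_SECOND


def cost_per_second() -> float:
    """Approximate dollar cost per generated second at the add-on price."""
    return CREDITS_PER_SECOND * DOLLARS_PER_CREDIT


print(f"{seconds_for_credits(625):.0f} s from the standard plan's 625 credits")
print(f"{seconds_for_credits(1000):.0f} s from a 1,000-credit top-up")
print(f"${cost_per_second():.2f} per second at the add-on price")
```

Under this assumption, a minimum 1,000-credit top-up buys roughly 99 seconds of video.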

Gen-3 Alpha can be accessed via RunwayML.

Original article from June 17:

Runway Gen-3 Alpha: New video model closes gap with OpenAI's Sora

Runway has introduced Gen-3 Alpha, a new AI model for video generation. According to Runway, it represents a "significant improvement" over its predecessor, Gen-2, in terms of detail, consistency, and motion representation.

Gen-3 Alpha was trained on a mix of videos and images. Like its predecessor, which was launched in November 2023, it supports text-to-video, image-to-video, and text-to-image generation, as well as control modes such as Motion Brush, Advanced Camera Controls, and Director Mode. Runway plans to add further tools for even finer control over structure, style, and motion.

Runway Gen-3 Alpha: First model in a series with new infrastructure

According to Runway, Gen-3 Alpha is the first in a series based on a new training infrastructure for large multimodal models. However, the startup does not reveal what specific changes the researchers have made.

There is no technical paper. The only information available is a blog post with numerous unedited video examples, each up to ten seconds long, along with the prompts used to create them.

The company highlights the model's ability to generate human characters with a range of actions, gestures, and emotions. According to Runway, Gen-3 Alpha also shows progress in the temporal control of elements and transitions within a scene.

"Training Gen-3 Alpha was a collaborative effort from a cross-disciplinary team of research scientists, engineers, and artists," RunwayML emphasizes. The model was designed to interpret a wide range of styles and film concepts.

Customized models for industry customers

In addition to the standard version, Runway says it is working with entertainment and media companies on customized versions of Gen-3. These are designed to offer greater stylistic control and more consistent characters, and to meet specific customer requirements. Interested companies can submit an inquiry through a contact form on Runway's website.

Alongside Gen-3 Alpha, Runway is announcing new safety features, including an improved moderation system and support for the C2PA standard, which is used by all major commercial image models. The company also sees the model as a step toward general world models and a new generation of AI video tools.

Runway's race to catch up with Sora

In February 2024, ChatGPT developer OpenAI presented its Sora video model, which marked a new milestone in terms of consistency and image quality. However, the software is still not freely available and is probably far from being commercialized. Since then, several competing companies have introduced similar technologies, most notably KLING and Vidu from China.

With Gen-3 Alpha, RunwayML, a pioneer in this field for several years, appears to have caught up. According to the company, Gen-3 Alpha will be available in the next few days.
