Sakana AI's new algorithm lets large language models work together to solve complex problems

The Japanese AI startup Sakana AI has developed a new method that lets multiple large language models, such as ChatGPT and Gemini, work together on the same problem. Early tests suggest this collaborative approach outperforms individual models working alone.

The technique, called AB-MCTS (Adaptive Branching Monte Carlo Tree Search), is an algorithm that enables several AI models to tackle a problem at the same time. The models exchange and refine their suggestions, working together much like a human team.

AB-MCTS combines two different search strategies: it can either refine an existing solution (depth search) or try out entirely new approaches (breadth search). A probability model continuously decides which direction to pursue next.
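The choice between going deeper and going wider can be illustrated with a small sketch. The code below is not Sakana AI's implementation: it is a flat, simplified stand-in that assumes the "probability model" is a Beta posterior per action chosen by Thompson sampling. The names `BetaArm`, `search`, and the toy problem (guessing a number close to `TARGET`) are all hypothetical, and the real algorithm keeps such statistics per node of a search tree rather than globally.

```python
import random
from dataclasses import dataclass


@dataclass
class BetaArm:
    """Beta posterior over how rewarding an action has been so far."""
    alpha: float = 1.0
    beta: float = 1.0

    def sample(self, rng: random.Random) -> float:
        return rng.betavariate(self.alpha, self.beta)

    def update(self, reward: float) -> None:
        # reward is assumed to lie in [0, 1]
        self.alpha += reward
        self.beta += 1.0 - reward


def search(generate, refine, evaluate, budget: int, seed: int = 0):
    """Spend `budget` steps, picking 'wider' or 'deeper' by Thompson sampling."""
    rng = random.Random(seed)
    arms = {"wider": BetaArm(), "deeper": BetaArm()}
    counts = {"wider": 0, "deeper": 0}
    best_score, best_solution = -1.0, None

    for _ in range(budget):
        if best_solution is None:
            action = "wider"  # nothing to refine yet
        else:
            # Draw one sample per action and follow the larger draw.
            action = max(arms, key=lambda name: arms[name].sample(rng))

        if action == "wider":
            solution = generate(rng)               # brand-new attempt
        else:
            solution = refine(best_solution, rng)  # improve the current best

        score = evaluate(solution)
        arms[action].update(score)
        counts[action] += 1
        if score > best_score:
            best_score, best_solution = score, solution

    return best_score, best_solution, counts


# Hypothetical toy problem: find a number close to TARGET in [0, 1].
TARGET = 0.7


def toy_generate(rng):
    return rng.random()


def toy_refine(x, rng):
    return min(1.0, max(0.0, x + rng.gauss(0.0, 0.05)))


def toy_evaluate(x):
    return 1.0 - abs(x - TARGET)


if __name__ == "__main__":
    score, solution, counts = search(toy_generate, toy_refine, toy_evaluate, budget=50)
    print(f"best score {score:.3f} at x={solution:.3f}, actions used: {counts}")
```

In an LLM setting, `generate` would stand for a fresh prompt and `refine` for a prompt that includes the previous answer plus feedback; both are placeholders here.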

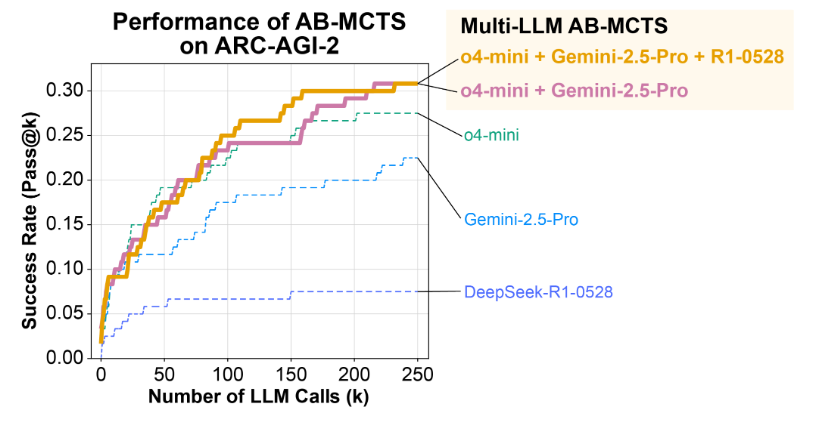

In the multi-LLM version (Multi-LLM AB-MCTS), the system dynamically picks which model, such as ChatGPT, Gemini, or DeepSeek, is best suited for the current task. This selection adapts on the fly depending on which model delivers the strongest results for a particular problem.
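One way to picture this adaptive selection is as a bandit problem over models. The sketch below assumes, purely for illustration, that each model's track record is kept as a Beta posterior and that the next model is chosen by Thompson sampling. The model names and success rates in `STUB_MODELS` are invented stand-ins for real LLM calls and do not come from Sakana AI's experiments.

```python
import random


def pick_model(stats, rng):
    """Sample a plausible success rate per model and pick the highest draw."""
    return max(stats, key=lambda name: rng.betavariate(stats[name][0], stats[name][1]))


def run(models, rounds, seed=0):
    """Repeatedly pick a model, observe a reward in [0, 1], update its posterior."""
    rng = random.Random(seed)
    stats = {name: [1.0, 1.0] for name in models}  # [alpha, beta] per model
    counts = {name: 0 for name in models}
    for _ in range(rounds):
        name = pick_model(stats, rng)
        reward = models[name](rng)
        stats[name][0] += reward
        stats[name][1] += 1.0 - reward
        counts[name] += 1
    return counts


# Hypothetical stand-ins for LLM calls: each returns 1.0 on "success", else 0.0.
STUB_MODELS = {
    "model_a": lambda rng: 1.0 if rng.random() < 0.8 else 0.0,
    "model_b": lambda rng: 1.0 if rng.random() < 0.3 else 0.0,
    "model_c": lambda rng: 1.0 if rng.random() < 0.1 else 0.0,
}

if __name__ == "__main__":
    print(run(STUB_MODELS, rounds=300))
```

Over many rounds, calls tend to concentrate on whichever stub succeeds most often on the problem at hand, while weaker ones are still tried occasionally.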

AB-MCTS improves results on ARC-AGI-2

During tests on the challenging ARC-AGI-2 benchmark, Multi-LLM AB-MCTS solved more problems than any single model working alone (Single-LLM AB-MCTS). In several cases, only the combination of different models led to the right answer.

There are still some limitations. When allowed unlimited guesses, the system finds a correct answer about 30 percent of the time. But in the official ARC-AGI-2 benchmark, where submissions are usually limited to one or two answers, the success rate drops significantly.
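A short calculation shows why the gap can be large. Assume, hypothetically, that the search has produced a pool of candidate answers of which only a few are correct, and that the final submissions are drawn from the pool at random because no reliable selector exists. The function name and the numbers 250 and 5 below are illustrative assumptions, not figures from Sakana AI.

```python
from math import comb


def chance_random_pick_hits(n_candidates: int, n_correct: int, n_submissions: int) -> float:
    """Probability that at least one of `n_submissions` randomly chosen
    candidates (without replacement) is correct."""
    if not 0 <= n_correct <= n_candidates:
        raise ValueError("n_correct must be between 0 and n_candidates")
    if not 0 <= n_submissions <= n_candidates:
        raise ValueError("n_submissions must be between 0 and n_candidates")
    # Count the ways to pick only wrong candidates, then take the complement.
    all_wrong = comb(n_candidates - n_correct, n_submissions)
    return 1.0 - all_wrong / comb(n_candidates, n_submissions)


if __name__ == "__main__":
    # Illustrative numbers only: 250 candidates, 5 of them correct, 2 submissions.
    p = chance_random_pick_hits(250, 5, 2)
    print(f"chance of submitting a correct answer: {p:.1%}")
```

Even when a correct answer exists somewhere in the pool, blind selection rarely surfaces it, which is why a better way of ranking candidates matters.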

To address this, Sakana AI plans to develop new methods for automatically identifying and selecting the best suggestions. One idea is to use an additional AI model to evaluate the options. The approach could also be combined with systems where AI models discuss solutions with each other.

Sakana AI has released the algorithm as open-source software under the name TreeQuest, so other developers can apply the method to their own problems.

It's been a busy summer for the Tokyo startup. Sakana AI recently launched its self-evolving Darwin-Gödel Machine, an agent that rewrites its own Python code in rapid genetic cycles. Dozens of code variants are generated and tested on the SWE-bench and Polyglot suites, with only the top performers making the cut. After just 80 rounds, SWE-bench accuracy jumped from 20% to 50%, while Polyglot scores more than doubled to 30.7%, moving ahead of other leading open-source models.

In June, the company's ALE agent placed 21st at a live AtCoder Heuristic Contest, outperforming over 1,000 human participants. ALE uses Google's Gemini 2.5 Pro and classic optimization techniques like simulated annealing, beam search, and tabu lists, showing that LLM-based agents can handle industrial-grade optimization tasks.

These advances build on January's Transformer² study, which tackled continual learning in large language models. Taken together, Darwin-Gödel, ALE, and Transformer² outline a clear direction: evolve code, iterate solutions, and let modular, nature-inspired agents tackle problems that once needed teams of engineers.
