Large language models alone are unlikely to lead to AGI, says Sam Altman. Finding the solution is the next challenge for OpenAI.

The CEO and co-founder of OpenAI gave a talk and answered questions as part of the 2023 Hawking Fellowship Award, which he accepted on behalf of the team. Questions from the Cambridge students included the role of open source, AI security, awareness, and, finally, the role of scaling on the path to Artificial General Intelligence (AGI).

When asked whether further scaling of large language models would lead to AGI, Altman replied that while the models have made great progress and he sees a lot of potential, it is very likely that another breakthrough will be needed. Although GPT-4 has impressive capabilities, it also has weaknesses that make it clear that AGI has not yet been achieved.

"I think we need another breakthrough. We can push on large language models quite a lot, and we should, and we will do that. We can take our current hill that we're on and keep climbing it, and the peak of that is still pretty far away. But within reason, if you push that super far, maybe all this other stuff emerged. But within reason, I don't think that will do something that I view as critical to a general intelligence," Altman said.

He used physics to illustrate his point: "Let's use the word superintelligence now. If superintelligence can't discover novel physics, I don't think it's a superintelligence. Training on the data of what you know, teaching to clone the behavior of humans and human text, I don't think that's going to get there. So there's this question that has been debated in the field for a long time: what do we have to do in addition to a language model to make a system that can go discover new physics? And that'll be our next quest."

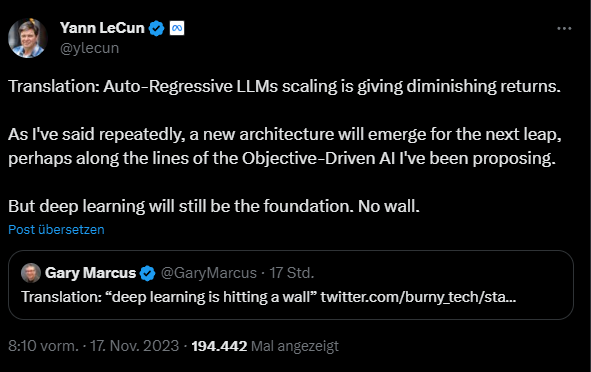

Deep learning critic Gary Marcus interpreted Altman's statement as "Deep learning is hitting a wall". According to Marcus, networks can sometimes generalize, but never reliably. Meta's AI chief Yann LeCun, on the other hand, interpreted it as "Auto-Regressive LLMs scaling is giving diminishing returns". He still sees deep learning as the foundation, albeit with different architectures and training objectives, such as JEPA.

Altman: "Safety in theory is easy"

When asked about the role of open source, Altman expressed support for open source models in principle but warned of the potential risks of making every model openly available without thorough testing and a solid understanding of its behavior.

"I think open source is good and important," Altman said in response to a question about Andrew Ng's recent interview on AI regulation. "But I also believe that precaution is really good. I respectfully disagree that we should just instantly open source every model we train."

The issue of safety also came up in Altman's presentation and in the questions. He reiterated the importance of developing AI safely and in line with human values and explained OpenAI's approach, which relies on testing, external experts, safety monitoring systems and tools, and best practices.

"Safety in theory is easy. The hard thing is to make a system that is really useful to hundreds of millions of people and is safe," Altman said. "You can't do this work only in theory; you have to do it in contact with reality."

He also spoke about the importance of global cooperation in regulating AI, suggesting that a global regulator, similar to the authorities that oversee nuclear technology, may be needed in the future.