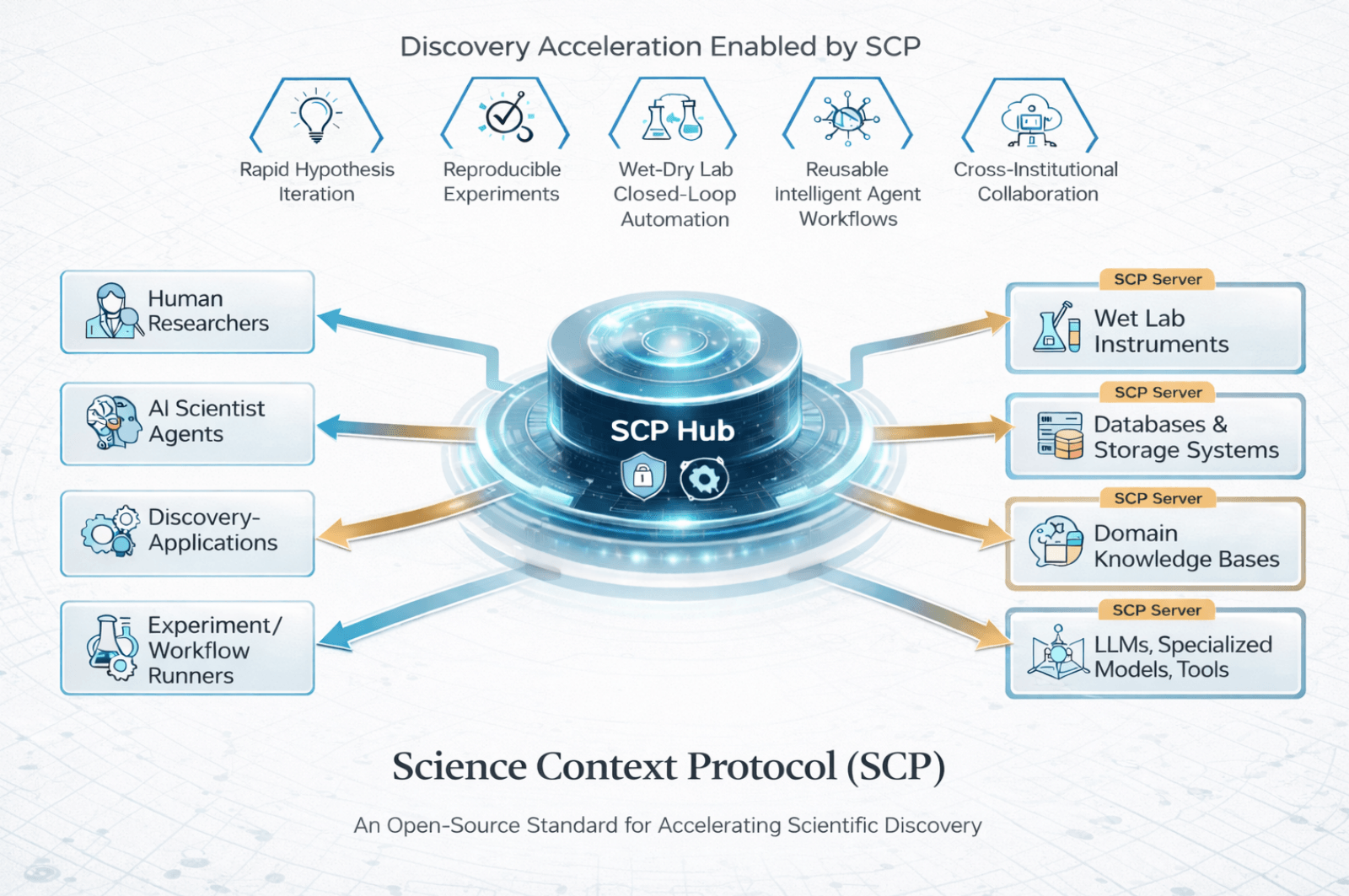

Science Context Protocol aims to let AI agents collaborate across labs and institutions worldwide

Anthropic's MCP has become the industry standard for connecting AI models to data sources. Now researchers from the Shanghai Artificial Intelligence Laboratory want to bring the same approach to scientific work.

Current AI systems for research—like A-Lab, ChemCrow, and Coscientist—typically operate in isolation, locked into specific workflows that don't play well across institutional boundaries. The Science Context Protocol (SCP) is designed to fix that. A team from the Shanghai Artificial Intelligence Laboratory developed this open-source standard to enable "a global web of autonomous scientific agents." The core idea: a shared protocol layer that lets AI agents, researchers, and lab equipment work together securely and traceably.

The Science Context Protocol builds on Anthropic's Model Context Protocol (MCP), released in November 2024 and now the go-to standard for connecting AI models with external data sources. MCP works well for general tool interactions, but the researchers say it's missing critical features for scientific workflows: structured representation of complete experiment protocols, support for high-throughput experiments with many parallel runs, and coordination of multiple specialized AI agents.

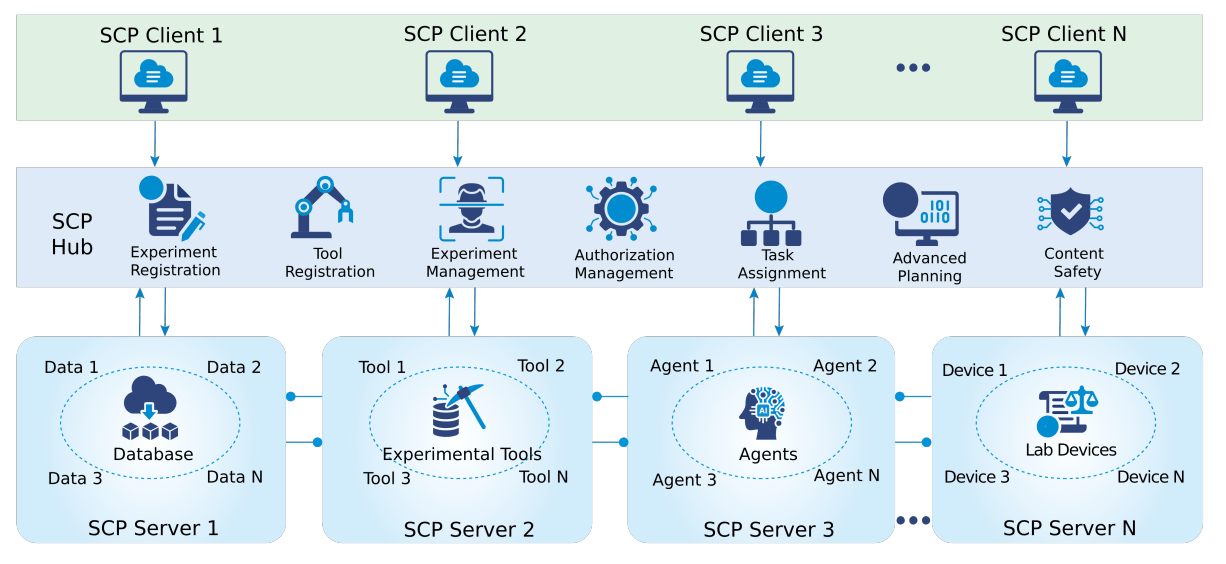

SCP expands on MCP in four key ways: it adds richer experiment metadata, replaces peer-to-peer communication with a centralized hub, provides intelligent workflow orchestration through an experiment flow API, and enables lab device integration with standardized drivers.
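To make the idea of standardized drivers more concrete, here is a minimal Python sketch. It assumes a driver is simply a common interface that every instrument implements. The class names `LabDeviceDriver` and `SimulatedPipette` and the `execute` method are hypothetical, not taken from the SCP specification, and the pipette exists only in software.

```python
from abc import ABC, abstractmethod
from typing import Any


class LabDeviceDriver(ABC):
    """Hypothetical uniform interface an SCP server might expose for any instrument."""

    @abstractmethod
    def capabilities(self) -> list[str]:
        """Commands this device accepts."""

    @abstractmethod
    def execute(self, command: str, **params: Any) -> dict[str, Any]:
        """Run one command and return a structured result record."""


class SimulatedPipette(LabDeviceDriver):
    """Software stand-in for a liquid-handling robot; no real hardware is involved."""

    def __init__(self) -> None:
        self.held_ul = 0.0  # liquid currently held in the tip, in microliters

    def capabilities(self) -> list[str]:
        return ["aspirate", "dispense"]

    def execute(self, command: str, **params: Any) -> dict[str, Any]:
        if command not in self.capabilities():
            raise ValueError(f"unsupported command: {command}")
        volume = float(params["volume_ul"])
        if command == "aspirate":
            self.held_ul += volume
        else:
            if volume > self.held_ul:
                raise ValueError("cannot dispense more than the tip holds")
            self.held_ul -= volume
        return {"command": command, "volume_ul": volume, "held_ul": self.held_ul}


pipette = SimulatedPipette()
pipette.execute("aspirate", volume_ul=50)
print(pipette.execute("dispense", volume_ul=20))
# → {'command': 'dispense', 'volume_ul': 20.0, 'held_ul': 30.0}
```

Because agents only ever call `capabilities` and `execute`, a real robot driver could replace the simulated one without changing the code that plans experiments.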

The team describes SCP as "essential infrastructure for scalable, multi-institution, agent-driven science." The specification and a reference implementation are available as open source on GitHub.

AI-powered research

SCP rests on two foundations. The first is standardized resource integration: the protocol defines a universal specification for describing and accessing scientific resources, everything from software tools and AI models to databases and physical lab instruments. This lets AI agents discover and combine capabilities across platforms and institutions.
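A uniform resource description could look roughly like the following sketch. The `ResourceDescriptor` fields, the `ResourceKind` categories and the `discover` helper are illustrative assumptions, not the schema SCP actually defines. The idea is that once tools, models, databases and instruments share one description format, an agent can search across institutions with a single query.

```python
from dataclasses import dataclass, field
from enum import Enum


class ResourceKind(Enum):
    TOOL = "tool"
    MODEL = "model"
    DATABASE = "database"
    INSTRUMENT = "instrument"


@dataclass(frozen=True)
class ResourceDescriptor:
    """Hypothetical uniform description of one scientific resource."""
    resource_id: str
    kind: ResourceKind
    institution: str
    capabilities: frozenset[str] = field(default_factory=frozenset)


def discover(resources: list[ResourceDescriptor], capability: str) -> list[ResourceDescriptor]:
    """Return every resource, from any institution, that offers the requested capability."""
    return [r for r in resources if capability in r.capabilities]


catalog = [
    ResourceDescriptor("fold-1", ResourceKind.MODEL, "Lab A", frozenset({"protein_structure_prediction"})),
    ResourceDescriptor("dock-1", ResourceKind.TOOL, "Lab B", frozenset({"molecular_docking"})),
    ResourceDescriptor("robot-1", ResourceKind.INSTRUMENT, "Lab C", frozenset({"pipetting"})),
]
print([r.resource_id for r in discover(catalog, "molecular_docking")])  # → ['dock-1']
```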

The second is orchestrated experiment lifecycle management. A secure architecture with a central SCP hub and distributed SCP servers handles the entire experiment lifecycle: registration, planning, execution, monitoring, and archiving. The system uses fine-grained authentication and traceable workflows covering both computational and physical lab work.
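The lifecycle stages can be pictured as a simple state machine with an audit trail. This sketch assumes the five stages run strictly in sequence and that permissions are granted per role and per stage. The `Experiment` class, the role names and the `PERMISSIONS` table are hypothetical simplifications, not the hub's actual access model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Stage(Enum):
    REGISTERED = 1
    PLANNED = 2
    EXECUTING = 3
    MONITORING = 4
    ARCHIVED = 5


# Hypothetical access rules: which roles may move an experiment INTO each stage.
PERMISSIONS = {
    Stage.PLANNED: {"researcher", "agent"},
    Stage.EXECUTING: {"researcher"},
    Stage.MONITORING: {"hub"},
    Stage.ARCHIVED: {"hub"},
}


@dataclass
class Experiment:
    experiment_id: str
    stage: Stage = Stage.REGISTERED
    audit_log: list = field(default_factory=list)

    def advance(self, actor: str, role: str) -> Stage:
        """Move to the next stage if the role is allowed; record every transition."""
        if self.stage is Stage.ARCHIVED:
            raise ValueError("archived experiments cannot change")
        target = Stage(self.stage.value + 1)
        if role not in PERMISSIONS[target]:
            raise PermissionError(f"{role!r} may not move {self.experiment_id} to {target.name}")
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "from": self.stage.name,
            "to": target.name,
        })
        self.stage = target
        return target


exp = Experiment("exp-001")
exp.advance("alice", "researcher")  # REGISTERED -> PLANNED
exp.advance("alice", "researcher")  # PLANNED -> EXECUTING
exp.advance("scp-hub", "hub")       # EXECUTING -> MONITORING
exp.advance("scp-hub", "hub")       # MONITORING -> ARCHIVED
print(exp.stage.name, len(exp.audit_log))  # → ARCHIVED 4
```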

The SCP hub sits at the center of the architecture—what the researchers call the "brain" of the system. It serves as a global registry for all available tools, datasets, agents, and instruments. When a researcher or AI agent submits a research goal, the hub uses AI models to analyze the request and break it into specific tasks.
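Breaking a goal into tasks yields a dependency graph that has to be executed in a valid order. In the sketch below, the task list is hard-coded where SCP would use an AI model, and the capability names and resource IDs in `REGISTRY` are invented for illustration. Python's standard `graphlib.TopologicalSorter` (Python 3.9+) orders the tasks so that each one runs only after its prerequisites.

```python
from graphlib import TopologicalSorter

# Hypothetical result of the hub's goal analysis: each task names the capability it
# needs and the tasks it depends on. SCP would generate this with an AI model.
TASKS = {
    "fetch_structure": {"capability": "structure_database", "after": []},
    "predict_properties": {"capability": "property_model", "after": []},
    "dock": {"capability": "molecular_docking", "after": ["fetch_structure", "predict_properties"]},
    "synthesize": {"capability": "lab_robot", "after": ["dock"]},
}

# Hypothetical registry entries: capability -> registered resource that offers it.
REGISTRY = {
    "structure_database": "db.lab-a",
    "property_model": "model.lab-b",
    "molecular_docking": "tool.lab-b",
    "lab_robot": "robot.lab-c",
}


def plan(tasks: dict, registry: dict[str, str]) -> list[tuple[str, str]]:
    """Order tasks so dependencies come first, then bind each task to a resource."""
    graph = {name: spec["after"] for name, spec in tasks.items()}  # node -> predecessors
    order = list(TopologicalSorter(graph).static_order())
    missing = [t for t in order if tasks[t]["capability"] not in registry]
    if missing:
        raise LookupError(f"no registered resource for: {missing}")
    return [(t, registry[tasks[t]["capability"]]) for t in order]


for task, resource in plan(TASKS, REGISTRY):
    print(task, "->", resource)
```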

The system generates multiple executable plans and presents the most promising options along with rationales covering dependency structure, expected duration, experimental risk, and cost estimates. Selected workflows get stored in structured JSON format that serves as a contract between all participants and ensures reproducibility.
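The article does not say how the hub weighs duration, risk and cost against each other. The following sketch assumes a simple weighted sum (the `WEIGHTS` values are arbitrary) and shows how the selected plan might be frozen into canonical JSON that all participants share. The `CandidatePlan` fields and the example plans are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CandidatePlan:
    name: str
    steps: list[str]
    duration_h: float  # expected wall-clock duration in hours
    risk: float        # 0 (safe) to 1 (likely to fail)
    cost_usd: float
    rationale: str


# Hypothetical weights; lower total score means a more attractive plan.
WEIGHTS = {"duration_h": 0.01, "risk": 1.0, "cost_usd": 0.001}


def score(plan: CandidatePlan) -> float:
    return (WEIGHTS["duration_h"] * plan.duration_h
            + WEIGHTS["risk"] * plan.risk
            + WEIGHTS["cost_usd"] * plan.cost_usd)


def top_plans(plans: list[CandidatePlan], k: int = 2) -> list[CandidatePlan]:
    """Return the k most promising plans, best first."""
    return sorted(plans, key=score)[:k]


plans = [
    CandidatePlan("all-simulation", ["screen", "dock"], 6, 0.1, 50, "No wet-lab steps."),
    CandidatePlan("sim-then-lab", ["screen", "dock", "assay"], 48, 0.3, 900, "Validates hits experimentally."),
    CandidatePlan("lab-only", ["assay"], 120, 0.5, 3000, "Skips computational filtering."),
]
best = top_plans(plans)[0]
# Canonical JSON: the same plan always serializes to the same text.
contract = json.dumps(asdict(best), sort_keys=True, indent=2)
print(contract)
```

Serializing with `sort_keys=True` makes the output identical for identical plans, which helps if participants want to hash or sign the contract.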

During execution, the hub monitors progress, validates results, and can issue warnings or trigger fallback strategies when anomalies occur. This matters most for multi-stage workflows that combine simulations with physical lab experiments, according to the researchers.
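A fallback strategy of this kind can be sketched as "run, validate, otherwise switch". In this hypothetical example, a robot reports an impossible yield above 100 percent, validation rejects it, and a computational estimate is used instead. The function names and values are made up; SCP's actual anomaly handling is not described at this level of detail.

```python
import warnings
from typing import Callable


def run_step(primary: Callable[[], float],
             fallback: Callable[[], float],
             validate: Callable[[float], bool]) -> tuple[float, str]:
    """Run a step; on an exception or an invalid result, warn and use the fallback."""
    try:
        result = primary()
    except Exception as exc:  # e.g. an instrument or network error
        warnings.warn(f"primary step failed ({exc}); using fallback")
    else:
        if validate(result):
            return result, "primary"
        warnings.warn(f"result {result!r} failed validation; using fallback")
    result = fallback()
    if not validate(result):
        raise RuntimeError("fallback result also failed validation")
    return result, "fallback"


def robot_yield() -> float:
    return 140.0  # simulated anomaly: a yield above 100 percent is impossible


def simulated_yield() -> float:
    return 72.5  # hypothetical estimate from a computational model


def plausible(y: float) -> bool:
    return 0.0 <= y <= 100.0


value, source = run_step(robot_yield, simulated_yield, plausible)
print(value, source)  # → 72.5 fallback
```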

On top of SCP, the team built the Internal Discovery Platform, which currently provides over 1,600 interoperable tools. Biology makes up the largest share at 45.9 percent, followed by physics at 21.1 percent and chemistry at 11.6 percent. Mechanics and materials science, math, and computer science split the remaining 21.4 percent.

By function, computational tools lead at 39.1 percent, with databases at 33.8 percent. Model services account for 13.3 percent, lab operations 7.7 percent, and literature searches 6.1 percent. Tools range from protein structure predictions and molecule docking to automated pipetting instructions for lab robots.
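The reported shares can be checked with a little arithmetic. The sketch below assumes exactly 1,600 tools, so the per-category counts are rough lower-bound estimates, not published figures.

```python
# Shares reported for the Internal Discovery Platform, in percent.
BY_DOMAIN = {"biology": 45.9, "physics": 21.1, "chemistry": 11.6}
BY_FUNCTION = {
    "computational tools": 39.1,
    "databases": 33.8,
    "model services": 13.3,
    "lab operations": 7.7,
    "literature search": 6.1,
}
TOTAL_TOOLS = 1600  # assumption: the article says "over 1,600"

# Mechanics/materials science, math and computer science share what remains.
remaining = round(100 - sum(BY_DOMAIN.values()), 1)
print(f"other domains: {remaining} %")  # → other domains: 21.4 %

# The functional categories should cover the whole catalog.
print(f"function shares total: {round(sum(BY_FUNCTION.values()), 1)} %")

for name, pct in BY_FUNCTION.items():
    print(f"{name}: ~{round(TOTAL_TOOLS * pct / 100)} tools")
```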

Use cases span protocol extraction to drug screening

The researchers outline several case studies showing potential applications. In one example, a scientist uploads a PDF containing a lab protocol. The system automatically extracts the experimental steps, converts them to a machine-readable format, and runs the experiment on a robotic platform.
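For a very regular protocol, the extraction step can be approximated with pattern matching. This is a deliberately simplified stand-in: the researchers describe AI-based extraction, while the sketch below uses hypothetical regular expressions, a made-up three-step protocol and invented field names to show what a machine-readable step list might look like.

```python
import json
import re

# Hypothetical protocol text, as it might look after text extraction from a PDF.
PROTOCOL = """\
1. Add 200 uL buffer to well A1.
2. Incubate at 37 C for 30 min.
3. Transfer 50 uL from A1 to B1.
"""

# Toy patterns standing in for model-based extraction.
PATTERNS = {
    "add": re.compile(r"Add (?P<volume_ul>\d+) uL (?P<reagent>\w+) to well (?P<well>\w+)"),
    "incubate": re.compile(r"Incubate at (?P<temp_c>\d+) C for (?P<minutes>\d+) min"),
    "transfer": re.compile(r"Transfer (?P<volume_ul>\d+) uL from (?P<source>\w+) to (?P<target>\w+)"),
}


def extract_steps(text: str) -> list[dict]:
    """Turn numbered plain-text instructions into structured step records."""
    steps = []
    for line in text.strip().splitlines():
        number, _, instruction = line.partition(". ")
        for action, pattern in PATTERNS.items():
            match = pattern.search(instruction)
            if match:
                # Convert purely numeric fields to integers.
                params = {k: int(v) if v.isdigit() else v for k, v in match.groupdict().items()}
                steps.append({"step": int(number), "action": action, **params})
                break
        else:
            raise ValueError(f"unrecognized instruction: {line!r}")
    return steps


print(json.dumps(extract_steps(PROTOCOL), indent=2))
```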

Another scenario demonstrates AI-controlled drug screening: starting with 50 molecules, the system calculates drug-likeness scores and toxicity values, filters by defined criteria, prepares a protein structure for docking, and identifies two promising candidates. The entire process runs as an orchestrated workflow where various SCP servers collaborate on database queries, calculations, and structural analyses. Whether these scenarios work in practice remains to be seen.
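At its core, the screening funnel is filter-then-rank. In the sketch below, the thresholds (drug-likeness at least 0.6, toxicity at most 0.3), the score scales and the random placeholder docking function are all assumptions, and the 50 molecules are synthetic. Docking only runs on molecules that survive the filters, mirroring the order of steps described above.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Molecule:
    name: str
    drug_likeness: float  # assumed 0..1 scale, higher = more drug-like
    toxicity: float       # assumed 0..1 predicted risk, higher = more toxic


def screen(molecules: list[Molecule],
           dock: Callable[[Molecule], float],
           min_drug_likeness: float = 0.6,
           max_toxicity: float = 0.3,
           top_k: int = 2) -> list[tuple[Molecule, float]]:
    """Filter by drug-likeness and toxicity, dock the survivors, keep the best binders."""
    survivors = [m for m in molecules
                 if m.drug_likeness >= min_drug_likeness and m.toxicity <= max_toxicity]
    # Docking is the expensive step, so it only runs on molecules that passed the filters.
    scored = [(m, dock(m)) for m in survivors]
    scored.sort(key=lambda pair: pair[1])  # more negative docking score = stronger binding
    return scored[:top_k]


rng = random.Random(42)
library = [Molecule(f"mol-{i:02d}", rng.random(), rng.random()) for i in range(50)]


def placeholder_dock(m: Molecule) -> float:
    return rng.uniform(-12.0, -4.0)  # stand-in for a real docking engine, in kcal/mol


for molecule, score in screen(library, placeholder_dock):
    print(molecule.name, round(score, 2))
```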

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
