Stable Cascade looks like a more efficient and higher quality successor to Stable Diffusion

Stable Cascade is a new text-to-image model from Stability AI, now available as a Research Preview.

Stable Diffusion has been a massive success for Stability AI and its partners: The open-source model has been downloaded millions of times and is the basis for countless AI image apps.

With Stable Cascade, Stability AI is now releasing a research preview of a possible successor that is supposed to offer higher quality, more flexibility, greater efficiency, and easier fine-tuning for specific styles.

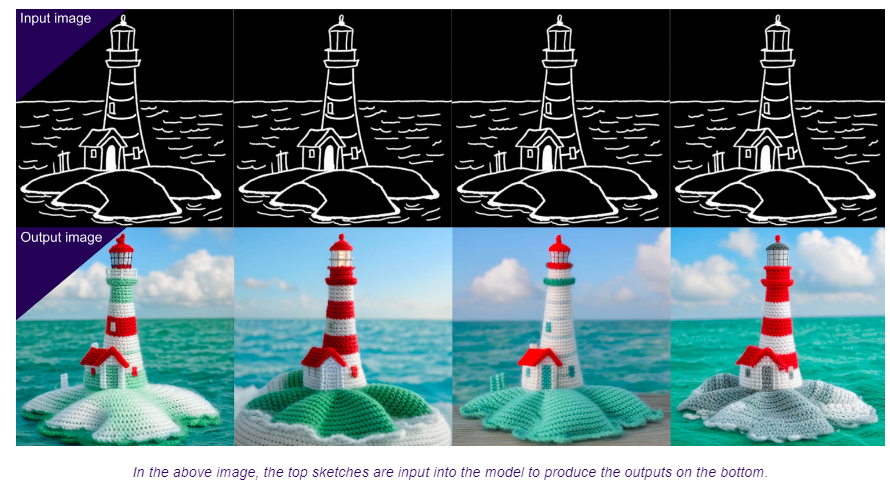

Stable Cascade supports image variations, image-to-image generation, inpainting/outpainting, Canny edge-guided generation, and 2x super-resolution. The rendering of text within images also seems to be much improved.

Users can generate variations of a given image, create new images based on existing images, fill masked parts of an image, generate images that follow the edges of an input image, and scale images to higher resolutions.

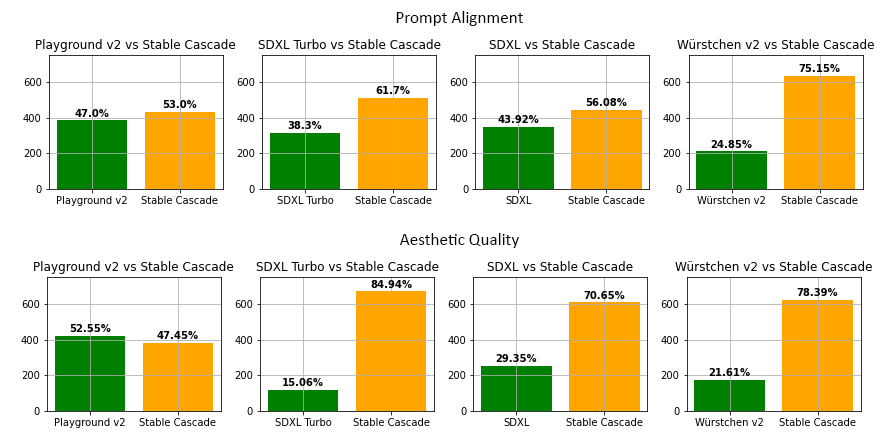

According to Stability AI, Stable Cascade outperforms its predecessors in most model comparisons in terms of prompt following and aesthetic quality. Playground v2, a free-for-commercial-use open-source model released in December 2023, is slightly ahead in aesthetic quality and slightly behind in prompt alignment, according to Stability AI measurements.

The research preview of Stable Cascade is for non-commercial use only. The announcement does not make clear whether, or in what form, the final model will be available as open source. Stability AI also offers its models via API for commercial use, but Stable Cascade is not yet part of that offering.

Users can experiment with Stable Cascade by accessing the checkpoints, inference scripts, fine-tuning scripts, ControlNet and LoRA training scripts available on the Stability GitHub page. In this way, the model can be adapted to your needs.

"Würstchen" makes image generators work fast

Stable Cascade is based on the "Würstchen" (German for "little sausage") architecture, first published as a research paper in June 2023. It is a three-stage, diffusion-based text-to-image synthesis approach: Stage C learns a highly compressed but detailed semantic "image recipe", Stage B runs a diffusion process guided by that recipe, and a final Stage A decodes the result into the finished image.

According to Stability AI, this compact representation provides much more detailed guidance compared to latent language representations, reducing computational effort while improving image quality.

Würstchen requires fewer training resources (24,602 A100 GPU hours compared to 200,000 GPU hours for Stable Diffusion 2.1, or roughly one-eighth of the compute) and less training data.

Stability AI claims that Stable Cascade offers significantly faster generation times despite having more parameters than its current top model, Stable Diffusion XL. Stable Cascade takes about ten seconds for 30 steps to generate the finished image, while SDXL takes 50 steps and 22 seconds. SDXL Turbo is even faster, taking just one step and half a second, but at the expense of image quality.
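To put these figures side by side, here is a minimal Python sketch that works only with the step counts and timings quoted above. The `Benchmark` class and `speedup` helper are hypothetical names made up for this illustration, and the per-step value is simply total time divided by step count, so it also absorbs any fixed overhead such as text encoding or final decoding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Benchmark:
    """One row of the timing comparison quoted in the article."""
    name: str
    steps: int
    seconds: float  # approximate total generation time

    @property
    def seconds_per_step(self) -> float:
        # Total time divided by steps; includes any fixed overhead.
        return self.seconds / self.steps


def speedup(baseline: Benchmark, candidate: Benchmark) -> float:
    """How many times faster `candidate` is than `baseline` end to end."""
    return baseline.seconds / candidate.seconds


# Figures as reported by Stability AI (rounded in the article).
cascade = Benchmark("Stable Cascade", steps=30, seconds=10.0)
sdxl = Benchmark("SDXL", steps=50, seconds=22.0)
turbo = Benchmark("SDXL Turbo", steps=1, seconds=0.5)

for b in (cascade, sdxl, turbo):
    print(f"{b.name:<15} {b.steps:>3} steps  {b.seconds:>5.1f} s  "
          f"{b.seconds_per_step:.2f} s/step")

print(f"Stable Cascade vs. SDXL: {speedup(sdxl, cascade):.1f}x faster")
```

On these numbers, Stable Cascade finishes about 2.2 times faster than SDXL. It needs fewer steps, and each step is also cheaper on average (about 0.33 versus 0.44 seconds), which fits the claim that the compressed representation reduces computational effort.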
