StableDrag's simple point-and-click image editing makes turning Mona Lisa's head easy

Many AI image generators already offer inpainting, a powerful tool for modifying image content with text prompts. Point-based editing makes adjustments even easier.

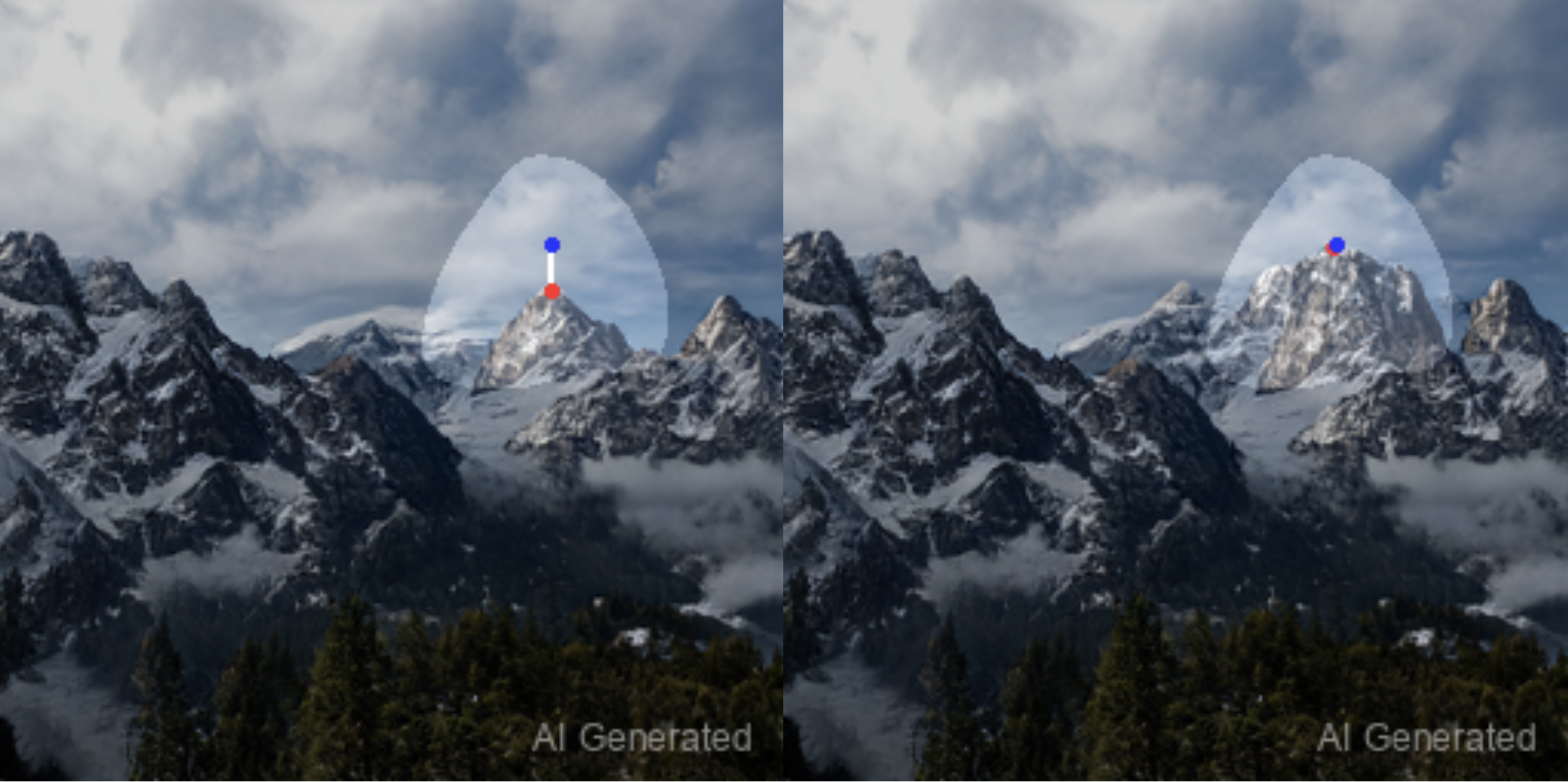

Researchers from Nanjing University and Tencent have developed a new AI-based image editing method called StableDrag that allows elements to be easily moved to new positions while maintaining the correct perspective, according to their paper.

The method builds on recent advances in AI image editing such as FreeDrag, DragDiffusion, and DragGAN, and according to the researchers it delivers significantly better results in benchmarks.

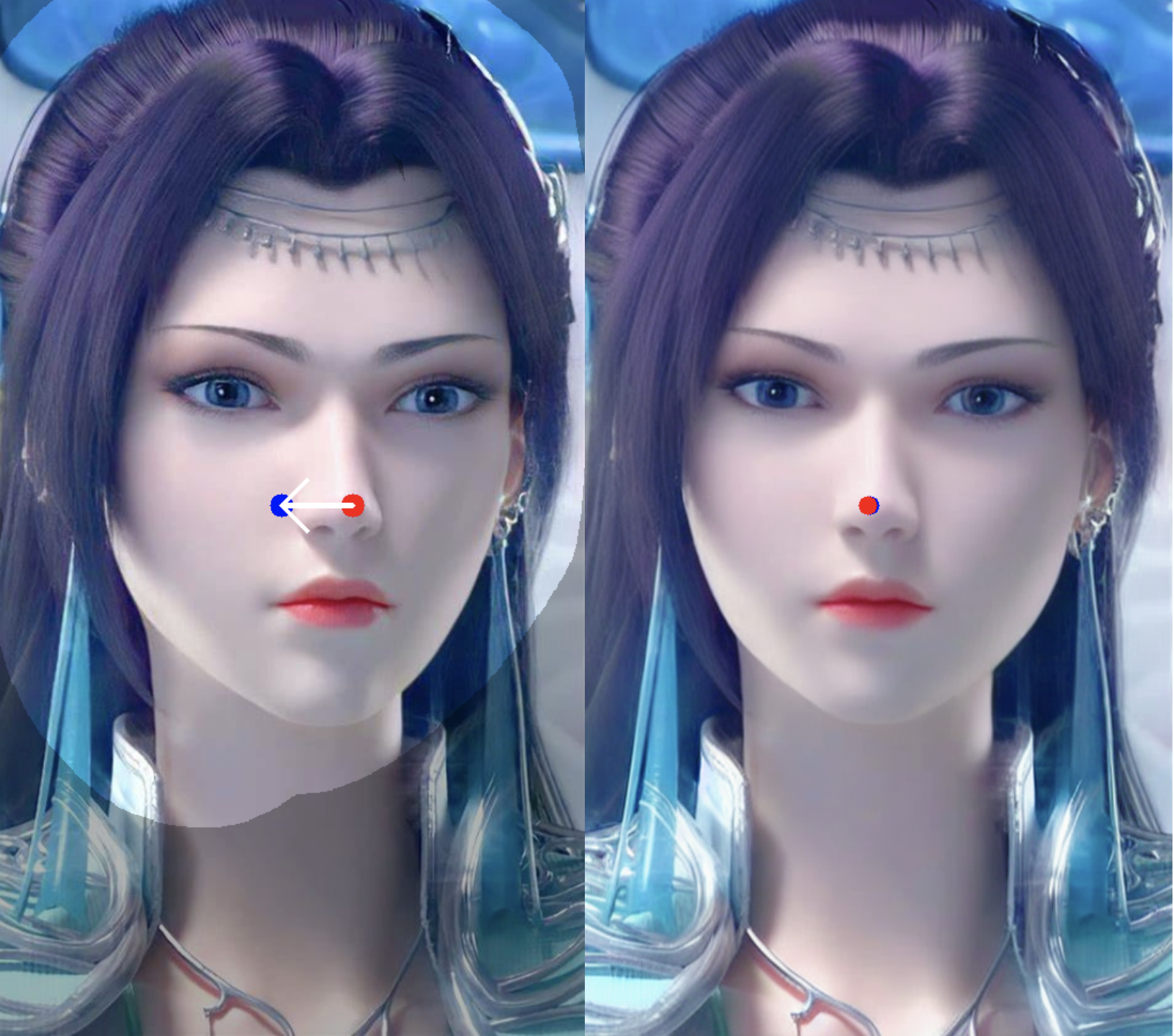

One example is changing the viewing direction of the "Mona Lisa" by moving her nose slightly to the right. The left panel shows the input image with the source point (red) and destination point (blue), the middle panel shows the result from DragDiffusion, and the right panel shows the result from StableDrag-Diff.

The tool works well on photos, illustrations, and AI-generated images, with subjects ranging from human faces to cars, landscapes, and animals.

According to the researchers, the key innovations are a point-tracking method that precisely locates the moving points after each editing step and a confidence-based strategy that maintains high image quality throughout. A confidence value rates the editing quality at each step; if it drops too low, the method falls back on the original image's features, preserving the source material without limiting editing options.
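The article does not spell out the algorithm, and the code had not been released at the time of writing. As a rough illustration only, the toy sketch below shows the general idea of tracking a point by feature matching and gating supervision on a confidence score. It assumes features are stored as an H×W×C NumPy array, that tracking is a cosine-similarity search in a small window, and that the best match score serves as the confidence; the function names, window radius, and 0.8 threshold are hypothetical choices, not StableDrag's actual implementation.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity; the epsilon avoids division by zero for all-zero features."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-8))


def track_point(features, template, prev_point, radius=2):
    """Hypothetical point tracking: search a (2*radius+1)^2 window around
    prev_point for the feature vector that best matches the template.
    Returns the new (y, x) location and its similarity score (used as confidence)."""
    h, w, _ = features.shape
    y0, x0 = prev_point
    best_point, best_score = prev_point, float("-inf")
    for y in range(max(0, y0 - radius), min(h, y0 + radius + 1)):
        for x in range(max(0, x0 - radius), min(w, x0 + radius + 1)):
            score = cosine(features[y, x], template)
            if score > best_score:
                best_point, best_score = (y, x), score
    return best_point, best_score


def supervision_feature(features, template, point, confidence, threshold=0.8):
    """Hypothetical confidence gate: while tracking is reliable, supervise with the
    current feature; otherwise fall back on the original image's feature (template)."""
    if confidence >= threshold:
        return features[point]
    return template


# Toy example: the original feature at the tracked point is a unit vector.
template = np.array([1.0, 0.0, 0.0, 0.0])
features = np.zeros((8, 8, 4))
features[3, 4] = template
point, conf = track_point(features, template, (2, 3))
target = supervision_feature(features, template, point, conf)
```

Here the match is exact, so the confidence is close to 1 and the current feature is used. If the feature at the tracked point had drifted (say to `[0.5, 1.0, 0, 0]`), the score would fall to about 0.45 and the gate would return the original template instead, which mirrors the "revert to original features" behavior described above.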

Text-to-image generation has advanced rapidly and now produces highly realistic photos, but image manipulation still lags behind. Some AI models offer inpainting to alter selected areas with text input, but StableDrag's point-based editing promises more precision. The researchers say they will release the code as open source soon.

Apple is taking a different approach to image manipulation with MGIE, which uses text prompts to add, remove, or change objects without selecting specific regions.
