Teachers take note: This free tool aims to detect AI content

A new tool from the anti-plagiarism platform Crossplag can supposedly identify AI texts.

AI text tools like ChatGPT are built to generate generic texts on topics that have already been described in detail elsewhere. Put another way: ChatGPT and similar tools are essay automation tools that pose new challenges for the education system.

The age of "aigiarism"

Crossplag is a platform that specializes in detecting academic plagiarism. In AI text generators, Crossplag has found a new end boss: micro-plagiarism from millions of texts compiled into a new text on par with original content. It may even be original content, but that's open to debate.

In light of the new threat, Crossplag proclaims the age of AI plagiarism, calling it "aigiarism." AI text may be the Achilles heel of academic integrity, the Crossplag team speculates. The term aigiarism was previously mentioned by Mike Waters on Twitter.

ChatGPT started having immediate negative implications in the academic world as it proved successful in helping students generate content without doing any work.

Crossplag

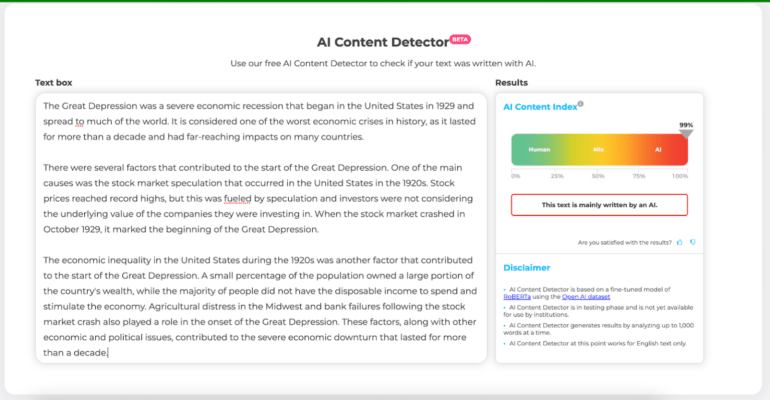

As a countermeasure, Crossplag introduces a free AI content detector: It's designed to detect whether a text came from a machine or a human - or whether both worked on it.

Even with extensive tweaks to an AI text, the tool can still "find the paths of AI-generated content," the platform writes. The beta version works only for English texts and has not yet been released for institutional use. It can analyze up to 1,000 words at a time.
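Anyone who wants to check a longer text against the 1,000-word limit would have to split it up first. The following is a minimal sketch of that step, not part of Crossplag's tool: the function name `split_into_chunks` and the simple whitespace-based word count are assumptions, and Crossplag may count words differently.

```python
from typing import List


def split_into_chunks(text: str, max_words: int = 1000) -> List[str]:
    """Split text into pieces of at most max_words whitespace-separated words.

    Hypothetical helper: the 1,000-word default mirrors the limit stated for
    the beta, but the way words are counted here is an assumption.
    """
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    # Step through the word list in strides of max_words and rejoin each slice.
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]
```

Each returned chunk could then be submitted on its own. Note that splitting by word count can cut sentences in half, which may affect how a detector scores the chunk boundaries.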

Using AI against AI

For AI content detection, Crossplag uses a RoBERTa model fine-tuned with a GPT-2 dataset. Like GPT-3.5, the model behind ChatGPT, RoBERTa is a transformer-based language model.

The AI system is said to identify "human patterns and irregularities" in texts. Other clear indications are repetitive language and inconsistencies in tone. I have requested data on the reliability of the system and will update the article when I get it.
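To make the "repetitive language" signal more concrete, here is a deliberately crude sketch that measures how many three-word sequences in a text occur more than once. This is not Crossplag's method, which relies on a fine-tuned RoBERTa model and has not been described in detail. The function name `repeated_ngram_ratio` and the choice of word trigrams are assumptions made purely for illustration.

```python
import re
from collections import Counter


def repeated_ngram_ratio(text: str, n: int = 3) -> float:
    """Return the share of word n-grams that appear more than once.

    Illustrative heuristic only: a high value hints at repetitive wording,
    but it says nothing reliable about whether a machine wrote the text.
    """
    # Lowercase the text and keep only simple word tokens.
    words = re.findall(r"[a-z']+", text.lower())
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    # Count every occurrence of an n-gram that shows up at least twice.
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)


sample = "the cat sat on the mat. the cat sat on the rug."
print(repeated_ngram_ratio(sample))  # 0.6: six of ten trigrams are repeats
```

A single number like this is far too blunt to separate human from machine writing. A trained classifier combines many such signals, which is also why reliability data for the actual system matters.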

In addition, the tool is supposed to benefit from its integration with the Crossplag platform, since AI generates text from existing sources "more often than not." The platform does not explain exactly how this works.

The question of transparency in AI-generated media is likely to be with us for a while, despite the AI Content Detector. It generally arises with generative AI. Besides text, there is a plagiarism discussion for images and soon perhaps for videos or 3D models.

OpenAI, the company behind ChatGPT, is experimenting with a watermark integrated into AI text to allow unique identification. The company plans to present the detection system in a scientific paper next year. China will ban AI-generated media without labeling from early 2023.
