Tencent's Hunyuan-GameCraft transforms single images into interactive gaming videos

Tencent has released Hunyuan-GameCraft, an AI system that generates interactive videos from individual images.

Unlike standard video generators that produce fixed video clips, GameCraft lets users steer the camera in real time using WASD or arrow keys, allowing free movement through the generated scenes. The system is built on Tencent's open-source text-to-video model, HunyuanVideo. Tencent says the system delivers especially smooth, consistent camera motion.

Video: Tencent

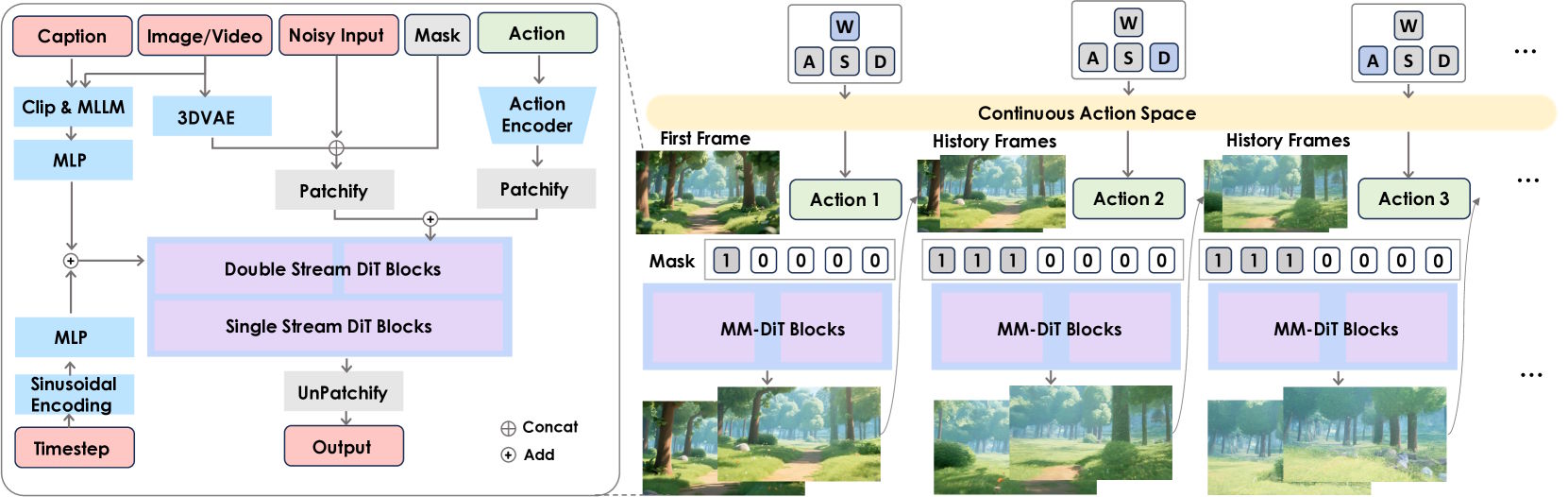

The framework supports three axes of translation (forward/backward, left/right, and up/down) plus two axes of rotation for looking around. Camera roll is left out because, according to Tencent, it is uncommon in games. An action encoder translates keyboard input into numerical values the video generator understands. The system also factors in speed, so movement can be adapted to how long a key is held.
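To make the idea of an action encoder concrete, here is a minimal sketch of how key presses could be turned into a five-value direction vector plus a speed scalar. Everything in it is an assumption for illustration: the key bindings (including the hypothetical `q`/`e` keys for up/down), the `CameraAction` type, `encode_keys`, and the rule that speed grows linearly with hold time up to `max_hold` seconds. Tencent's actual encoder is a learned network; this only shows the kind of input it receives.

```python
from dataclasses import dataclass

# Hypothetical bindings: each key nudges one of five degrees of freedom
# (three translations, two rotations; roll is deliberately absent).
KEY_TO_AXIS = {
    "w": ("forward", +1.0), "s": ("forward", -1.0),
    "d": ("right", +1.0), "a": ("right", -1.0),
    "e": ("up", +1.0), "q": ("up", -1.0),
    "right": ("yaw", +1.0), "left": ("yaw", -1.0),
    "up": ("pitch", +1.0), "down": ("pitch", -1.0),
}
AXES = ("forward", "right", "up", "yaw", "pitch")


@dataclass
class CameraAction:
    direction: tuple[float, ...]  # one value per axis in AXES, each in [-1, 1]
    speed: float                  # 0.0 (idle) to 1.0 (full speed)


def encode_keys(pressed: dict[str, float], max_hold: float = 1.0) -> CameraAction:
    """Map {key: seconds_held} to a direction vector and a speed scalar."""
    totals = dict.fromkeys(AXES, 0.0)
    longest = 0.0
    for key, held in pressed.items():
        if key not in KEY_TO_AXIS:
            continue  # ignore keys with no camera binding
        axis, sign = KEY_TO_AXIS[key]
        totals[axis] += sign
        longest = max(longest, held)
    # Opposing keys cancel out; clamp each axis to [-1, 1].
    direction = tuple(max(-1.0, min(1.0, totals[a])) for a in AXES)
    # Assumed rule: speed rises with the longest hold, saturating at max_hold.
    speed = min(longest / max_hold, 1.0) if any(direction) else 0.0
    return CameraAction(direction, speed)


print(encode_keys({"w": 0.5, "left": 0.2}))
```

Keeping direction and speed separate mirrors the article's description: the direction says where the camera goes, while the speed term lets the same key produce slower or faster motion depending on how long it is held.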

Hybrid training for long, consistent videos

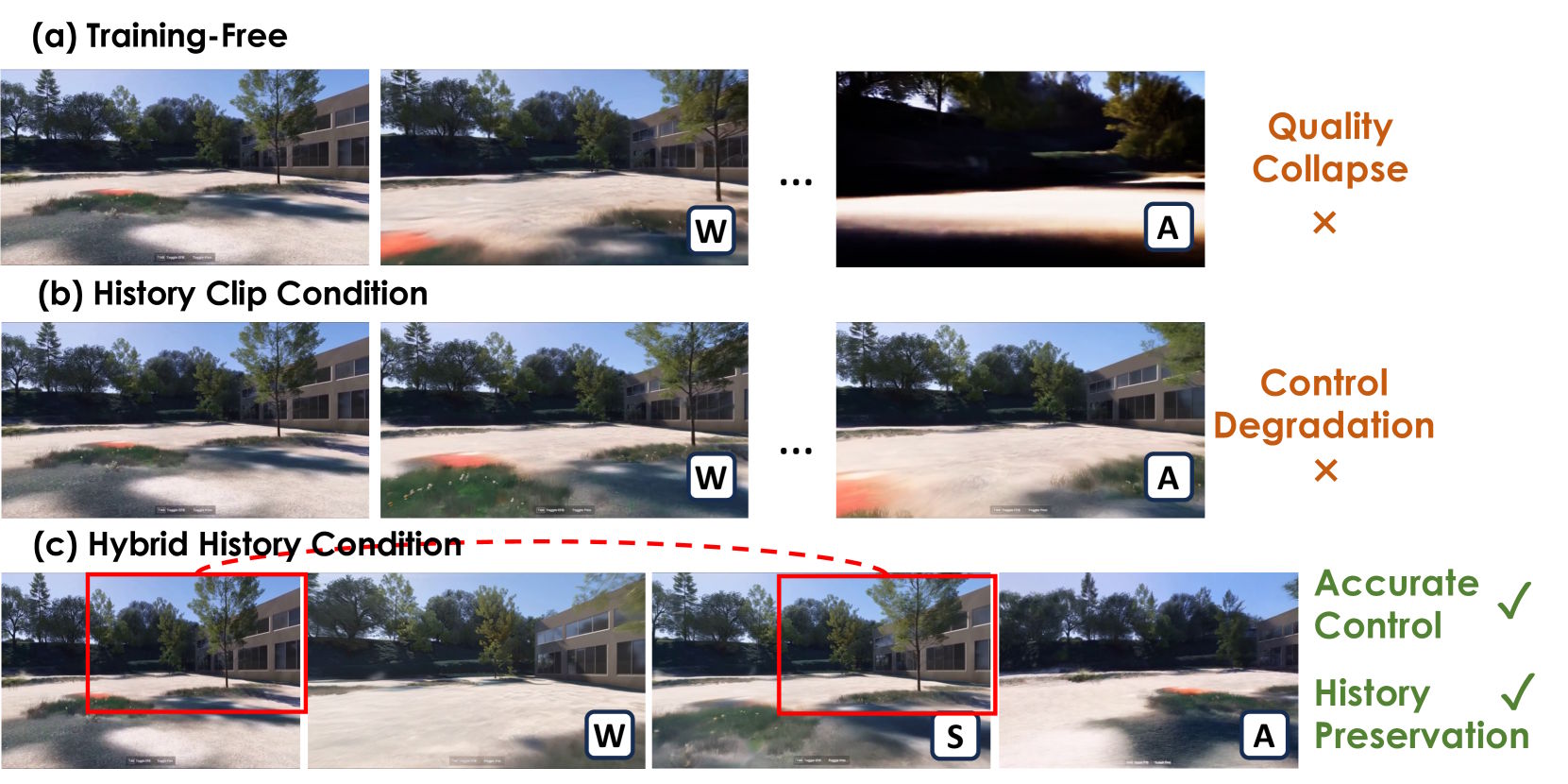

To keep video quality high over longer sequences, GameCraft uses a training technique called Hybrid History-Conditioned Training. Instead of generating a whole video at once, the model builds it segment by segment, conditioning each new segment on earlier ones. Each video is broken into chunks of roughly 1.3 seconds. A binary mask tells the system which parts of each frame already exist and which still need to be generated, helping the model stay both consistent and flexible.
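The segment-by-segment loop and the binary mask can be sketched as follows. This is a simplified illustration under several assumptions: the mask is applied per frame along the time axis (1 for existing history, 0 for frames to generate), `denoise_chunk` is a hypothetical stand-in for the video model, and the history window and the "hybrid" choice of history lengths in `sample_training_history` are made-up values, not Tencent's. Only the 33-frame segment length comes from the article.

```python
import random

CHUNK_FRAMES = 33  # segment length quoted in the article


def build_condition_mask(history_len: int, chunk_len: int = CHUNK_FRAMES) -> list[int]:
    """1 = frame already exists (history), 0 = frame still to be generated."""
    if history_len < 0 or chunk_len <= 0:
        raise ValueError("invalid lengths")
    return [1] * history_len + [0] * chunk_len


def sample_training_history(rng: random.Random, available: int) -> int:
    """Hypothetical hybrid schedule: during training, randomly condition on a
    single frame, one earlier chunk, or two earlier chunks (assumed values)."""
    options = [n for n in (1, CHUNK_FRAMES, 2 * CHUNK_FRAMES) if n <= available]
    return rng.choice(options) if options else available


def generate_video(first_frame, num_chunks, denoise_chunk, max_history=CHUNK_FRAMES):
    """Autoregressive rollout: every new chunk is conditioned on recent frames."""
    frames = [first_frame]
    for _ in range(num_chunks):
        history = frames[-max_history:]            # bounded history window
        mask = build_condition_mask(len(history))
        new_frames = denoise_chunk(history, mask)  # hypothetical model call
        if len(new_frames) != mask.count(0):
            raise RuntimeError("model must fill exactly the masked-out frames")
        frames.extend(new_frames)
    return frames


# Toy "model" that records how much history each chunk saw.
stub = lambda history, mask: [len(history)] * mask.count(0)
video = generate_video("frame0", num_chunks=2, denoise_chunk=stub)
print(len(video), video[1], video[34])
```

With the stub, the first chunk is conditioned on the single input image and the second on the previous 33 frames, which is the shift from image-to-video to video continuation that the mask lets one model handle.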

According to Tencent, training-free approaches often result in visible quality drops, while pure history conditioning hurts responsiveness to new input. The hybrid strategy combines both, producing videos that stay smooth and consistent while reacting instantly to user input, even during long sessions.

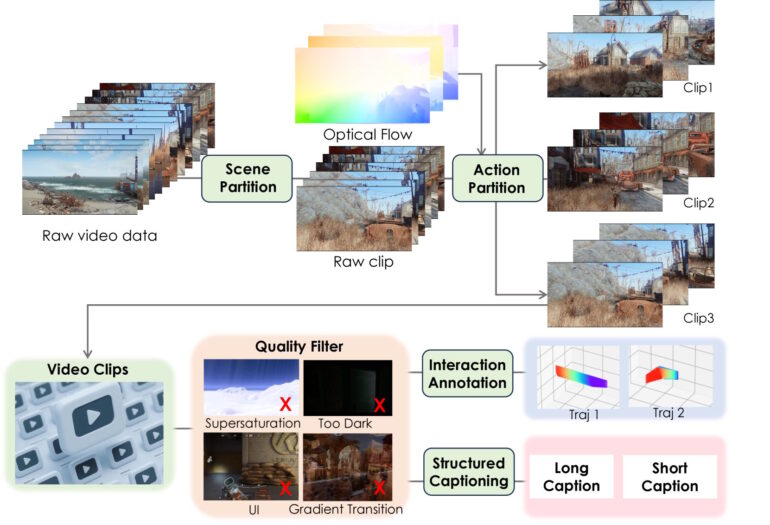

Training on more than a million gameplay videos

GameCraft was trained on over a million gameplay recordings from more than 100 AAA titles, including Assassin's Creed, Red Dead Redemption, and Cyberpunk 2077. Scenes and actions were automatically segmented, filtered for quality, annotated, and given structured descriptions.

Developers also created 3,000 motion sequences from digital 3D objects. Training ran in two phases across 192 Nvidia H20 GPUs for 50,000 iterations. In head-to-head tests with Matrix-Game, GameCraft cut interaction errors by 55 percent. It also delivered better image quality and more precise control than specialized camera control models like CameraCtrl, MotionCtrl, and WanX-Cam.

To make GameCraft practical, Tencent added a Phased Consistency Model (PCM) that speeds up video generation. Instead of running every step of a typical diffusion process, PCM covers the denoising trajectory in a few large jumps, each landing directly on a plausible result for that stage, boosting inference speed by 10 to 20 times.
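The sampling side of a phased approach can be illustrated with a toy. The sketch below splits the timestep range into equal phases and makes one model call per phase, jumping from each phase's start to its end. Assumptions: a flow-matching-style interpolation `x_s = (1 - s) * x0 + s * noise` with `s = t / T`, scalar "frames" instead of video latents, and a hypothetical `predict_x0` oracle standing in for the distilled network. It does not show how PCM is trained, only why it needs so few network evaluations.

```python
def phase_edges(num_train_steps: int, num_phases: int) -> list[int]:
    """Split the timestep range [num_train_steps, 0] into equal phases."""
    return [round(num_train_steps * (1 - k / num_phases)) for k in range(num_phases + 1)]


def phased_sample(x_T, predict_x0, num_train_steps=1000, num_phases=4):
    """Deterministic few-step sampler: one network call per phase."""
    x, calls = x_T, 0
    edges = phase_edges(num_train_steps, num_phases)
    for t_start, t_end in zip(edges, edges[1:]):
        x0_hat = predict_x0(x, t_start)  # stand-in for the distilled network
        calls += 1
        s, e = t_start / num_train_steps, t_end / num_train_steps
        # Noise implied by the assumed interpolation x_s = (1 - s) x0 + s noise.
        noise_hat = (x - (1 - s) * x0_hat) / s
        # Jump straight to the phase's end point instead of taking small steps.
        x = (1 - e) * x0_hat + e * noise_hat
    return x, calls


oracle = lambda x, t: 3.0  # toy model that always knows the clean value
result, calls = phased_sample(x_T=0.7, predict_x0=oracle)
print(phase_edges(1000, 4), result, calls)
```

Here the whole trajectory is covered in four calls instead of one per timestep; in a real system the speedup depends on how many steps the original sampler used and on per-call cost.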

GameCraft reaches a real-time generation rate of 6.6 frames per second, with input response times under five seconds. The output video itself runs at 25 fps and is produced in 33-frame segments at 720p resolution. This balance of speed and quality makes interactive control practical.
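The numbers in this paragraph fit together with the 1.3-second chunks mentioned earlier. A quick check, assuming 6.6 fps is a sustained throughput over a whole segment (the article does not say exactly how it was measured):

```python
FRAMES_PER_SEGMENT = 33  # from the article
PLAYBACK_FPS = 25.0      # frame rate of the output video
GENERATION_FPS = 6.6     # reported generation throughput

segment_seconds = FRAMES_PER_SEGMENT / PLAYBACK_FPS      # video length of one segment
segment_compute_s = FRAMES_PER_SEGMENT / GENERATION_FPS  # time to generate it
realtime_ratio = GENERATION_FPS / PLAYBACK_FPS           # fraction of playback speed

print(f"{segment_seconds:.2f} s of video per segment")
print(f"{segment_compute_s:.1f} s to generate one segment")
print(f"{realtime_ratio:.2f}x playback speed")
```

Each 33-frame segment covers about 1.32 seconds of video and takes about five seconds to produce at 6.6 fps, which lines up with the quoted response time of under five seconds.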

The full code and model weights are available on GitHub, and a web demo is in the works.

GameCraft joins a growing field of interactive AI world models. Tencent's earlier Hunyuan World Model 1.0 can generate 3D scenes from text or images but is limited to static panoramas. Competitors include Google DeepMind's Genie 3 and the open-source Matrix-Game 2.0 from Skywork.
