Tencent's X-Omni uses open source components to challenge GPT-4o image generation

Tencent's X-Omni team shows how reinforcement learning can fix the usual weaknesses of hybrid image AI systems. The model excels at rendering long texts in images and sometimes sets new performance benchmarks.

Autoregressive AI models that generate images token by token face a core limitation: errors can accumulate during the generation process, which reduces image quality. To address this, most current systems use a hybrid approach, combining autoregressive models for semantic planning with diffusion models for the final image generation.

But hybrids have their own issue: the tokens generated by the autoregressive part often don't match what the diffusion decoder expects. Tencent's research team set out to fix this with X-Omni, using reinforcement learning to bridge the gap.

Unified reinforcement learning

X-Omni combines an autoregressive model that generates semantic tokens with the FLUX.1-dev diffusion model from German startup Black Forest Labs as its decoder. Unlike earlier hybrid systems, X-Omni doesn't train these two parts separately. Instead, it uses reinforcement learning to get them working together.

X-Omni first generates semantic tokens, then the diffusion decoder uses those tokens to create images. An evaluation system gives feedback about image quality, so the autoregressive model learns to make tokens that the decoder can use more effectively. The research paper says that image quality keeps getting better during reinforcement learning. After 200 training steps, X-Omni beats the best results from regular hybrid training.

X-Omni uses semantic tokenization instead of focusing on pixels. A SigLIP-VQ tokenizer breaks images into 16,384 different semantic tokens, which represent concepts instead of pixel details. The system is built on Alibaba's open source Qwen2.5-7B language model, with extra layers added for image processing.

For reinforcement learning, the team built a comprehensive evaluation pipeline: a human preference score for aesthetics, a model for scoring high-resolution images, and the vision-language model Qwen2.5-VL-32B to check if generated images match the prompts. For text accuracy, they used the OCR systems GOT-OCR-2.0 and PaddleOCR.

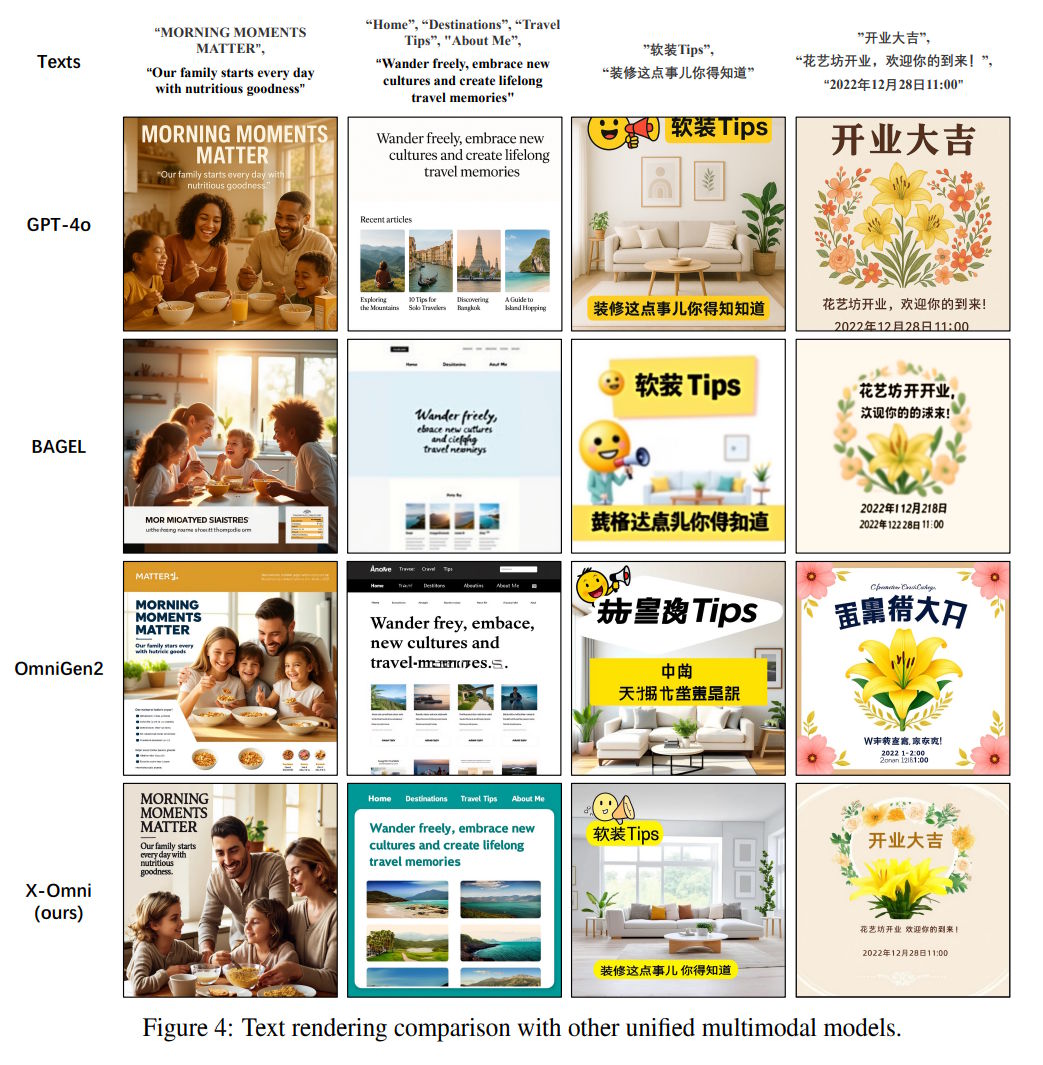

X-Omni stands out for how well it displays text in images. On established benchmarks, it scores 0.901 for English text, beating all comparable systems. For Chinese text, it even edges out GPT-4o. To test longer passages, the team created a LongText benchmark, where X-Omni leads most competitors, especially for Chinese.

For general image generation, X-Omni notched 87.65 on the DPG benchmark - the highest among all "unified models" and a bit above GPT-4o. The model also performs well on image understanding tasks and beats some specialized models in the OCRBench.

Open source and modular

X-Omni's reinforcement learning approach is promising, but the paper doesn't claim a huge leap in performance. In most benchmarks, the gains over alternatives are modest. GPT-4o remains a strong performer, and Bytedance's Seedream 3.0 also does well, though it only generates images.

What stands out is how X-Omni brings together open-source tools from different research teams - including competitors - to build a model that holds its own against commercial offerings like OpenAI's.

When it launched a few months ago, GPT-4o's image generation in ChatGPT set new standards, likely by combining autoregressive and diffusion architectures to improve prompt understanding and text rendering.

Tencent has released X-Omni as open source on Hugging Face and GitHub.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.