Researchers from Google DeepMind have developed a hybrid AI architecture that combines transformers with networks specialized in logical reasoning. The result is a language model that performs well at generalizing, even on complex tasks.

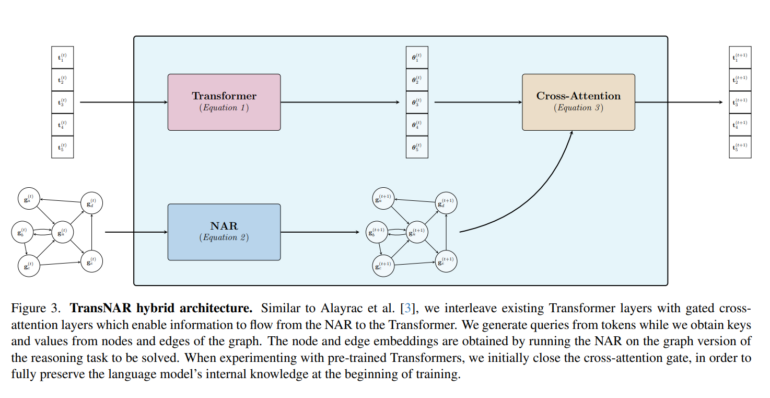

A team from Google DeepMind has developed a new AI architecture called TransNAR. It combines the strengths of two different AI approaches: the language processing capabilities of transformer language models and the robustness of specialized AI systems for algorithmic reasoning, known as Neural Algorithmic Reasoners (NARs).

The goal was to compensate for the weaknesses of the individual approaches. Transformer language models like GPT-4 are very good at processing and generating natural language. Without external tools, however, they often fail on tasks that require precise algorithmic calculations.

This is precisely where NARs excel. These AI systems based on Graph Neural Networks (GNNs) can robustly execute complex algorithms when the tasks are represented in graph form. However, they require rigid structuring of the input data and cannot be directly applied to unstructured problems in natural language.
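To illustrate why graph-shaped inputs suit algorithmic reasoning, the following minimal sketch runs rounds of message passing over a small weighted graph. It is not DeepMind's NAR: the update rule is hand-written rather than learned, and the names `relax_step`, `shortest_paths`, and `EDGES` are hypothetical. Each round, every node takes the minimum over the messages from its incoming edges, which is exactly one Bellman-Ford relaxation step. A trained GNN would have to learn an update of this kind from data.

```python
import math

def relax_step(dist, edges):
    """One message-passing round: every edge (u, v, w) sends dist[u] + w
    to node v, and v keeps the minimum of its old value and all messages."""
    new_dist = list(dist)
    for u, v, w in edges:
        message = dist[u] + w  # messages are computed from the previous round
        if message < new_dist[v]:
            new_dist[v] = message
    return new_dist

def shortest_paths(num_nodes, edges, source):
    """Repeat the round num_nodes - 1 times (Bellman-Ford)."""
    dist = [math.inf] * num_nodes
    dist[source] = 0.0
    for _ in range(num_nodes - 1):
        dist = relax_step(dist, edges)
    return dist

# Hypothetical toy graph: (source node, target node, edge weight)
EDGES = [(0, 1, 4.0), (0, 2, 1.0), (2, 1, 2.0), (1, 3, 1.0)]
DISTANCES = shortest_paths(4, EDGES, source=0)
print(DISTANCES)  # [0.0, 3.0, 1.0, 4.0]
```

The structure of the computation (nodes, edges, a fixed number of rounds) has to be given explicitly, which is the rigid input format the article refers to.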

The DeepMind researchers have now combined both approaches: a transformer language model processes the text of the task, while a NAR processes the corresponding graph representation. The two models exchange information via neural attention mechanisms. The NAR can thus be understood as a kind of "internal tool": communication does not take place via an API, but within the embeddings.

With the hybrid architecture, the language model can access the robust computations of the NAR and the system can execute algorithms based on a natural language description.
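The coupling described above can be sketched as a cross-attention step. This is an illustrative assumption, not the published implementation: it assumes the token embeddings act as queries and the NAR's node embeddings act as keys and values, it omits all learned projection matrices, and the names `cross_attend`, `TOKENS`, and `NODES` as well as the two-dimensional vectors are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attend(token_embs, node_embs):
    """Each token embedding queries all node embeddings; the attention-weighted
    mix of node embeddings is added back to the token (residual connection).
    Assumption: identity projections, i.e. no learned weight matrices."""
    scale = math.sqrt(len(node_embs[0]))
    updated = []
    for query in token_embs:
        weights = softmax([dot(query, key) / scale for key in node_embs])
        context = [
            sum(w * node[i] for w, node in zip(weights, node_embs))
            for i in range(len(query))
        ]
        updated.append([q + c for q, c in zip(query, context)])
    return updated

# Hypothetical toy embeddings: 2 text tokens, 3 graph nodes, dimension 2
TOKENS = [[1.0, 0.0], [0.0, 1.0]]
NODES = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
UPDATED = cross_attend(TOKENS, NODES)
```

No text is exchanged between the two components in this sketch. The token vectors are simply updated with information read from the node vectors, which is what "within the embeddings" means in contrast to a tool call over an API.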

TransNAR outperforms pure transformers, sometimes by a large margin

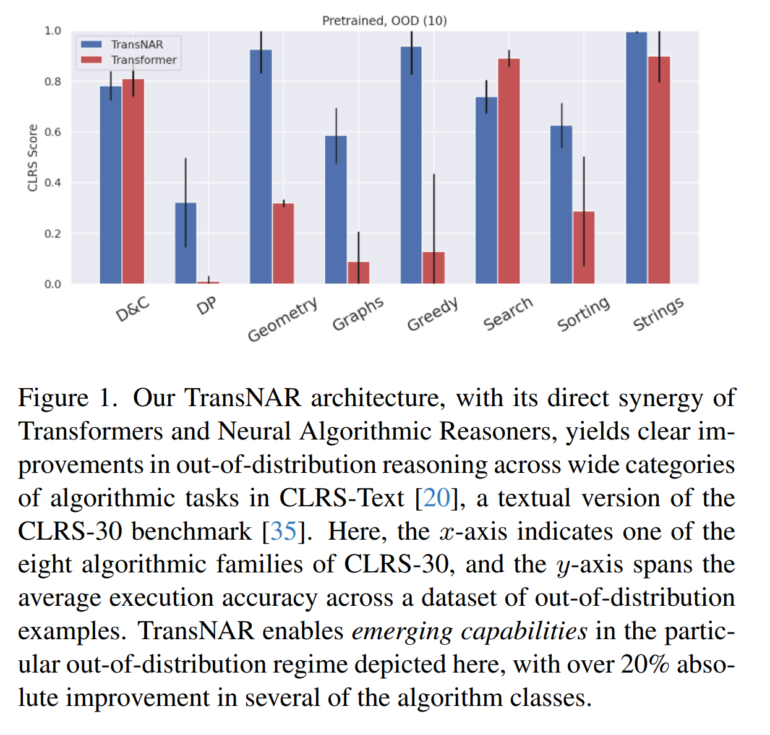

The researchers tested their TransNAR model on CLRS-Text, a text-based version of the CLRS-30 algorithmic reasoning benchmark. The benchmark covers 30 different algorithms from computer science, such as binary search and sorting methods.

The results showed that TransNAR performs significantly better than a pure transformer model, especially in out-of-distribution cases where the test data deviates from the training data, i.e., where the system has to generalize. In several classes of algorithms, TransNAR achieved an absolute improvement of more than 20 percentage points.

The team says the results show that by combining complementary AI approaches, the ability of language models to perform algorithmic reasoning can be significantly improved.