The U.S. would adhere to strict ethical guidelines, according to a U.S. Air Force general. But that should not be expected of a potential adversary, he said.

During an event at the Hudson Institute, Lt. Gen. Richard G. Moore Jr. was asked how the Pentagon views autonomous warfare. AI ethics is a topic of discussion at the highest levels of decision-making in the Defense Department, said the three-star Air Force general and deputy chief of staff for Air Force plans and programs.

“Regardless of what your beliefs are, our society is a Judeo-Christian society, and we have a moral compass. Not everybody does,” Moore said. “And there are those that are willing to go for the ends regardless of what means have to be employed.”

What role AI will play in the future of warfare depends on "who plays by the rules of warfare and who doesn’t. There are societies that have a very different foundation than ours," he said.


In a statement to the Washington Post, Moore said that while AI ethics may not be the United States’s sole province, it is unlikely that its adversaries would act according to the same values.

“The foundation of my comments was to explain that the Air Force is not going to allow AI to take actions, nor are we going to take actions on information provided by AI unless we can ensure that the information is in accordance with our values,” the Washington Post quoted Moore as saying. “While this may not be unique to our society, it is not anticipated to be the position of any potential adversary.”

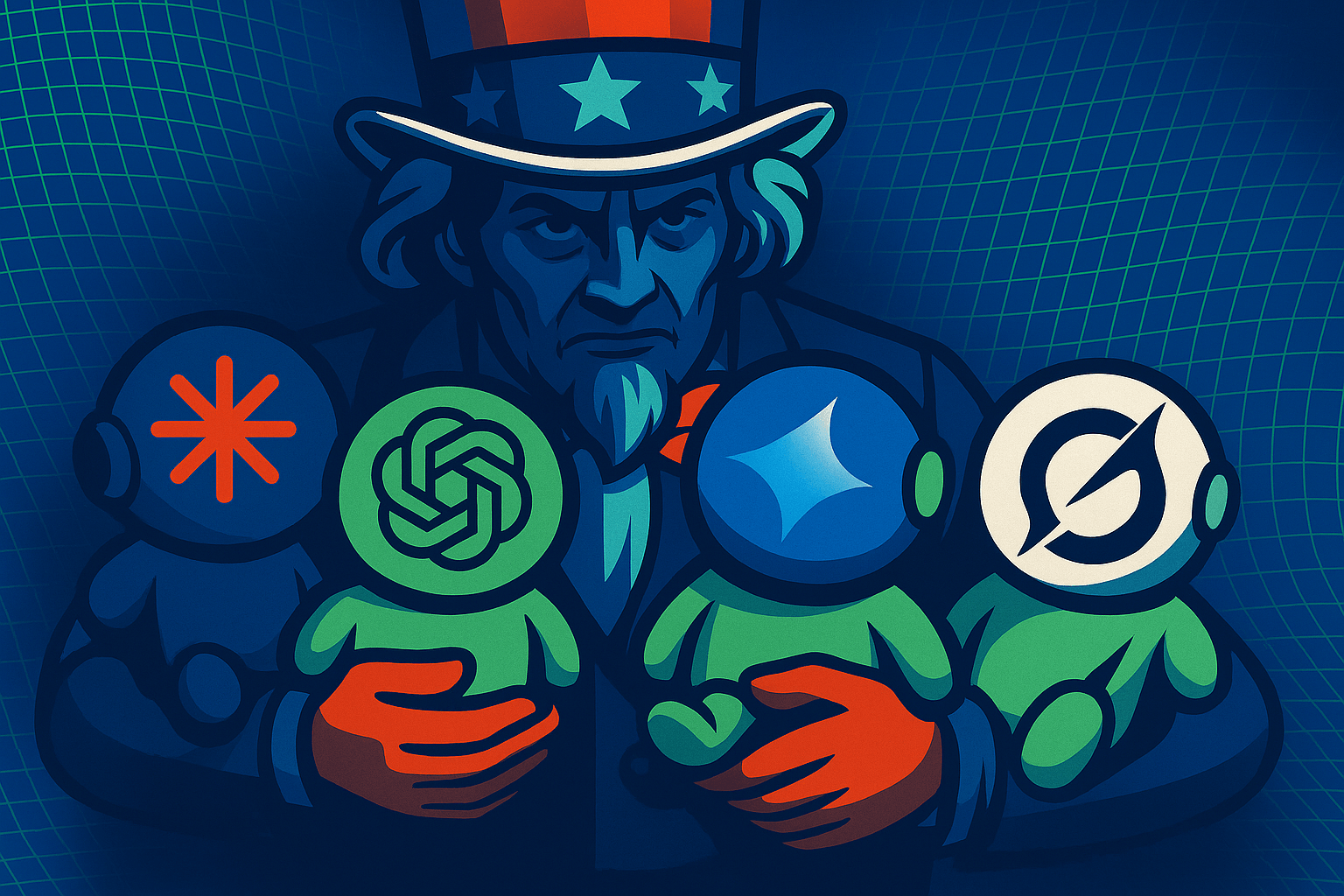

Moore did not specify who this potential adversary might be. What is clear, however, is that the United States regards China in particular as a potential adversary in an armed conflict in which AI could play a central role.

China also has reasons not to wage autonomous wars

But even though the two nations' ethical guidelines differ greatly and have historically drawn on very different sources (in the U.S. often Christian authors, in China Marxist-Leninist texts and Communist Party rules), both may ultimately have their own reasons for not giving AI systems ultimate control over weapons systems.

"Once you turn over control of a weapons system to an algorithm, worst case, then the party loses control," Mark Metcalf, a lecturer at the University of Virginia and a retired U.S. naval officer, said in an interview with The Washington Post.

Ethical standards as a disadvantage?

However, Moore's statements can also be read another way: while refraining from fully autonomous warfare is a moral imperative, it could prove to be a disadvantage in a full-scale war, for example in terms of response speed.

Even if the official doctrine of the U.S. armed forces is not to cross the threshold of full autonomy, the Pentagon must still find answers to an adversary that does not play by these rules. If it succeeds, that would likely improve the chances of these principles prevailing in the long run, especially in the event of an actual conflict.