Users claim Claude AI is getting dumber, Anthropic says it's not

Users are reporting that Anthropic's Claude chatbot has become less capable recently, echoing similar complaints about ChatGPT last year. Anthropic says it hasn't made any changes, highlighting the challenges of maintaining consistent AI performance.

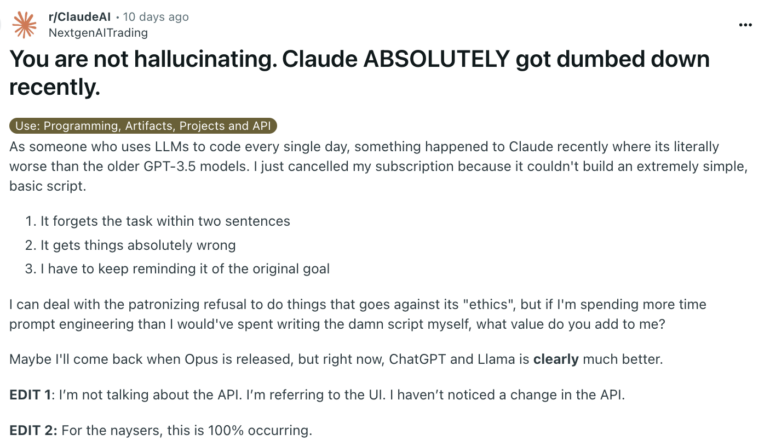

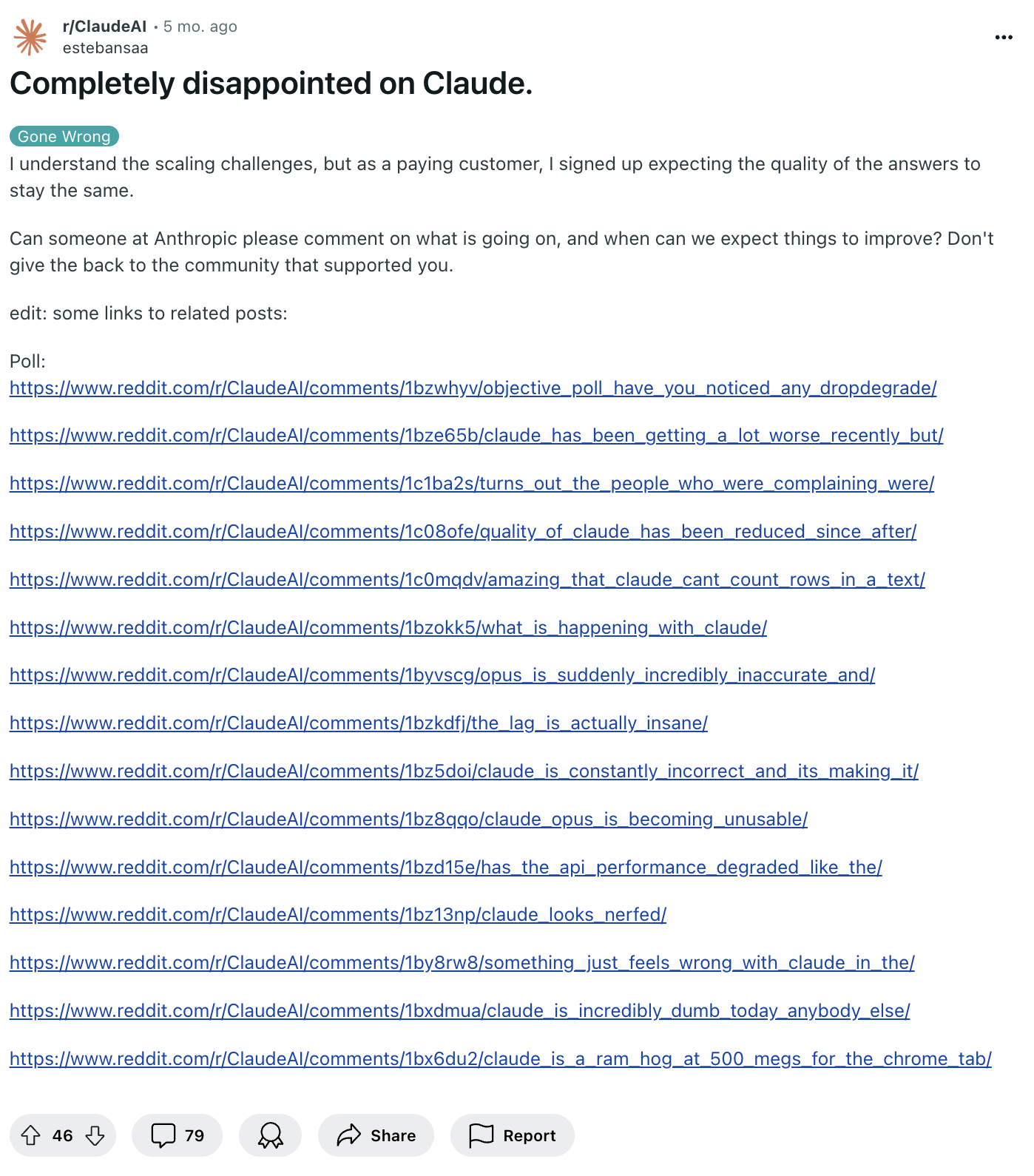

A Reddit post claiming "Claude absolutely got dumbed down recently" gained traction, with many users agreeing the chatbot's abilities have declined. The original poster said Claude now forgets tasks quickly and struggles with basic coding, prompting them to cancel their subscription.

Something is going on in the Web UI and I'm sick of being gaslit and told that it's not. Someone from Anthropic needs to investigate this because too many people are agreeing with me in the comments.

u/NextgenAITrading on Reddit

Anthropic's Alex Albert responded, stating their investigation "does not show any widespread issues" and confirming they haven't altered the Claude 3.5 Sonnet model or inference pipeline.

We'd also like to confirm that we've made no changes to the 3.5 Sonnet model or inference pipeline. If you notice anything specific or replicable, please use the thumbs down button on Claude responses to let us know. That feedback is very helpful.

Alex Albert, Developer Relations at Anthropic

To increase transparency, Anthropic now publishes its system prompts for Claude models on its website. Similar complaints about Claude surfaced in April 2024, and Anthropic denied making changes then as well. Some users later reported that performance had returned to normal.

Recurring pattern of perceived AI degradation

This pattern of users perceiving a decline and companies denying any change has occurred before, notably with ChatGPT in late 2023. Such complaints, first aimed at GPT-4 and GPT-4 Turbo, persist today and now extend to the latest GPT-4o model.

Several factors may explain these perceived declines. Users often become accustomed to AI capabilities and develop unrealistic expectations over time. When ChatGPT launched in November 2022 using GPT-3.5, it initially impressed many. Now, GPT-3.5 appears outdated compared to GPT-4 and similar models.

Natural variability in AI outputs, temporary computing resource constraints, and occasional processing errors also play a role. In our daily use, even reliable prompts sometimes produce subpar results, though regenerating the response usually resolves the issue.

These factors can contribute to the perception of decreased performance even when no significant changes have been made to the underlying AI models. But even when the model is changed or updated, it's often not clear what exactly is going on.

OpenAI recently released an updated GPT-4o variant, saying only that users "tend to prefer" the new model because it was unable to provide a concrete changelog. The company said it would like to share more details about how model responses differ, but cannot yet, because research into methods for granularly evaluating and communicating improvements in model behavior is not advanced enough.

OpenAI has previously noted that AI behavior can be unpredictable, describing AI training, model tuning, and evaluation as an "artisanal, multi-person effort" rather than a "clean industrial process." Model updates can improve some areas while degrading others. This shows how difficult it is to maintain and communicate generative AI performance at scale.
