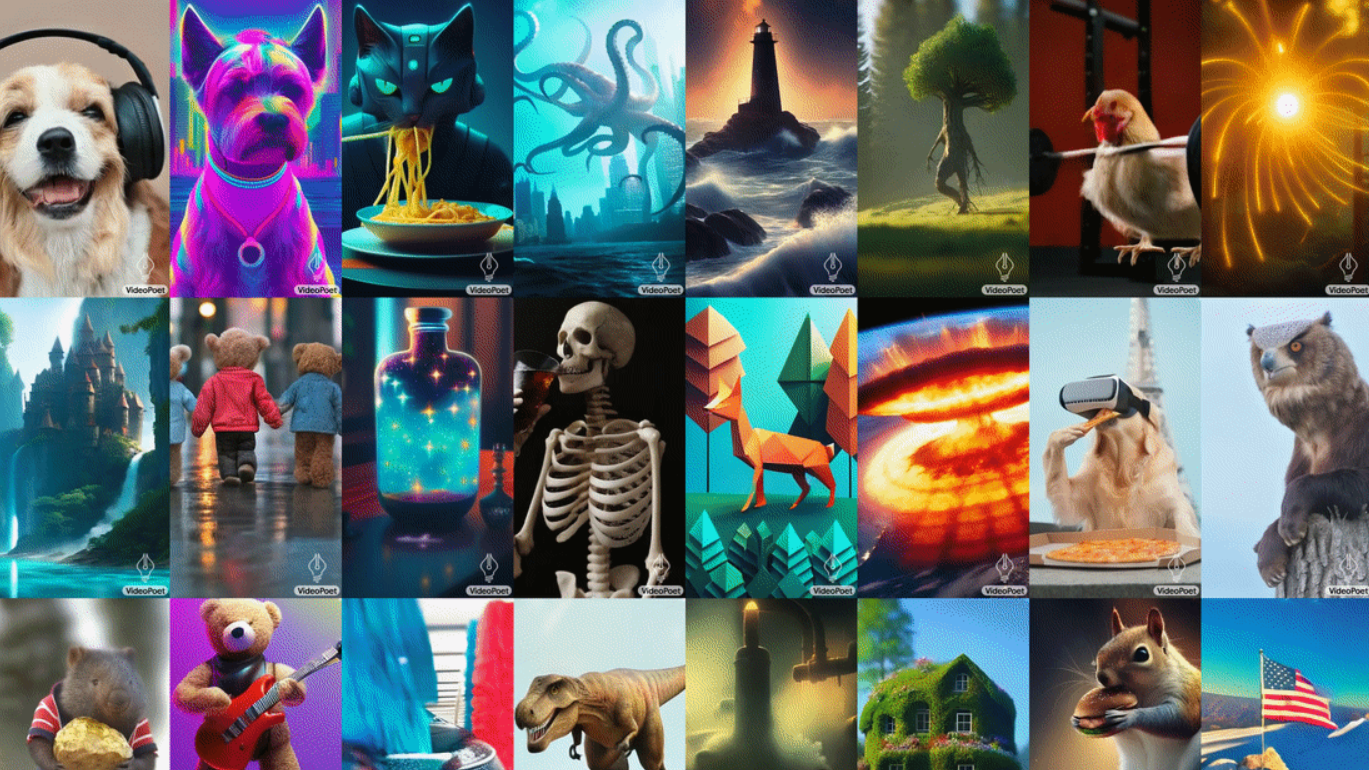

Google has unveiled VideoPoet, a new generative AI system that can create and edit videos from text and other input.

According to Google, VideoPoet is a large language model designed for a variety of video generation tasks, including text-to-video, image-to-video, video stylization, video inpainting and outpainting, and video-to-audio. Unlike competing models, VideoPoet integrates many capabilities into a single model, rather than relying on separately trained components for each task.

Video: Google

VideoPoet uses multiple tokenizers (MAGVIT V2 for video and image and SoundStream for audio) to train an autoregressive language model across video, image, audio, and text modalities. Once the model generates tokens conditioned on some context, these can be converted back into a viewable representation with the tokenizer decoders.

Video: Google
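The tokenize, generate, decode loop described above can be illustrated with a toy sketch. This is not Google's code: MAGVIT V2 and SoundStream are learned neural tokenizers, while the hypothetical `toy_tokenize` and `toy_detokenize` below are plain uniform quantizers, and `toy_next_token` is a random stand-in for the language model. Only the overall data flow (context to tokens, tokens to more tokens, tokens back to a signal) mirrors the description.

```python
import random
from typing import List, Sequence

VOCAB_SIZE = 16  # toy vocabulary size, chosen for illustration only


def toy_tokenize(values: Sequence[float]) -> List[int]:
    """Quantize values in [0, 1) into token ids (stand-in for a tokenizer encoder)."""
    return [max(0, min(int(v * VOCAB_SIZE), VOCAB_SIZE - 1)) for v in values]


def toy_detokenize(tokens: Sequence[int]) -> List[float]:
    """Map token ids back to bin centers (stand-in for a tokenizer decoder)."""
    return [(t + 0.5) / VOCAB_SIZE for t in tokens]


def toy_next_token(context: Sequence[int], rng: random.Random) -> int:
    """Hypothetical 'model': move at most one bin away from the last token."""
    step = rng.choice([-1, 0, 1])
    return max(0, min(VOCAB_SIZE - 1, context[-1] + step))


def generate(context_tokens: Sequence[int], num_new: int, seed: int = 0) -> List[int]:
    """Autoregressive loop: each new token is conditioned on all tokens so far."""
    if not context_tokens:
        raise ValueError("context_tokens must not be empty")
    rng = random.Random(seed)
    tokens = list(context_tokens)
    for _ in range(num_new):
        tokens.append(toy_next_token(tokens, rng))
    return tokens[len(context_tokens):]


if __name__ == "__main__":
    context = toy_tokenize([0.10, 0.15, 0.20])   # conditioning signal as tokens
    new_tokens = generate(context, num_new=5)    # model continues the sequence
    print(toy_detokenize(new_tokens))            # decode back to a viewable signal
```

In the real system the context would mix text, image, video and audio tokens in one sequence, which is what lets a single model cover so many tasks.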

VideoPoet can generate videos with variable length and a range of motions and styles, depending on the text content. It can also take an input image and animate it with a prompt, predict optical flow and depth information for video stylization, and generate audio. By default, the model generates videos in portrait orientation to tailor its output towards short-form content.

Video: Google

Camera movement can also be controlled by describing the desired motion in the text prompt.

Video: Google

VideoPoet can also create videos with sound, like this cat playing the piano.

Video: Google

VideoPoet is a step towards "any-to-any" generation

According to Google, VideoPoet was evaluated on several benchmarks, and its videos were compared with those of other models. On average, participants judged 24 to 35% of VideoPoet's examples to follow the prompt better than those of competing models such as Phenaki, VideoCrafter and Show-1.

According to Google, the framework could support "any-to-any" generation in the future and be extended to text-to-audio, audio-to-video and video captioning, "among many others".

Google has also produced a short film with VideoPoet, using Bard as the scriptwriter.

The company has not revealed whether it has any plans to make the model available, but integration into a planned Bard Advanced at some point seems possible. More examples in full resolution can be found on the VideoPoet project page.