Virtual rat with AI-powered brain could open new field of "virtual neuroscience"

Harvard neuroscientists collaborated with Google's DeepMind to create a biomechanically realistic virtual rat controlled by an artificial neural network. This new approach could provide insights into how brains generate complex, coordinated movements.

Scientists at Harvard University have teamed up with researchers at Google's DeepMind AI lab to create a virtual rat that moves just like a real rodent. The goal is to better understand how brains control and coordinate the effortless agility of human and animal movement, something no robot has yet closely emulated.

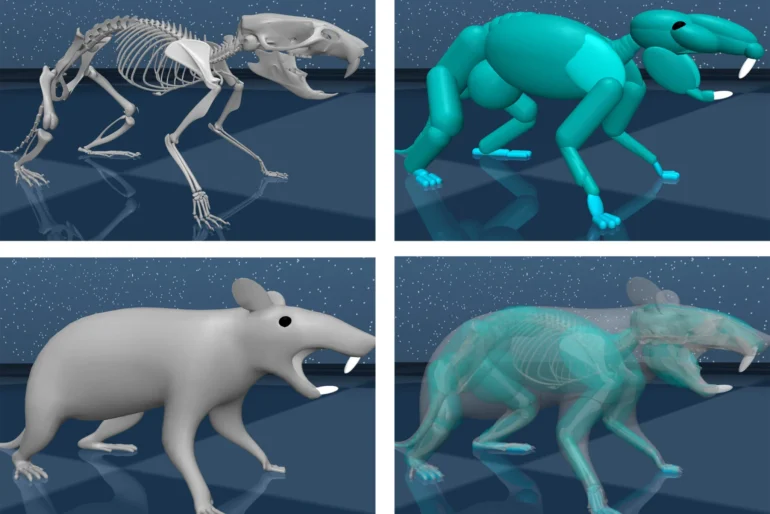

Professor Bence Ölveczky from Harvard's Department of Organismic and Evolutionary Biology led the effort to build a biomechanically realistic digital rat model. Using high-resolution data recorded from real rats, the team trained an artificial neural network that acts as the virtual rat's "brain." This AI brain controls the virtual body in MuJoCo, a physics simulator that models forces such as gravity.

Published in the journal Nature, the study found that activations in the virtual control network accurately predicted neural activity measured in the brains of real rats producing the same behaviors. "DeepMind had developed a pipeline to train biomechanical agents to move around complex environments. We simply didn't have the resources to run simulations like those, to train these networks," said Ölveczky.

AI rat could open up the field of "virtual neuroscience"

Matthew Botvinick, Senior Director of Research at Google DeepMind and co-author of the study, said working with the Harvard team was "a really exciting opportunity for us." He explained, "We've learned a huge amount from the challenge of building embodied agents: AI systems that not only have to think intelligently, but also have to translate that thinking into physical action in a complex environment."

The team trained the artificial neural network to implement inverse dynamics models, which scientists believe our brains use to guide movement. Similar to how our brain calculates the trajectory for reaching a cup of coffee and translates it into motor commands, the virtual rat's network learned to produce forces to generate desired movements based on reference trajectories from real rat data. This enabled the virtual rodent to imitate a wide range of behaviors, including novel ones it wasn't explicitly trained on.
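To make the idea of an inverse dynamics model more concrete, here is a minimal sketch in Python. It is not the study's method: the researchers trained a neural network to control a full rat body in MuJoCo, whereas this toy uses a closed-form physics equation for a single hypothetical joint (a pendulum). The mass, length, gains, and sine-wave reference trajectory are all illustrative assumptions, and the feedback correction is a standard "computed torque" technique swapped in to keep the simulation on track.

```python
import math

# Hypothetical single-joint "limb": a point mass on a rigid rod (a pendulum).
# All constants are illustrative assumptions, not values from the study.
MASS = 0.1      # kg
LENGTH = 0.05   # m
GRAVITY = 9.81  # m/s^2
DT = 0.001      # s, simulation time step
OMEGA = 2.0 * math.pi  # rad/s, frequency of the made-up reference motion


def reference(t):
    """Desired angle, velocity, and acceleration at time t.

    Stands in for a reference trajectory recorded from a real animal.
    """
    angle = 0.5 * math.sin(OMEGA * t)
    velocity = 0.5 * OMEGA * math.cos(OMEGA * t)
    acceleration = -0.5 * OMEGA ** 2 * math.sin(OMEGA * t)
    return angle, velocity, acceleration


def inverse_dynamics(theta, theta_ddot):
    """Torque needed to produce acceleration theta_ddot at angle theta."""
    inertia = MASS * LENGTH ** 2
    gravity_torque = MASS * GRAVITY * LENGTH * math.sin(theta)
    return inertia * theta_ddot + gravity_torque


def forward_dynamics(theta, torque):
    """Acceleration that a torque produces at angle theta (the 'physics')."""
    inertia = MASS * LENGTH ** 2
    gravity_torque = MASS * GRAVITY * LENGTH * math.sin(theta)
    return (torque - gravity_torque) / inertia


def track(duration=1.0, kp=400.0, kd=40.0):
    """Follow the reference trajectory; return the largest angle error (rad)."""
    theta, omega, _ = reference(0.0)
    max_error = 0.0
    for step in range(int(round(duration / DT))):
        t = step * DT
        ref_angle, ref_velocity, ref_acceleration = reference(t)
        # Desired acceleration: reference plus a feedback correction.
        desired = (ref_acceleration
                   + kp * (ref_angle - theta)
                   + kd * (ref_velocity - omega))
        # Inverse model: desired movement -> motor command (torque).
        torque = inverse_dynamics(theta, desired)
        # Simulated body: motor command -> actual movement.
        omega += forward_dynamics(theta, torque) * DT
        theta += omega * DT
        max_error = max(max_error, abs(theta - reference(t + DT)[0]))
    return max_error


if __name__ == "__main__":
    print(f"max tracking error: {track():.5f} rad")
```

The `inverse_dynamics` function plays the role the article describes: it turns a desired movement into the force needed to produce it. In the virtual rat, that mapping is learned by the network rather than written down as an equation.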

The researchers believe these simulations could launch a new field of "virtual neuroscience," in which AI-simulated animals, trained to behave like their real counterparts, provide convenient and transparent models for studying neural circuits and, for example, how disease affects them. Beyond fundamental brain research, the platform could also be used to engineer improved robotic control systems.

Next, the scientists want to give the virtual rat autonomy to solve tasks encountered by real rats. "From our experiments, we have a lot of ideas about how such tasks are solved, and how the learning algorithms that underlie the acquisition of skilled behaviors are implemented," said Ölveczky. "We want to start using the virtual rats to test these ideas and help advance our understanding of how real brains generate complex behavior."
