A look back at the future of computing: Who would have thought it? Deepfakes work. What now?

If media literacy is the answer to AI media, then deepfakes have already won

Two recent studies show that people can hardly distinguish synthetic media (also called "deepfakes", depending on the context) from authentic media. With simple portrait images, telling them apart is almost impossible.

Only as the fakes become more complex (video, audio) do people get it right significantly more often than chance, and even then they are still wrong in many cases. And the researchers did not even use the latest deepfake technology.

Both studies, like many others, recommend teaching people media literacy so that they can better detect AI fakes. I think this is a fallacy.

If people can hardly recognize AI fakes even under ideal conditions, that is, while concentrating on the media content and knowing that they are supposed to spot a fake, then they will certainly not succeed in the hectic maze of social media.

Many fake photos and videos about Russia's war against Ukraine could actually be exposed relatively easily because of obvious errors in the image itself (wrong season, wrong region, wrong language, wrong uniform, etc.). Nevertheless, people believe them, and they circulate widely on the web.

And in the future, are humans supposed to unmask AI content that has been faked specifically for one purpose? Because of blurry gaps between teeth or a slightly out-of-focus iris? Because a few hairs stick out oddly? Because the voice has an unusual intonation at one point? Because a video is only 95 percent lip-synced?

In my opinion, it is illusory to believe that more (or any) media literacy alone can eliminate the potential danger of deepfakes. That is partly because we have to expect that at some point deepfakes will no longer be detectable with the naked eye, even by professionals. Generative AI is still at the beginning of its development, despite the great progress made in recent years.

Either we find a technical solution, or we end up where "deepfake inventor" Ian Goodfellow predicted back in 2017: in a world where we can no longer trust photos and videos, and therefore no longer readily believe authentic recordings either.

>> Deepfake test: Do people recognize synthetic media?

Future of computing now!

Eve CEO Jerome Gackel: Matter is a gamechanger for the smart home

Meta Quest (2): New update improves tracking, Oculus Move expands

OpenXR aims to standardize advanced haptics for VR and AR

Deepmind and Linkedin founders promise new interface era

Autonomous driving: Intel takes Mobileye public

AI for pigs: Grunt analysis to promote animal welfare

Highlight of the week: Magic Leap 2 gets off to a good start

The first independent reports about Magic Leap 2 are positive. Testers especially praise the larger field of view and the dimming technology.

>> Magic Leap 2: New AR glasses surprise testers

Something to finish with

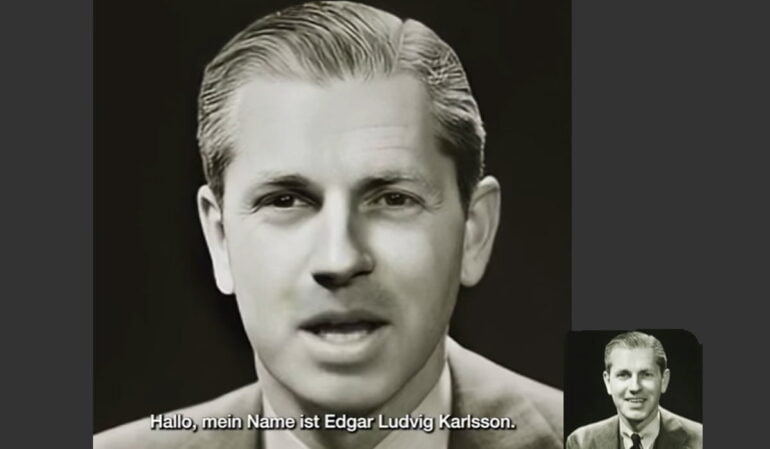

Remember MyHeritage? That's right, the website whose AI tool makes portraits of deceased people wink or smile. Now the portraits can also speak, with lip-synced mouth movements.

>> MyHeritage: New AI tool lets deceased people speak in photos