Zhipu AI's GLM-4.5 is yet another open-source Chinese LLM closing the gap with Western models

Zhipu AI has released GLM-4.5 and GLM-4.5V, a new family of open-source language models built for logical reasoning, programming, and agent-based tasks.

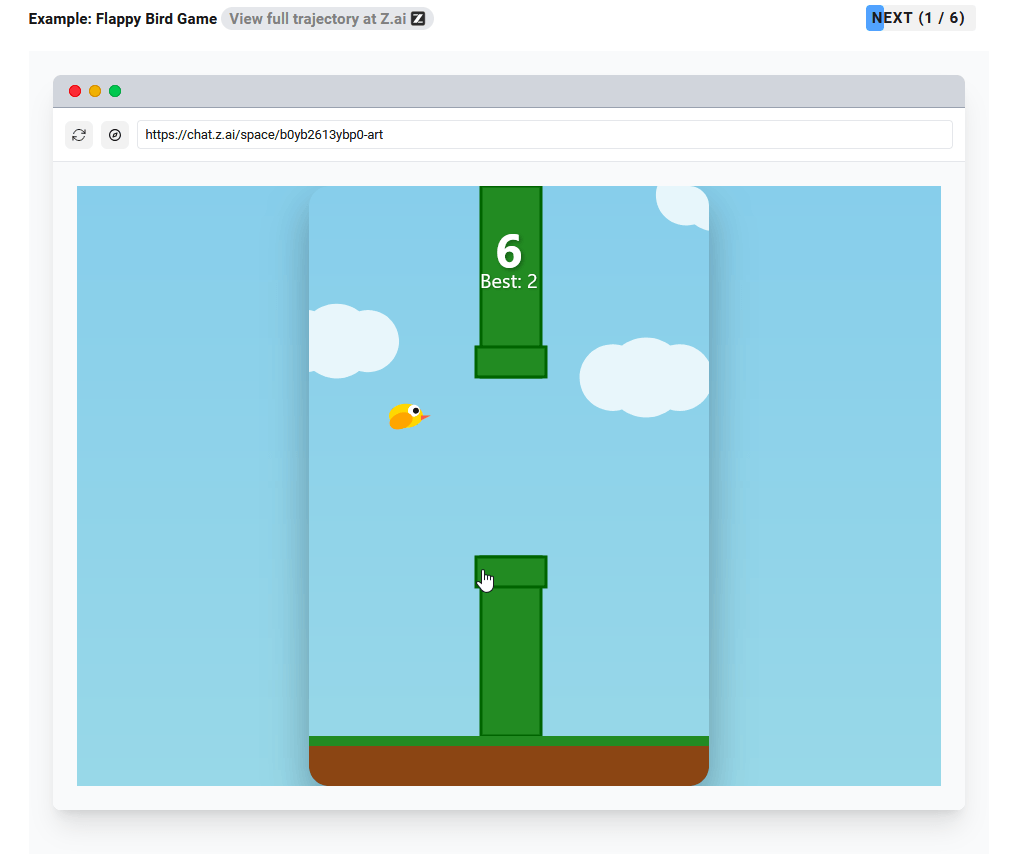

To showcase the models' practical capabilities, Zhipu AI points to examples like generating interactive mini-games and physics simulations, producing presentation slides with autonomous web search, and developing full web applications with integrated frontends and backends.

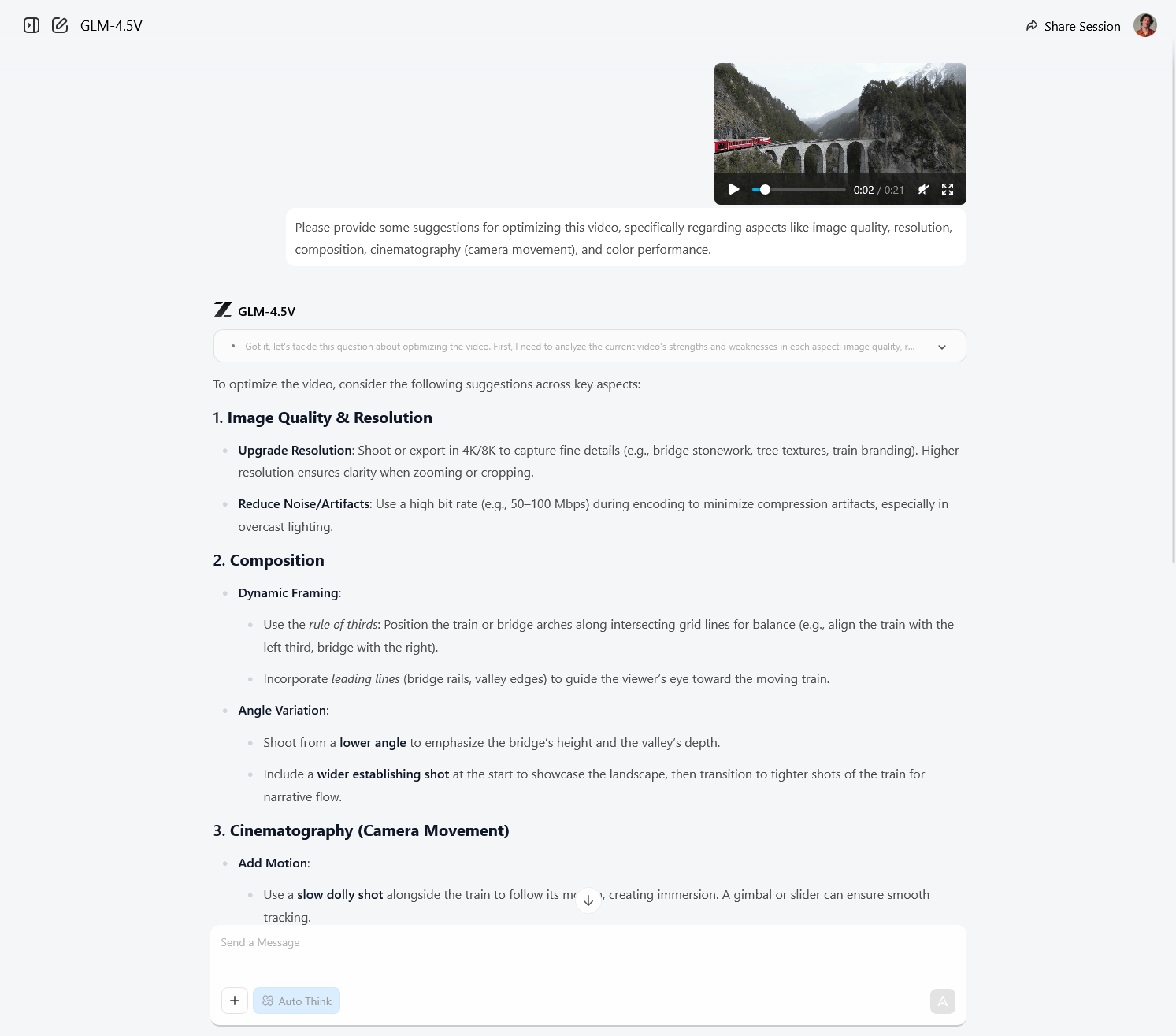

The multimodal version, GLM-4.5V, adds image and video analysis. It can also reconstruct websites from screenshots and carry out on-screen actions for autonomous agents. Users can try these features for free in a ChatGPT-style interface at chat.z.ai after logging in.

The lineup includes three models: the standard GLM-4.5, the lighter GLM-4.5-Air, and the multimodal GLM-4.5V. Each model offers a hybrid approach with two modes: "think mode" for complex reasoning and "quick response mode" for faster answers.

Strong results with fewer parameters

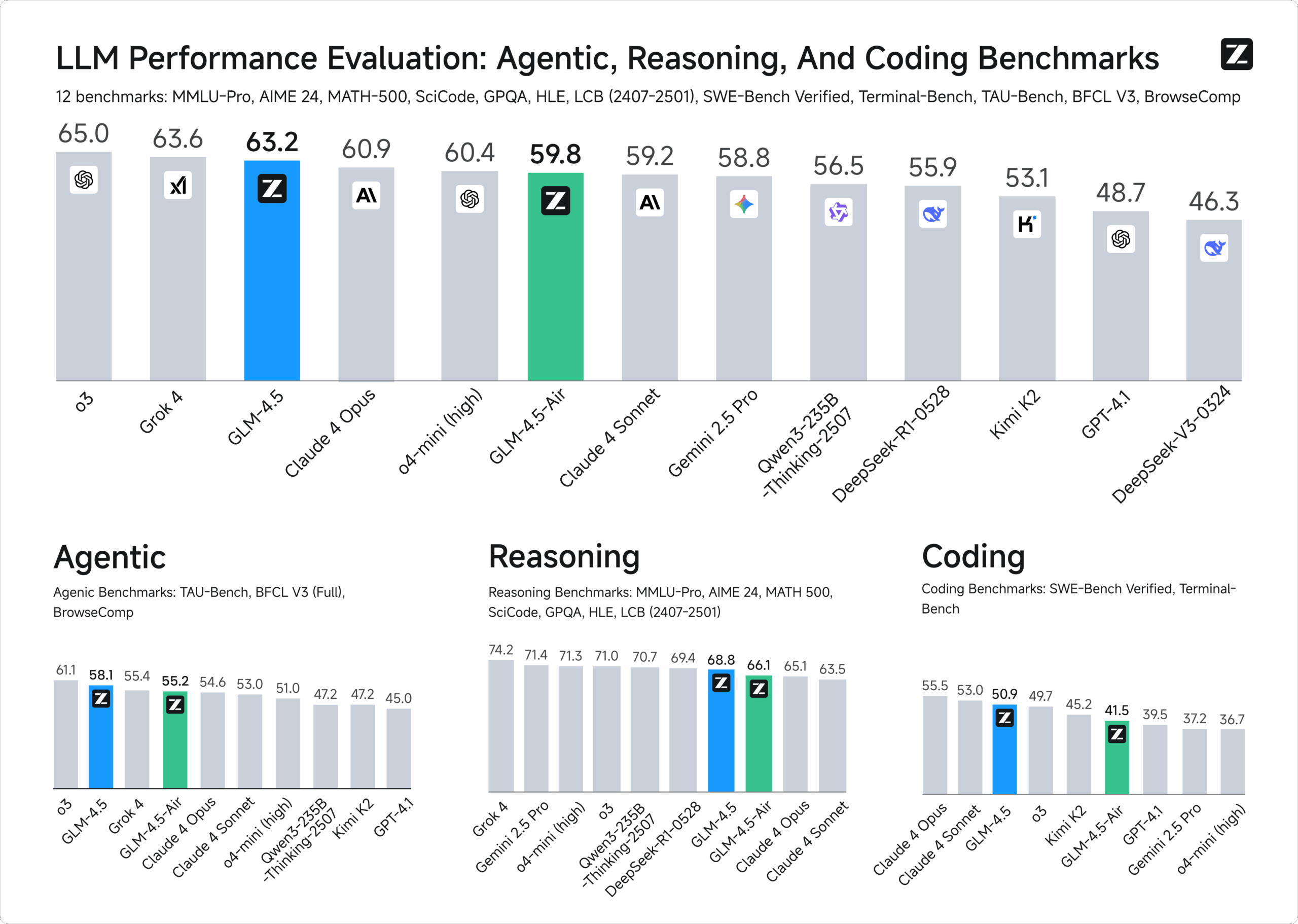

Zhipu AI claims GLM-4.5V delivers the strongest performance among open-source models of a similar size. In tests across twelve benchmarks, GLM-4.5 ranked third overall and second for autonomous tasks. It scored 70.1 percent on TAU-Bench agent tasks, 91.0 percent on AIME 24 math problems, and 64.2 percent on SWE-Bench Verified software engineering tasks.

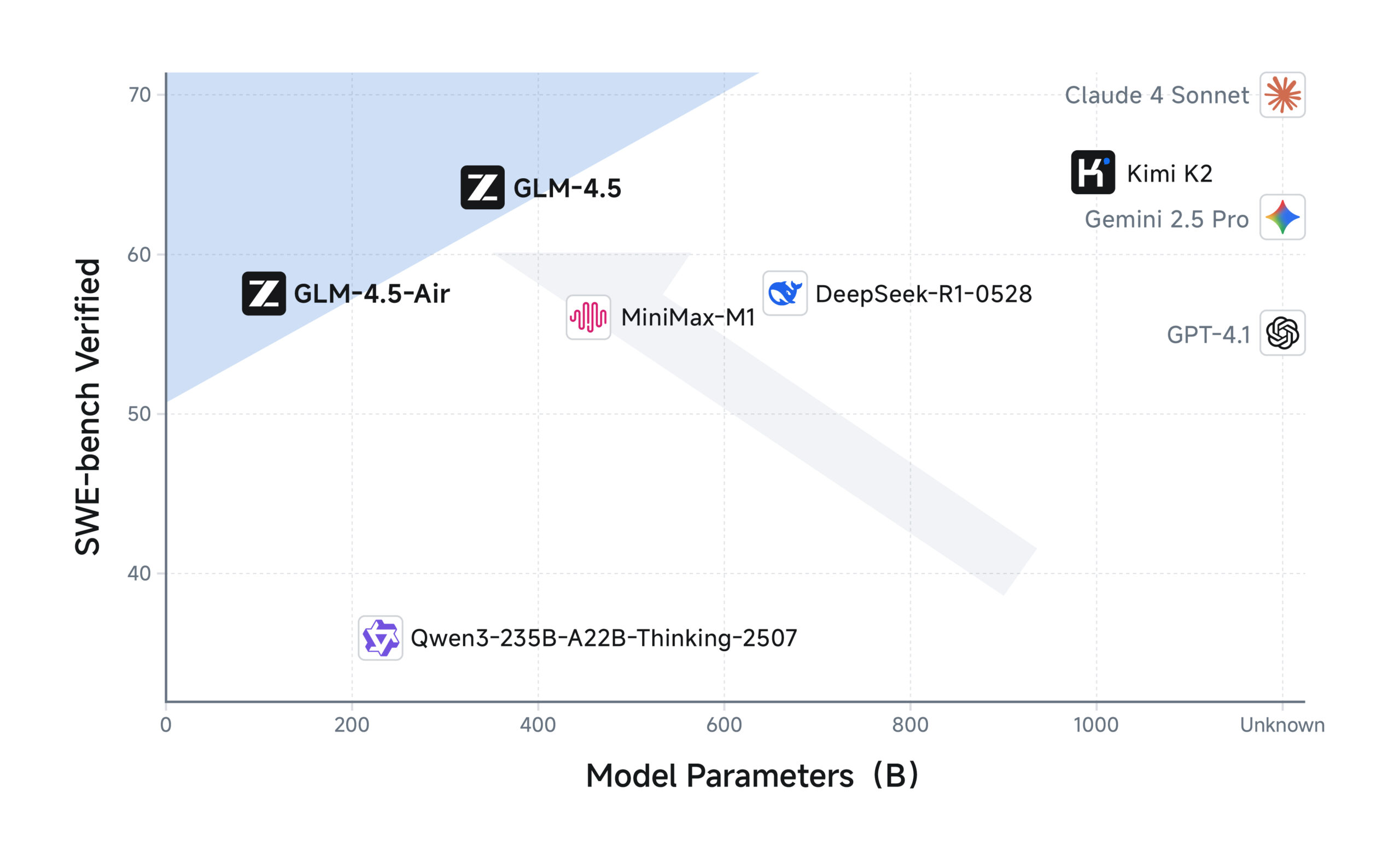

Parameter efficiency stands out: with 355 billion total parameters, GLM-4.5 is roughly half the size of Deepseek-R1 (671 billion) and about a third the size of Kimi K2 (1 trillion), yet it matches or beats their performance. For web navigation, GLM-4.5 reaches 26.4 percent on BrowseComp, ahead of even Claude Opus 4, which scores 18.8 percent.
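The size comparison is simple arithmetic on total parameter counts. In the sketch below, GLM-4.5's 355 billion comes from the article, while the Deepseek-R1 and Kimi K2 totals (671 billion and roughly 1 trillion) are their publicly reported sizes. The comparison deliberately ignores that all three are mixture-of-experts models with far fewer parameters active per token.

```python
# Total parameter counts in billions (MoE totals, not active parameters).
TOTAL_PARAMS_B = {
    "GLM-4.5": 355,      # from the article
    "Deepseek-R1": 671,  # publicly reported total
    "Kimi K2": 1000,     # publicly reported total (~1 trillion)
}


def size_fraction(other: str, model: str = "GLM-4.5") -> float:
    """Return `model`'s total parameter count as a fraction of `other`'s."""
    return TOTAL_PARAMS_B[model] / TOTAL_PARAMS_B[other]


for name in ("Deepseek-R1", "Kimi K2"):
    print(f"GLM-4.5 has {size_fraction(name):.0%} of {name}'s total parameters")
```

The ratios come out at a little over one half and a little over one third, which is what "half" and "a third" round from.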

Deeper architecture for better reasoning

GLM-4.5 uses a mixture-of-experts architecture with 355 billion total parameters, of which 32 billion are active for any given token. The compact GLM-4.5-Air has 106 billion parameters in total, with 12 billion active. GLM-4.5V builds on the Air version.
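In a mixture-of-experts layer, a router sends each token to a small subset of expert sub-networks. That is why only a fraction of the total parameters (32 of 355 billion, about 9 percent, for GLM-4.5) does work per token. The sketch below shows generic top-k routing in plain Python; the expert count, `top_k`, and the toy scaling "experts" are hypothetical values chosen for illustration, not GLM-4.5's actual configuration or Zhipu AI's implementation.

```python
import math
import random
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, router: List[Vector], experts: List[Expert],
              top_k: int) -> Tuple[Vector, List[int]]:
    """Route one token x to its top_k experts and mix their outputs."""
    # Router logit per expert: dot product of the token with that expert's routing vector.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router]
    # Only the top_k highest-scoring experts run; all others stay idle for this token.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize the selected logits into mixing weights that sum to 1.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, chosen


# Hypothetical toy setup: 8 experts that each scale the input, 2 active per token.
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
rng = random.Random(0)
router = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts: List[Expert] = [lambda v, s=s: [s * v_j for v_j in v] for s in range(1, NUM_EXPERTS + 1)]

token = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
output, active = moe_layer(token, router, experts, TOP_K)
print(f"active experts: {active} ({TOP_K} of {NUM_EXPERTS})")
print(f"GLM-4.5 active share: {32 / 355:.1%}; GLM-4.5-Air: {12 / 106:.1%}")
```

Compute per token scales with the active experts rather than the total, while memory still has to hold every expert's weights.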

Unlike models such as Deepseek-V3 and Kimi K2, GLM-4.5 favors deeper networks with more layers over wider ones with more parameters per layer; Zhipu AI's research found that increasing depth improved reasoning. Training covered around 23 trillion tokens in multiple phases, starting with general data and moving on to specialized code and reasoning data.
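The depth-versus-width trade-off can be made concrete with a common rough estimate: a standard dense transformer layer holds about 12·d² weights (4·d² for the attention projections, 8·d² for a feed-forward block with 4× expansion), ignoring embeddings, biases, and normalization. The two configurations below are hypothetical dense models, not GLM-4.5's MoE layout; they only show how a similar parameter budget can go into more layers or into wider ones.

```python
def approx_block_params(d_model: int, ffn_mult: int = 4) -> int:
    """Rough weight count of one dense transformer layer."""
    attention = 4 * d_model * d_model         # Q, K, V and output projections
    ffn = 2 * ffn_mult * d_model * d_model    # up- and down-projection
    return attention + ffn


def approx_model_params(n_layers: int, d_model: int, ffn_mult: int = 4) -> int:
    """Stack of identical layers; embeddings and norms are ignored."""
    return n_layers * approx_block_params(d_model, ffn_mult)


# Hypothetical configurations with roughly equal budgets.
CONFIGS = {
    "deeper, narrower": (96, 4096),
    "shallower, wider": (61, 5120),
}

for name, (layers, width) in CONFIGS.items():
    total = approx_model_params(layers, width)
    print(f"{name}: {layers} layers, d_model={width} -> ~{total / 1e9:.1f}B params")
```

Both land near 19 billion parameters, so the choice between them is about how the budget is arranged, which is the axis Zhipu AI says it tuned toward depth.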

Zhipu's rise to a multibillion-dollar valuation

All models are available through the Z.ai platform with OpenAI-compatible API endpoints. The code is open source on GitHub, and the model weights can be downloaded from Hugging Face and Alibaba's ModelScope.
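Because the endpoints follow OpenAI's chat-completions format, a request is a JSON POST containing a model name and a list of messages. The standard-library sketch below only builds the request and does not send it. The base URL and the `glm-4.5` model ID are assumptions to verify against Z.ai's API documentation, and `YOUR_API_KEY` is a placeholder.

```python
import json
import urllib.request

# Assumed values: check Z.ai's API documentation for the real base URL and model IDs.
BASE_URL = "https://api.z.ai/api/paas/v4"  # assumption
MODEL = "glm-4.5"                          # assumption


def build_chat_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("Write a minimal Snake game in HTML.", api_key="YOUR_API_KEY")
# To send it: urllib.request.urlopen(req), then json.load() the response and read
# choices[0]["message"]["content"], as with OpenAI's API.
```

Since the format is OpenAI-compatible, existing OpenAI client libraries should also work by pointing their base URL at the Z.ai endpoint.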

Zhipu AI first attracted attention in 2022, when its GLM-130B model outperformed offerings from Google and OpenAI. Founded in 2019 by professors from Tsinghua University and based in Beijing, the company now employs more than 800 people, most of whom work in research and development.

Major investors include Chinese tech giants such as Alibaba, Tencent, and Xiaomi, as well as several sovereign wealth funds. International backers such as Saudi Aramco's Prosperity7 Ventures have also invested, and the company is now valued at more than $5 billion. Like Deepseek, Zhipu AI is known for its strong academic team and independent research, and it is currently preparing for an IPO.

All Chinese AI models are subject to government censorship, reflecting the priorities and ideology of the Chinese administration. Meanwhile, the US government under Trump is pushing for its own restrictions on US AI models, driven by a different set of political values. In both cases, these models risk becoming tools for state propaganda and the broader culture wars: different ideologies, but ultimately similar forms of censorship that shape how AI systems are used and what they can say.
