3D-GPT generates 3D worlds in Blender

Researchers demonstrate 3D-GPT, an AI system that generates 3D worlds in Blender from text prompts.

The team sees 3D-GPT as a potential support for classical procedural methods that generate 3D content via customizable parameters and rule-based systems.

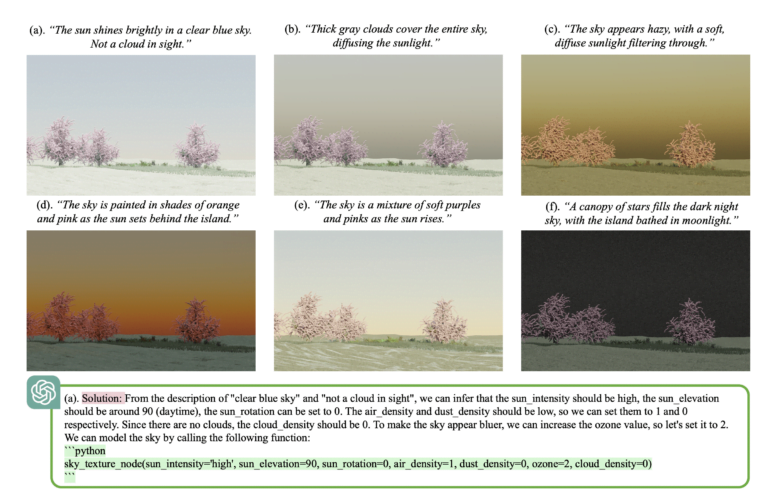

Rather than requiring users to set these parameters by hand, 3D-GPT uses OpenAI's large language models to interpret natural language instructions for procedural 3D generation.

3D-GPT uses chatbot agents and Blender

To do this, the AI system breaks down complex modeling tasks into smaller components that are handled by AI agents. The task dispatch agent knows all available functions within the procedural generation system and selects the ones a given prompt requires. This selection is passed on to the other agents.

The conceptualization agent is responsible for detecting missing details and correcting inaccuracies in the original text. What color should the flowers on a tree be? What kind of trees are we talking about, and how long are the branches? The agent adds the necessary details to the description given by the human.

All of this information is then passed to the modeling agent, which uses the augmented prompt, associated functions, and tool functionality documentation to write Python code that directly controls the 3D software to generate 3D content.
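The hand-off between the three agents can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: the function library (`add_trees`, `add_flowers`, `add_terrain`), the prompt wording, and the `fake_llm` stand-in are all hypothetical, and no real OpenAI or Blender call is made.

```python
from typing import Callable, Dict, List

# Hypothetical function library: name -> one-line documentation.
# These names are illustrative only, not 3D-GPT's or Blender's actual API.
FUNCTION_DOCS: Dict[str, str] = {
    "add_trees": "add_trees(species: str, branch_length: float)",
    "add_flowers": "add_flowers(color: str, density: float)",
    "add_terrain": "add_terrain(kind: str)",
}

LLM = Callable[[str], str]  # any text-in, text-out model call


def dispatch_task(prompt: str, llm: LLM) -> List[str]:
    """Task dispatch: ask the model which known functions the prompt needs."""
    request = (
        "TASK: select functions\n"
        f"AVAILABLE: {', '.join(FUNCTION_DOCS)}\n"
        f"PROMPT: {prompt}"
    )
    names = [name.strip() for name in llm(request).split(",")]
    # Keep only functions that actually exist in the library.
    return [name for name in names if name in FUNCTION_DOCS]


def conceptualize(prompt: str, llm: LLM) -> str:
    """Conceptualization: ask the model to fill in missing scene details."""
    return llm(f"TASK: enrich description\nPROMPT: {prompt}")


def write_model_code(description: str, functions: List[str], llm: LLM) -> str:
    """Modeling: ask the model for code, given the description and docs."""
    docs = "\n".join(FUNCTION_DOCS[name] for name in functions)
    return llm(f"TASK: write code\nDOCS:\n{docs}\nDESCRIPTION: {description}")


def run_pipeline(prompt: str, llm: LLM) -> str:
    """Chain the three agents and return the generated source code."""
    functions = dispatch_task(prompt, llm)
    description = conceptualize(prompt, llm)
    return write_model_code(description, functions, llm)


def fake_llm(request: str) -> str:
    """Canned stand-in for a real model so the sketch runs offline."""
    if request.startswith("TASK: select functions"):
        return "add_trees, add_flowers, summon_dragon"
    if request.startswith("TASK: enrich description"):
        return "A meadow with oak trees (2 m branches) and dense pink flowers."
    return (
        'add_trees(species="oak", branch_length=2.0)\n'
        'add_flowers(color="pink", density=0.8)'
    )


if __name__ == "__main__":
    print(run_pipeline("a flowering meadow with trees", fake_llm))
```

In the system described above, the generated code would run inside Blender; the sketch only returns it as text. Filtering the dispatch result against the known library is one simple way to guard against a model naming functions that do not exist.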

3D-GPT shows the potential of AI for 3D development

Using a number of examples, the researchers show that the approach can enable precise control through procedural generation. This could significantly reduce the workload, they say, by eliminating the need to manually define many parameters.

Video: Sun, Han et al.

However, this reliance on procedural generation algorithms is also a limitation of 3D-GPT. Its potential in certain modeling categories, such as hair or fur, is limited, they say. In addition, 3D-GPT cannot yet control some advanced features of 3D software, so manipulating branches or blending texture colors remains a challenge.

More information and the code are available on the 3D-GPT project page.
