Unintentional AI leak from Mistral becomes an unexpected powerhouse

A new AI model is making waves in the open-source scene. Now it's clear: it's a leak of an older Mistral model.

A few days ago, the AI model "miqu-1-70b" appeared on HuggingFace, where a user named "Miqu Dev" first posted the files. On the same day, an anonymous user posted a link to the files on 4chan. Mistral CEO Arthur Mensch confirmed yesterday that it was a leaked model from his company.

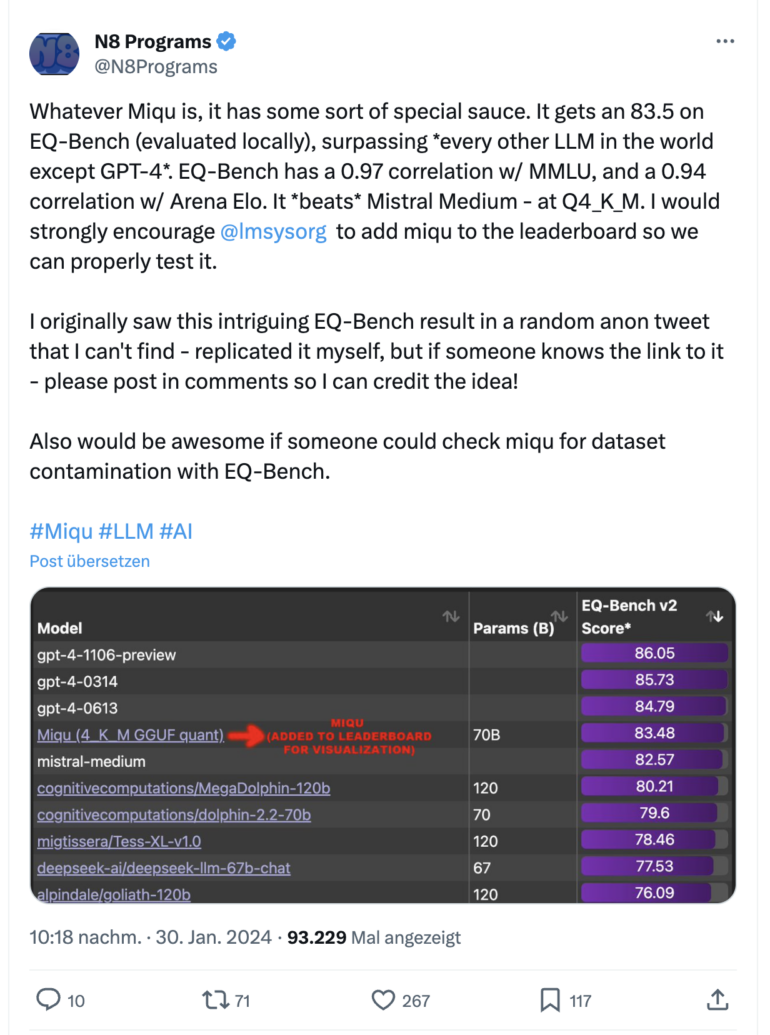

After the leak on 4chan, the model quickly attracted attention: initial community tests show that in most cases it performs as well as or better than Mixtral, Mistral's strongest open-source model to date. In some tests, it outperformed Mistral Medium, the company's strongest model, and in one benchmark it beat all language models except GPT-4.

Miqu-1-70B is an old Mistral model based on Llama-2

After much speculation, Mistral CEO Arthur Mensch confirmed on X yesterday that an "over-enthusiastic employee" of an early access customer had released a quantized and watermarked version of an old model trained by Mistral. According to Mensch, this is an old model that the company trained based on Meta's Llama 2. The pre-training of the model was completed at the time of the release of Mistral-7B, the company's first language model. Some had speculated that Mistral itself had leaked the model after the Paris-based AI startup first released its latest Mixtral model via a torrent.

The company appears to have no plans to remove the model from HuggingFace. Whether an official release with a license is planned is unknown. Interestingly, Mensch responded to the HuggingFace post not by asking for its removal, but by jokingly suggesting that the poster "might consider attribution".

According to Mensch, the company has made great progress since developing the leaked model, so a model on par with GPT-4 can probably be expected with Mistral-Large.
