Google launches Imagen 3 and two-million-token context windows in its Vertex AI cloud

Google has introduced new products for its Vertex AI cloud platform.

Gemini 1.5 Flash combines low latency, competitive pricing, and a one-million-token context window. Google says this makes it an excellent choice for use cases such as retail chatbots, document processing, and research agents.

Gemini 1.5 Pro offers a context window of up to two million tokens, making it suitable for multimodal use cases such as analyzing large amounts of code, audio files, or videos. Users should keep in mind the "lost in the middle" problem, in which language models tend to overlook information placed in the middle of a long context; the Gemini models are likely affected by it as well.

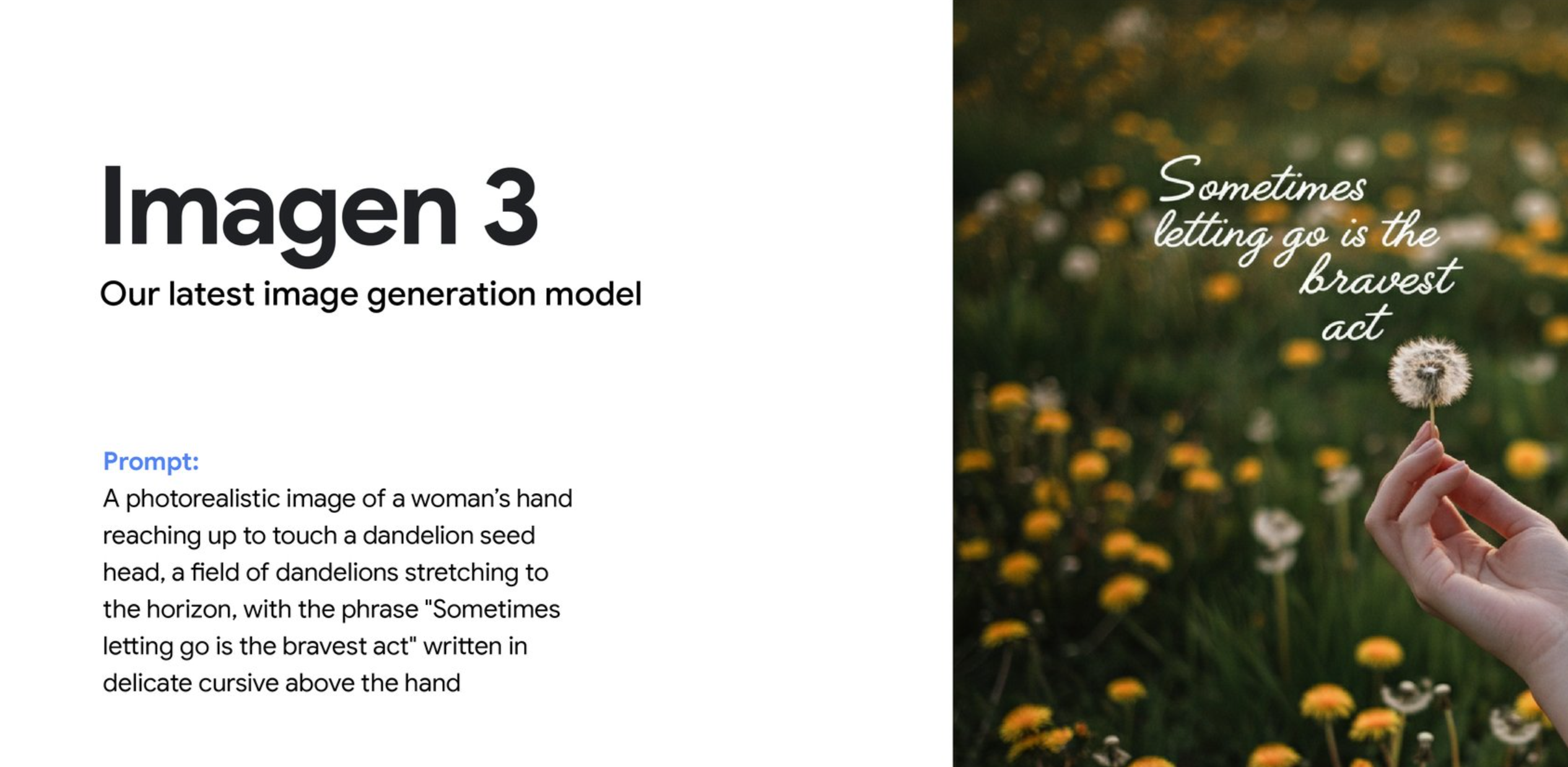

Google says Imagen 3, its latest image generation model, generates images over 40 percent faster than its predecessor and follows prompts more closely. In a brief test of a preview version, I did not get the impression that it comes close to current industry leaders such as Ideogram or Midjourney in image quality or prompt accuracy.

Customers can request access to Imagen 3 in Vertex AI. All generated images are automatically labeled with DeepMind's SynthID watermark.

Google is also expanding the selection of third-party and open-source models in Vertex AI, reducing costs through context caching, improving how AI outputs are grounded in data, and releasing Gemma 2 as a powerful open-source model. Read all the news here.

AI News Without the Hype – Curated by Humans

As a subscriber to THE DECODER, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report six times per year, access to comments, and our complete archive.