Mistral Large 2, released just one day after Llama 3.1, signals the LLM market is getting redder by the day

Key Points

- French AI company Mistral AI has released Large 2, a new language model that aims to match the performance of Meta's Llama 3.1 405B while being more efficient, with less than a third of the parameters (123B vs. 405B).

- Large 2 brings significant improvements over its predecessor in code generation, mathematics, logic, multilingual support, and function calling, and has been optimized to be more cautious and critical in its responses to minimize "hallucinations".

- The model is available through several platforms. Its weights can be downloaded from Hugging Face under the Mistral Research License for non-commercial use, while self-hosted commercial use requires a Mistral Commercial License.

French AI company Mistral AI has released Large 2, a new language model that aims to deliver performance similar to Meta's just-released Llama 3.1 405B while being more efficient.

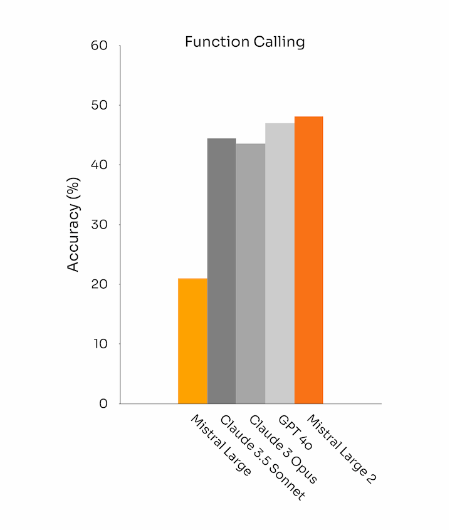

Mistral Large 2, the second generation of Mistral AI's flagship model, boasts significant improvements in code generation, mathematics, logic, multi-language support, and function calling over its predecessor.

Large 2 has a 128,000-token context window and supports dozens of languages, including French, German, Spanish, Italian, Portuguese, Arabic, Hindi, Russian, Chinese, Japanese, and Korean. It also handles more than 80 programming languages, such as Python, Java, C, C++, JavaScript, and Bash.

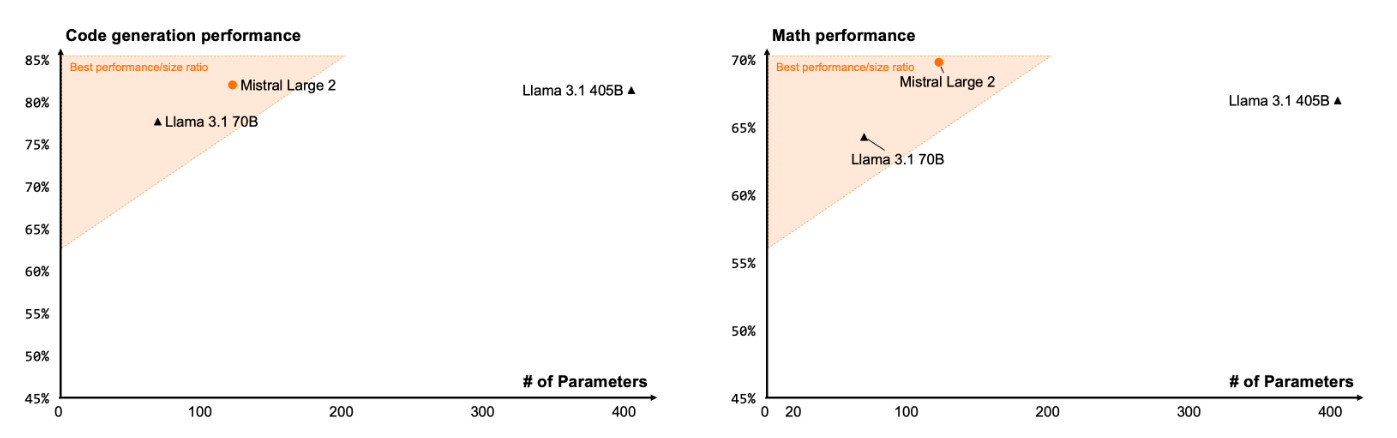

Mistral says Large 2 sets new standards in "performance/cost of serving ratio." On the widely cited Massive Multitask Language Understanding (MMLU) benchmark, the pre-trained version achieves 84.0% accuracy, setting a record "on the performance/cost Pareto front of open models".
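A "Pareto front" here means the set of models for which no other model is both cheaper to serve and more accurate. The short Python sketch below shows how such a front can be computed from (cost, score) pairs. The model names and all numbers are hypothetical placeholders, not measured results, and this is a generic illustration rather than Mistral's own methodology.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    cost: float   # hypothetical serving cost (lower is better)
    score: float  # benchmark accuracy in percent (higher is better)


def dominates(a: Model, b: Model) -> bool:
    """True if a is at least as good as b on both axes and strictly better on one."""
    no_worse = a.cost <= b.cost and a.score >= b.score
    strictly_better = a.cost < b.cost or a.score > b.score
    return no_worse and strictly_better


def pareto_front(models: list[Model]) -> list[Model]:
    """Return the models that no other model dominates, sorted by cost."""
    front = [m for m in models if not any(dominates(o, m) for o in models)]
    return sorted(front, key=lambda m: m.cost)


# Purely illustrative numbers, not real benchmark or pricing data.
models = [
    Model("model_a", cost=1.0, score=70.0),
    Model("model_b", cost=2.0, score=80.0),
    Model("model_c", cost=3.0, score=78.0),  # dominated: model_b is cheaper and better
    Model("model_d", cost=5.0, score=85.0),
]

for m in pareto_front(models):
    print(m.name, m.cost, m.score)
```

A model "sets a record on the Pareto front" when adding it pushes this frontier outward, i.e. no existing open model beats it on both cost and accuracy at once.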

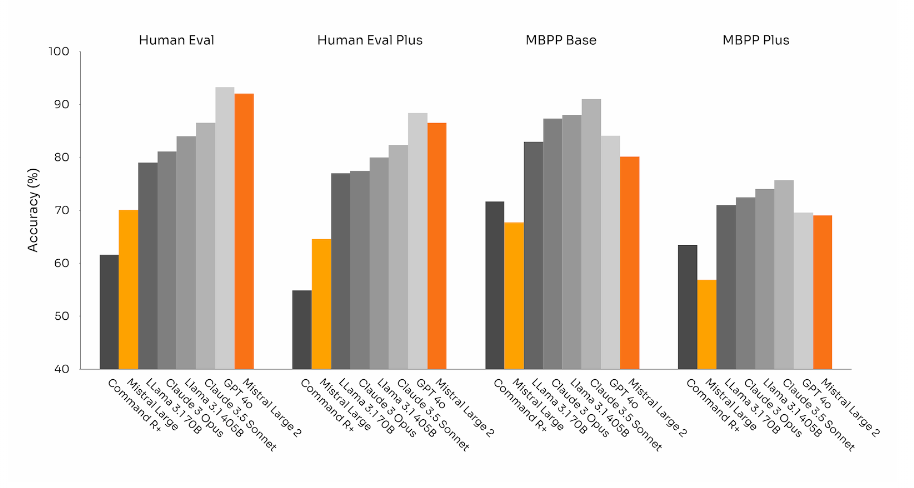

For coding tasks, Large 2 significantly outperforms its predecessor and rivals leading models such as GPT-4o, Claude 3.5 Sonnet, and Llama 3.1 405B.

Notably, it does so with less than a third of the parameters of Llama 3.1 405B (123B vs. 405B).

One focus of development has been to improve reasoning and minimize the model's tendency to "hallucinate" plausible-sounding but factually incorrect or irrelevant information. Large 2 has been optimized to be more cautious and critical in its responses, admitting when it cannot find a solution or lacks sufficient information to provide a confident answer.

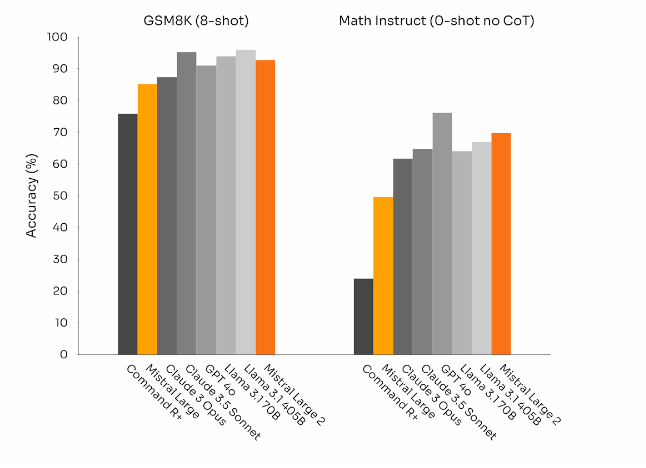

This emphasis on accuracy is reflected in its improved performance on math tasks, the company says, though it doesn't set records in this area.

The model also features improved function calling and information retrieval capabilities, as it has been trained to reliably execute both parallel and sequential function calls. This should allow Large 2 to serve as the foundation for complex business applications, Mistral says.
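The difference between parallel and sequential function calls can be illustrated with a small dispatcher. In the Python sketch below, the tools (`get_weather`, `get_exchange_rate`, `convert`) and the `(name, arguments)` call format are hypothetical stand-ins, not Mistral's API or tool-call schema. Parallel calls are independent and can run concurrently; sequential calls depend on an earlier result, which in a real agent loop the model would receive before emitting the next call.

```python
import asyncio


# Hypothetical tools; a real application would call external services here.
async def get_weather(city: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for network latency
    return f"sunny in {city}"


async def get_exchange_rate(base: str, quote: str) -> float:
    await asyncio.sleep(0.01)
    return 1.1 if (base, quote) == ("EUR", "USD") else 1.0  # made-up rate


async def convert(amount: float, rate: float) -> float:
    return round(amount * rate, 2)


TOOLS = {
    "get_weather": get_weather,
    "get_exchange_rate": get_exchange_rate,
    "convert": convert,
}


async def run_parallel(calls: list[tuple[str, dict]]) -> list:
    """Independent calls requested in one turn: execute them concurrently."""
    return await asyncio.gather(*(TOOLS[name](**args) for name, args in calls))


async def run_sequential() -> float:
    """Dependent calls: the second call needs the first call's result."""
    rate = await TOOLS["get_exchange_rate"](base="EUR", quote="USD")
    return await TOOLS["convert"](amount=100.0, rate=rate)


async def main():
    parallel = await run_parallel([
        ("get_weather", {"city": "Paris"}),
        ("get_weather", {"city": "Berlin"}),
    ])
    sequential = await run_sequential()
    return parallel, sequential


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Reliable function calling means the model emits well-formed calls with correct arguments in both patterns, so the surrounding application can dispatch them as above.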

Mistral Large 2 is now available through the Mistral platform, Azure AI Studio, Amazon Bedrock, IBM watsonx.ai, and Google Vertex AI.

The weights for the instruction-tuned model are available for download (228 GB) on Hugging Face under the Mistral Research License, which permits use and modification for research and non-commercial purposes.

Self-hosted commercial use requires a Mistral Commercial License. With 123 billion parameters, the model is designed for single-node inference in long-context applications.
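The single-node claim can be sanity-checked with back-of-the-envelope arithmetic. The Python sketch below estimates the memory needed for the weights alone at several precisions. The bytes-per-parameter table is standard, but the example node (8 GPUs with 80 GB each) and the 80% headroom factor are our assumptions, not Mistral's figures, and the KV cache needed for long contexts is not modeled. At 16 bits per weight the estimate comes to about 246 GB (roughly 229 GiB), in the same ballpark as the 228 GB download.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_node(num_params: float, dtype: str, gpus_per_node: int,
                 gb_per_gpu: float, headroom: float = 0.8) -> bool:
    """Rough check: weights must fit in a fraction of total node memory,
    leaving the rest for KV cache and activations (headroom is an assumption)."""
    return weight_memory_gb(num_params, dtype) <= headroom * gpus_per_node * gb_per_gpu


LARGE_2_PARAMS = 123e9  # parameter count stated by Mistral

for dtype in ("bf16", "int8", "int4"):
    gb = weight_memory_gb(LARGE_2_PARAMS, dtype)
    print(f"{dtype}: {gb:.1f} GB ({gb * 1e9 / 2**30:.1f} GiB)")

# Hypothetical node: 8 GPUs with 80 GB each.
print(fits_on_node(LARGE_2_PARAMS, "bf16", gpus_per_node=8, gb_per_gpu=80))
```

By the same arithmetic, a 405B-parameter model needs about 810 GB for 16-bit weights alone, which would not fit within the 512 GB budget of the hypothetical node above.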

Mistral Large 2 heats up LLM competition as market turns into a red ocean

The rapid release of Mistral Large 2, just one day after Llama 3.1 405B, indicates the LLM market is becoming increasingly competitive. More and more models with similar performance are vying for the same customers and applications. For months, the focus has been on efficiency and pricing, with costs dropping sharply while development expenses remain high.

The key question is whether a model provider can break out of this intense competition by significantly upgrading reasoning capabilities, expanding existing business areas, and opening new ones. If not, the young market may soon face a serious test in living up to the high valuations set by investors.
