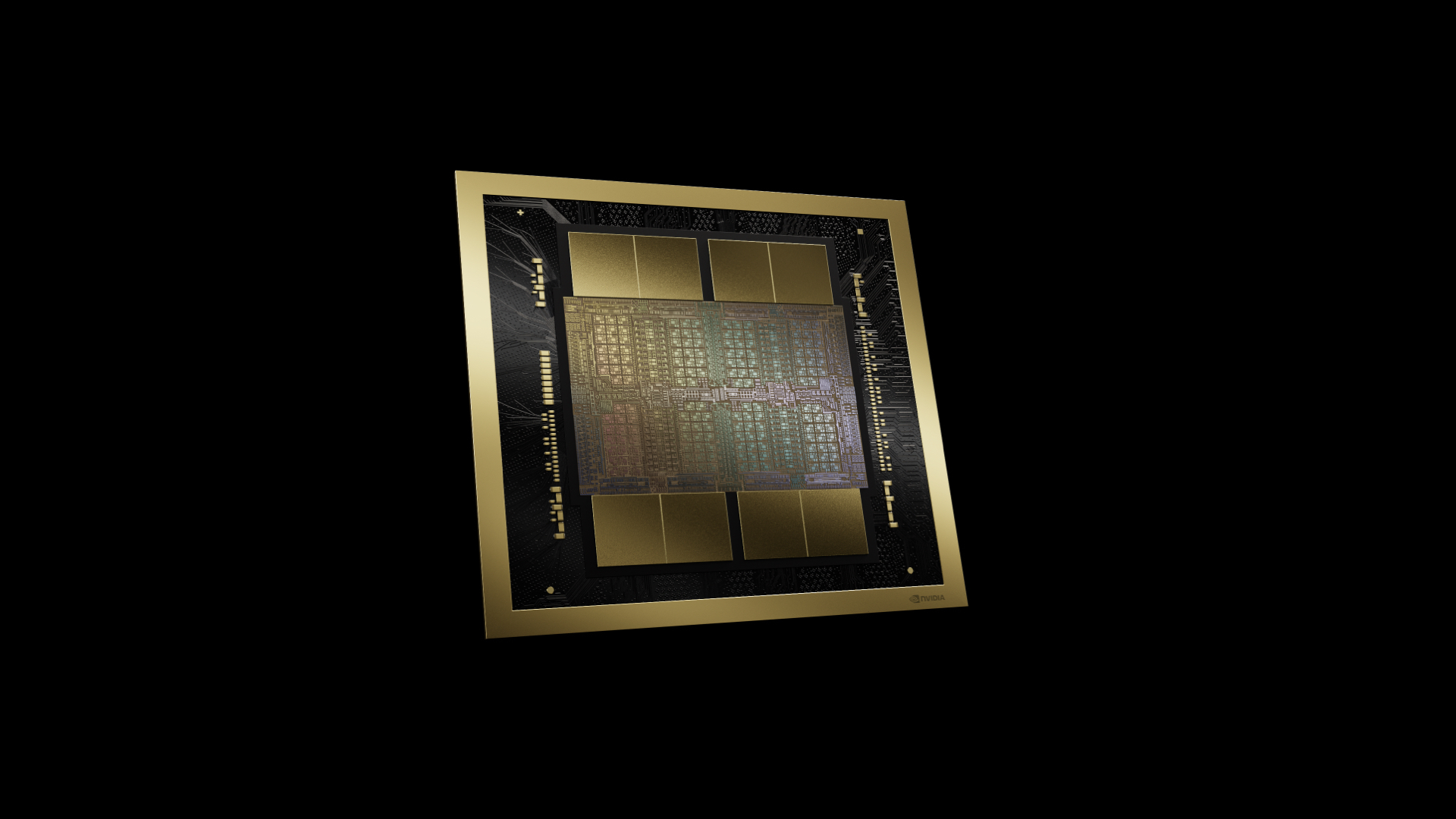

AI inference benchmark: Nvidia dominates with Blackwell architecture

Nvidia has announced record-breaking results for AI inference in the MLPerf Inference v4.1 benchmark.

The company's new Blackwell architecture delivers up to four times more performance per GPU than the H100 when running the Llama 2 70B model.

This improvement is largely due to lower precision: for the first time, Nvidia used the new FP4 precision supported by Blackwell's Transformer Engine. The H200 GPU with HBM3e memory, which was also showcased, achieves up to 1.5 times the performance of the H100.

While companies are still waiting for deliveries of various B100 variants, Nvidia has already announced future products: "Blackwell Ultra" (B200) is set to launch in 2025, followed by "Rubin" (R100) in 2026 and "Rubin Ultra" in 2027.

AMD's competing MI300X GPU is already available and was included in the MLPerf benchmark for the first time, with mixed results.

Nvidia has led key AI hardware benchmark for years

In the MLPerf benchmark, tech companies compete to show off their best AI hardware. MLCommons runs this test to compare different chip designs and systems fairly. They release results each year for both AI training and inference tasks.

Nvidia has been on top for years now, and it shows in their skyrocketing profits. No other chipmaker has cashed in on the AI craze quite like them. We still don't know if throwing more computing power at AI will fix its basic problems. Take "AI bullshit," where chatbots sound smart but spout wrong answers. New hardware coming out soon might help researchers test if raw power alone can solve these issues.
