The Instinct MI300X is AMD's answer to Nvidia's AI chips. According to CEO Lisa Su, the product has generated a lot of interest. But Nvidia came prepared.

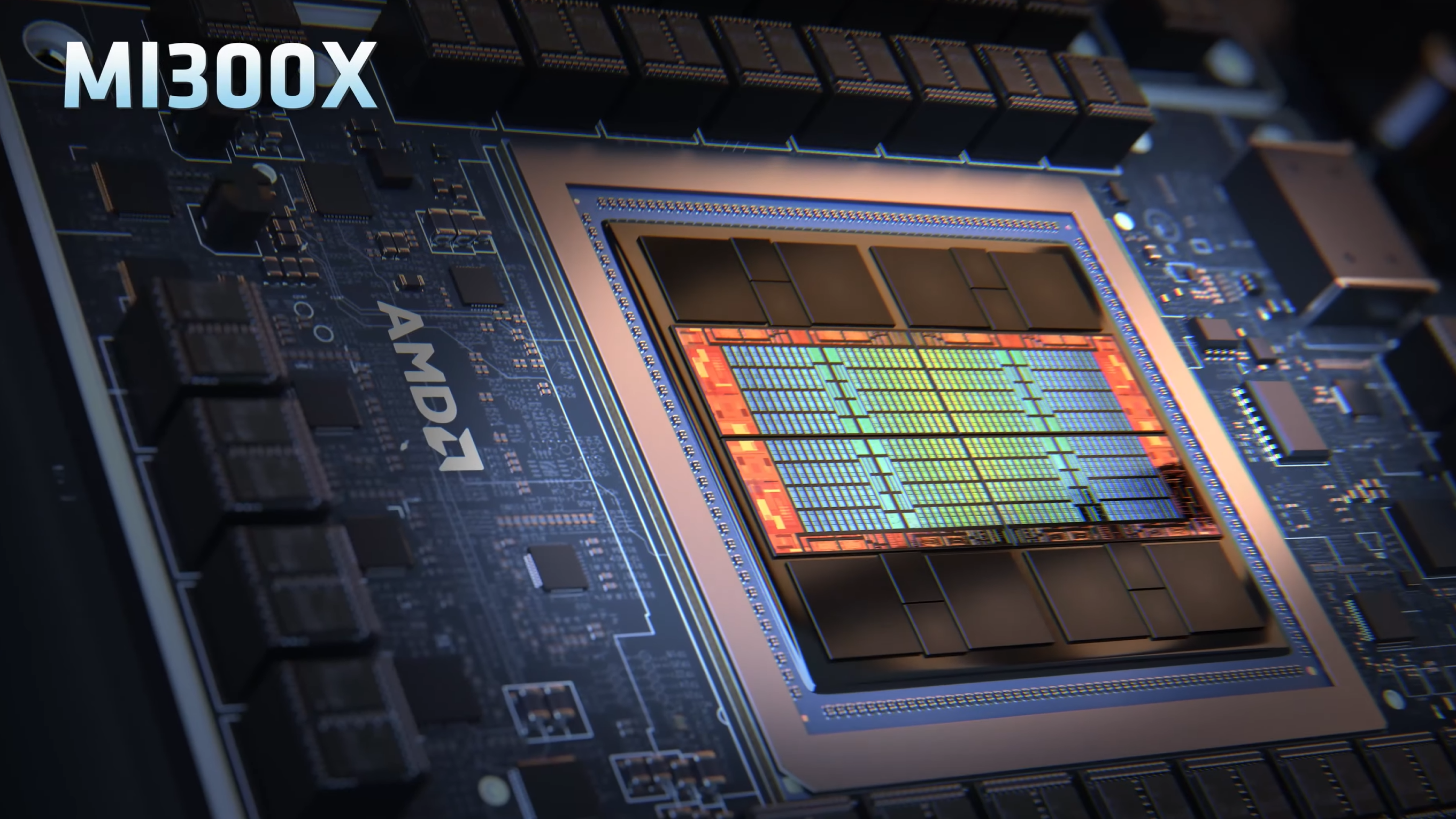

At its Advancing AI event, AMD unveiled its latest AI chips: the Instinct MI300X GPU and the MI300A APU. AMD says the MI300 series is built with advanced manufacturing technologies, including 3D packaging, and claims it outperforms Nvidia's GPUs on various AI workloads. According to the company, the Instinct MI300X delivers up to 1.6 times the performance of Nvidia's H100 on AI inference workloads and comparable performance on training workloads. The accelerator also carries 192 gigabytes of HBM3 memory with a bandwidth of 5.3 TB/s. AMD bills the MI300A, with 128 GB of memory, as the world's first data center APU and positions it against Nvidia's Grace Hopper Superchip.

The new processors are already shipping to OEM partners, and AMD stressed that it has the production capacity to meet demand. Given the current GPU shortage, that could be a competitive advantage.

H200 GPU: Nvidia is ready for competition

Besides Intel, whose latest Gaudi chips keep up with Nvidia's H100 in some benchmarks, Nvidia now faces a real competitor in AMD. Whether the performance shown in AMD's benchmarks holds up in practice remains to be seen. Nvidia likely has a head start here: the company has been equipping numerous supercomputers with its AI hardware for years and has repeatedly shown that software optimizations alone can deliver significant performance gains.

Nvidia has also already introduced a new GPU, the H200, and a new version of its Grace Hopper Superchip. Thanks to faster HBM3e memory, with 141 gigabytes of capacity and a bandwidth of 4.8 terabytes per second, the H200 should nearly double AI inference performance compared to the H100.

Systems and cloud instances with the H200, including HGX H200 systems, are expected to become available across various data center environments from the second quarter of 2024. From 2024, the H200 will also be used in GH200 supercomputers, including the JUPITER supercomputer at Forschungszentrum Jülich. Its successor, the B100, is also scheduled for 2024.

AMD focuses on lower prices and improved software

Although AMD did not reveal the price of the MI300X, CEO Lisa Su emphasized that the chip would have to cost less than Nvidia's offering to convince customers to buy it. Price aside, persuading companies that have relied on Nvidia to invest time and money in another GPU vendor is a challenge of its own. Su acknowledged: "It takes work to adopt AMD." To lower that barrier, AMD has improved its ROCm software suite, its counterpart to Nvidia's CUDA software.

Given the high prices and GPU shortages, interest appears strong: AMD announced that some of the biggest GPU buyers, including Meta and Microsoft, are already interested in the MI300X. Meta plans to use the GPUs for AI inference workloads such as processing AI stickers, image processing, and running its assistant. Microsoft CTO Kevin Scott said the company will offer access to MI300X chips through its Azure cloud service. Oracle's cloud will also use the chips, and OpenAI announced that Triton, one of its software tools used in AI research to tap into the chips' capabilities, will support AMD GPUs.

Will this be enough to catch up with industry leader Nvidia? AMD forecasts total revenue from data center GPUs of around $2 billion in 2024. By comparison, Nvidia reported more than $14 billion in data center sales last quarter alone. Su pointed out that AMD does not necessarily need to beat Nvidia to succeed, given the size of the AI accelerator market: "We believe it could be $400 billion-plus in 2027. And we could get a nice piece of that."