Multimodal AI model Aria is open source and beats many competitors

The US-based start-up Rhymes AI has released Aria, which it claims is the world's first open-source, multimodal mixture-of-experts model. Aria is said to be on a par with or better than open and commercial specialized models of comparable size.

Rhymes AI has released Aria, its first AI model, as open-source software. According to the company, Aria is the world's first open-source, multimodal mixture-of-experts (MoE) model.

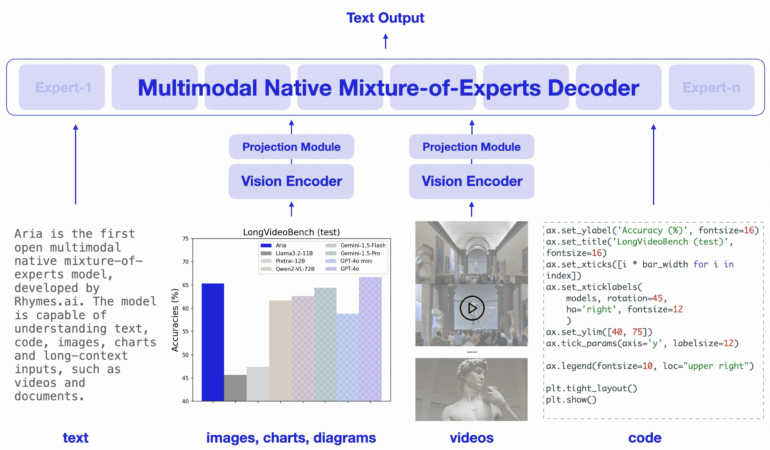

Rhymes AI calls Aria natively multimodal and defines such a model as one that matches or exceeds the understanding capabilities of specialized models of comparable capacity across multiple input modalities such as text, code, images, and video.

MoE models replace the feed-forward layers of a transformer with multiple specialized experts. For each input token, a router module selects a subset of the experts, which reduces the number of active parameters per token and increases computational efficiency. Well-known representatives of this class include Mixtral 8x7B and DeepSeek-V2. GPT-4 is also widely believed to use this architecture.
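The routing step described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration of generic top-k routing in the style of Mixtral (a softmax over the logits of the selected experts only), not Aria's actual router: the function names, the toy experts, the router weights, and `top_k=2` are all assumptions made for the example.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    router_weights: List[Vector],
    experts: List[Expert],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top_k experts and mix their outputs (hypothetical sketch)."""
    # Router: one logit per expert, the dot product of the token with that expert's router row.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Keep only the top_k highest-scoring experts for this token.
    selected = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Softmax over the selected logits gives the mixing weights (gates).
    gates = softmax([logits[i] for i in selected])
    out = [0.0] * len(token)
    for gate, i in zip(gates, selected):
        expert_out = experts[i](token)  # only the selected experts are evaluated
        out = [o + gate * y for o, y in zip(out, expert_out)]
    return out, selected


# Hypothetical toy setup: 8 experts, expert i simply scales its input by (i + 1).
experts = [lambda v, s=i + 1: [s * x for x in v] for i in range(8)]
# Hypothetical router rows chosen so that expert i scores i on this token.
router = [[float(i), 0.0, 0.0, 0.0] for i in range(8)]
out, selected = moe_forward([1.0, 0.0, 0.0, 0.0], router, experts, top_k=2)
print(selected)  # [7, 6]
print(out)
```

Only two of the eight toy experts run for this token, which is the source of the efficiency gain. Real MoE layers use learned feed-forward networks as experts and route whole batches of tokens in parallel.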

Aria's MoE decoder has a total of 24.9 billion parameters, of which 3.5 billion are activated per text token. A lightweight visual encoder with 438 million parameters converts visual inputs of variable length, size, and aspect ratio into visual tokens. Aria has a multimodal context window of 64,000 tokens.
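A quick back-of-the-envelope calculation shows what sparse activation means in practice: only roughly one seventh of the decoder's weights are used for each text token, yet all of them still have to be held in memory. This sketch assumes 16-bit weights with no quantization and ignores the visual encoder, activations, and the KV cache; the function names are hypothetical.

```python
# Parameter counts are from the article; BYTES_PER_PARAM is an assumption.
TOTAL_PARAMS = 24.9e9   # total MoE decoder parameters
ACTIVE_PARAMS = 3.5e9   # parameters activated per text token
BYTES_PER_PARAM = 2     # assumption: bf16/fp16 weights, no quantization


def active_fraction(active: float, total: float) -> float:
    """Share of the decoder's parameters used for a single text token."""
    return active / total


def weight_memory_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


frac = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
mem = weight_memory_gb(TOTAL_PARAMS, BYTES_PER_PARAM)
print(f"active per text token: {frac:.1%}")       # active per text token: 14.1%
print(f"decoder weights (16-bit): {mem:.1f} GB")  # decoder weights (16-bit): 49.8 GB
```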

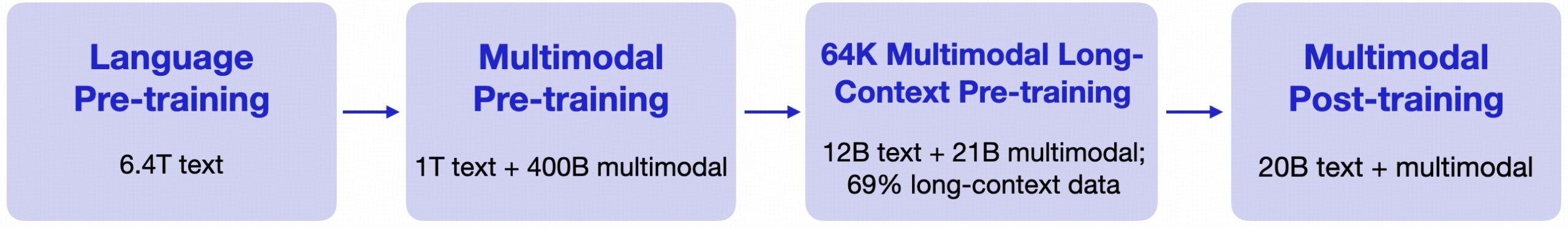

Rhymes AI trained Aria in four phases: first on text data only, then on a mixture of text and multimodal data, followed by training on long sequences, and finally a fine-tuning phase.

In total, Aria was pre-trained on 6.4 trillion text tokens and 400 billion multimodal tokens. The data comes from well-known datasets such as Common Crawl and LAION, among others, and was partly enriched with synthetic data.
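Put in proportion, the two token counts above mean that multimodal data makes up only a small slice of the pre-training mix. The sketch below uses only the figures given in the article; the helper name `token_mix` is hypothetical, and how the tokens were distributed across the four phases is not stated.

```python
from typing import Tuple

TEXT_TOKENS = 6.4e12       # pre-training text tokens (from the article)
MULTIMODAL_TOKENS = 400e9  # pre-training multimodal tokens (from the article)


def token_mix(text: float, multimodal: float) -> Tuple[float, float]:
    """Return the total token count and the multimodal share of it."""
    total = text + multimodal
    return total, multimodal / total


total, share = token_mix(TEXT_TOKENS, MULTIMODAL_TOKENS)
print(f"total: {total / 1e12:.1f}T tokens")  # total: 6.8T tokens
print(f"multimodal share: {share:.1%}")      # multimodal share: 5.9%
```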

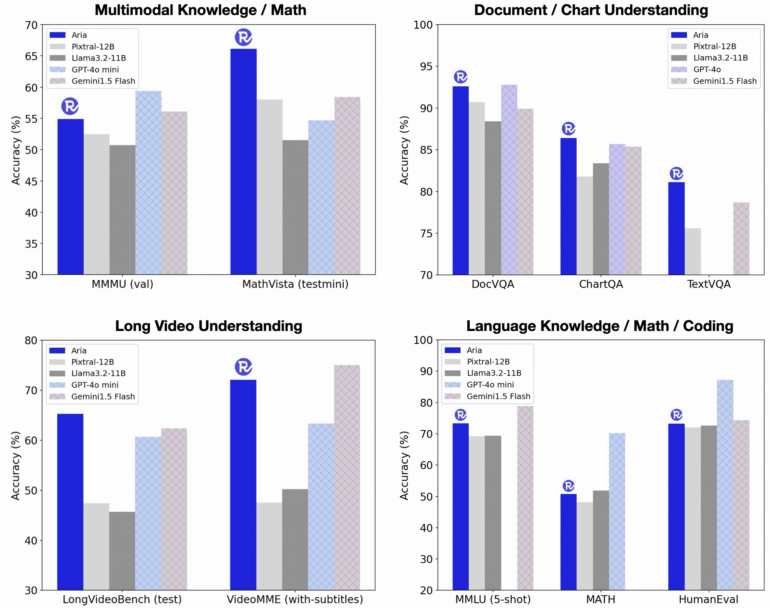

According to the benchmark results, Aria outperforms models such as Pixtral-12B and Llama-3.2-11B on a range of multimodal, language, and coding tasks, while keeping inference costs lower thanks to its smaller number of activated parameters. Rhymes AI also says Aria can keep up with proprietary models such as GPT-4o and Gemini 1.5 on various multimodal tasks.
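The cost argument can be made concrete with a common rule of thumb: a transformer forward pass costs roughly two floating-point operations per activated parameter per token. The sketch below applies it to Aria's 3.5 billion activated parameters and, as an assumption, to a dense 12-billion-parameter decoder standing in for Pixtral-12B (size taken from the model's name). It is an estimate only: attention over long contexts, the vision encoder, router overhead, and hardware utilization are all ignored.

```python
def forward_flops_per_token(active_params: float) -> float:
    """Rule of thumb: ~2 FLOPs (one multiply, one add) per activated parameter per token."""
    return 2.0 * active_params


# Activated parameters per text token. Aria's value is from the article; the
# Pixtral-12B value is assumed from its name (dense, so all weights are active).
ACTIVE = {
    "Aria (MoE)": 3.5e9,
    "Pixtral-12B (dense)": 12e9,
}

for name, params in ACTIVE.items():
    print(f"{name}: ~{forward_flops_per_token(params) / 1e9:.0f} GFLOPs per token")

ratio = ACTIVE["Pixtral-12B (dense)"] / ACTIVE["Aria (MoE)"]
print(f"dense-to-MoE compute ratio: ~{ratio:.1f}x")
```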

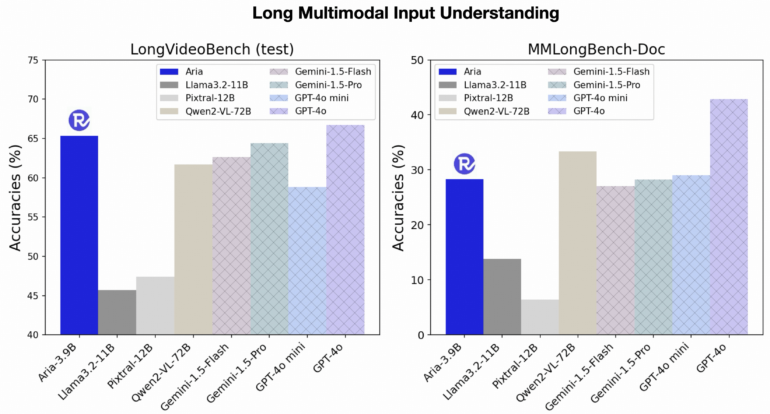

According to Rhymes AI, Aria also performs well on long multimodal inputs such as subtitled videos or multi-page documents. Unlike other open-source models, Aria is said to understand long videos better than GPT-4o mini and long documents better than Gemini 1.5 Flash.

Rhymes AI partners with AMD

Rhymes AI has made the source code of Aria available on GitHub under the Apache 2.0 license, which allows both academic and commercial use. To facilitate adoption, the company has also released a training framework that allows Aria to be fine-tuned on a variety of data sources and formats using a single GPU.

Rhymes AI was founded by former Google AI experts. Like several other up-and-coming AI companies, it aims to develop powerful models that are accessible to everyone. The company has raised 30 million US dollars in seed funding.

Rhymes AI has also partnered with chipmaker AMD to optimize its models' performance on AMD hardware. At AMD's "Advancing AI 2024" conference, Rhymes AI presented BeaGo, a consumer search app that runs on AMD's MI300X accelerator and, according to Rhymes AI, delivers comprehensive AI search results for text and images.

Video: Rhymes AI

In a video, Rhymes AI compares BeaGo with Perplexity and Gemini. The app, currently available free of charge for iOS and Android, appears to support only text and English voice input in addition to its search engine connection. It also offers AI summaries of current news with links to various online articles.