Systematic use of GenAI cognitively overwhelms many people, Microsoft Research finds

Key Points

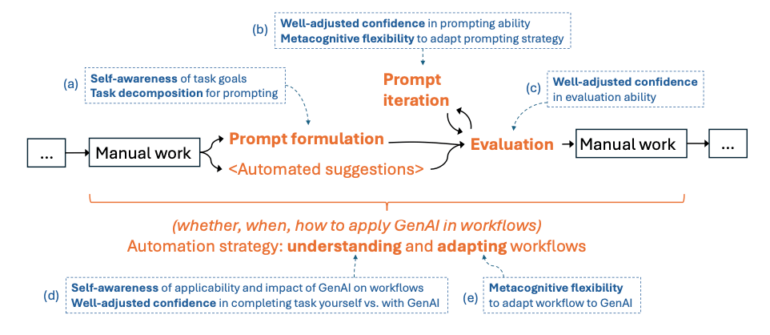

- A Microsoft Research study finds that effectively using generative AI requires strong metacognitive skills, similar to those of a manager delegating tasks to a team, such as clearly articulating goals, breaking them down into communicable tasks, evaluating outputs, and adjusting plans accordingly.

- The study identifies three key areas where metacognition is crucial: prompting (formulating clear instructions for AI), evaluating AI outputs (requiring appropriate confidence in one's evaluation abilities), and deciding whether and how to automate tasks (demanding self-awareness about AI's suitability for one's workflow).

- The researchers suggest strategies to enhance AI interactions, such as better planning through "Think Aloud," active self-evaluation, and strategic self-management. They also propose interactive chat interface improvements, including integrated planning tools, self-assessment prompts, and workflow management features for platforms like ChatGPT, Microsoft Copilot, and GitHub Copilot.

A new Microsoft Research study shows that using generative AI systems effectively requires strong metacognitive abilities—our capacity to monitor and control our own thoughts. The researchers found that many people struggle with the cognitive demands of professional AI use and proposed specific improvements.

The professional and systematic use of generative AI systems cognitively overwhelms many people. According to a new study by Microsoft Research, this is not only due to the complexity of the systems themselves, but above all to the high demands placed on our metacognition.

"The metacognitive demands of working with GenAI systems parallel those of a manager delegating tasks to a team," the researchers write. "A manager needs to clearly understand and formulate their goals, break down those goals into communicable tasks, confidently assess the quality of the team’s output, and adjust plans accordingly along the way."

Three main cognitive challenges

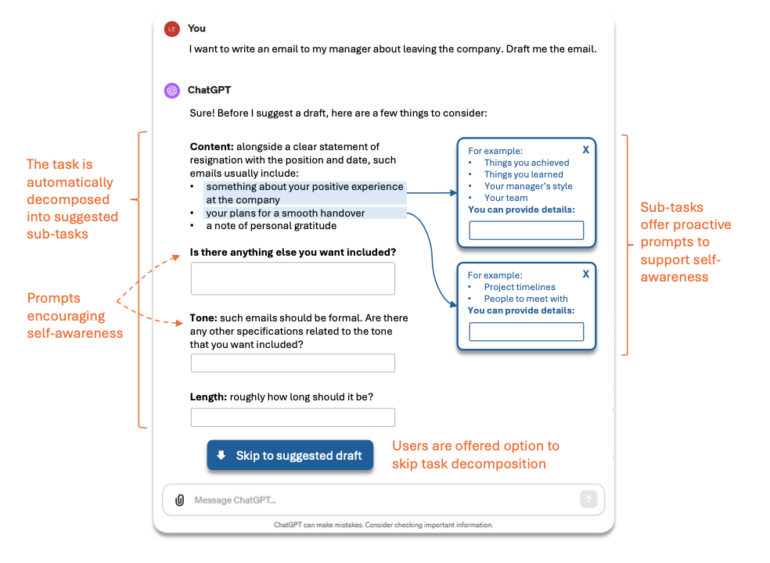

First, in prompting (formulating instructions for AI). Users need to clearly identify their goals and break them into subtasks. Many aspects that remain implicit in manual work, such as the desired tone of an email, must be explicitly communicated to the AI. This becomes particularly evident in systematic work with generative models that goes beyond simple chat exchanges. Here, individual work steps must first be thought through, put in sequence, and translated into instructions for the model.
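One way to picture this planning step is as a small data structure that forces the goal, the tone, and the ordered subtasks to be written down before anything is sent to a model. The following Python sketch is purely illustrative: `PromptPlan`, its fields, and the example email task are hypothetical names chosen here, not something taken from the study or from any AI product.

```python
from dataclasses import dataclass, field


@dataclass
class PromptPlan:
    """Hypothetical container for what a prompt should state explicitly."""
    goal: str
    steps: list[str] = field(default_factory=list)
    tone: str = "neutral"  # implicit in manual work, explicit for the model

    def render(self) -> str:
        """Turn the plan into one instruction string with numbered steps."""
        lines = [f"Goal: {self.goal}", f"Tone: {self.tone}", "Steps:"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        return "\n".join(lines)


# Assumed example task: the email reply mentioned in the text.
plan = PromptPlan(
    goal="Reply to a customer asking about a delayed order",
    steps=[
        "Apologize for the delay",
        "Explain the new delivery date",
        "Offer a contact for follow-up questions",
    ],
    tone="friendly but professional",
)
print(plan.render())
```

Writing the plan down first makes gaps visible early: an empty `steps` list or a default `tone` is a hint that part of the task is still only in the user's head.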

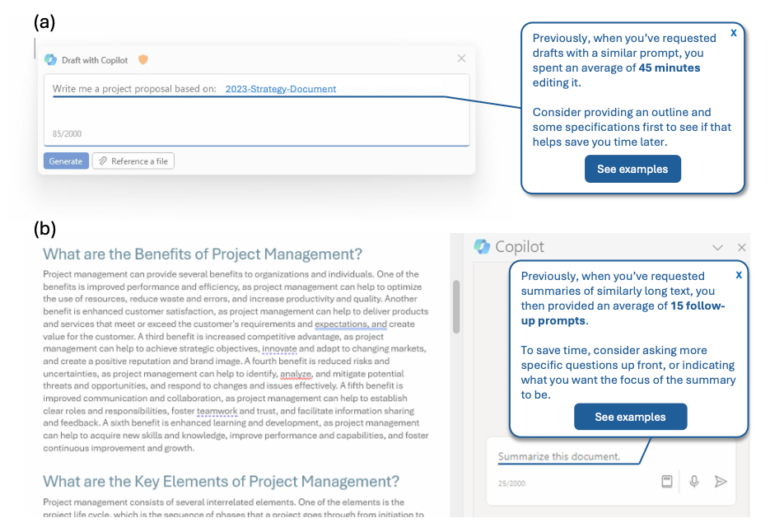

Second, in evaluating AI outputs. Unlike search engine results, GenAI responses aren't deterministic and can vary even with identical queries. This requires "well-adjusted confidence" in one's evaluation abilities. Other studies show that domain expertise makes it easier to assess AI output quality quickly and accurately.
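Why identical queries can produce different answers is easier to see in a toy example. The Python sketch below assumes a drastically simplified picture: three made-up candidate openings with invented scores, from which one is either picked greedily or sampled at random. It does not describe how any particular product is implemented, and `sample_token` is a hypothetical helper written for this illustration.

```python
import math
import random


def sample_token(scores: dict[str, float], temperature: float,
                 rng: random.Random) -> str:
    """Pick one candidate; temperature 0 always takes the top-scoring one."""
    if temperature == 0:
        return max(scores, key=scores.get)
    # Softmax with temperature turns raw scores into sampling weights.
    weights = [math.exp(s / temperature) for s in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]


# Invented scores for three possible openings of the same reply.
scores = {"Dear customer,": 2.0, "Hi there,": 1.5, "Hello,": 1.0}
rng = random.Random(0)

# Same input 50 times, once greedy and once with random sampling.
greedy = {sample_token(scores, 0, rng) for _ in range(50)}
sampled = {sample_token(scores, 1.0, rng) for _ in range(50)}
print("distinct greedy outputs:", len(greedy))
print("distinct sampled outputs:", len(sampled))
```

The greedy variant always returns the same opening, while the sampled variant returns several different ones for the same input, which is why each response has to be evaluated on its own.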

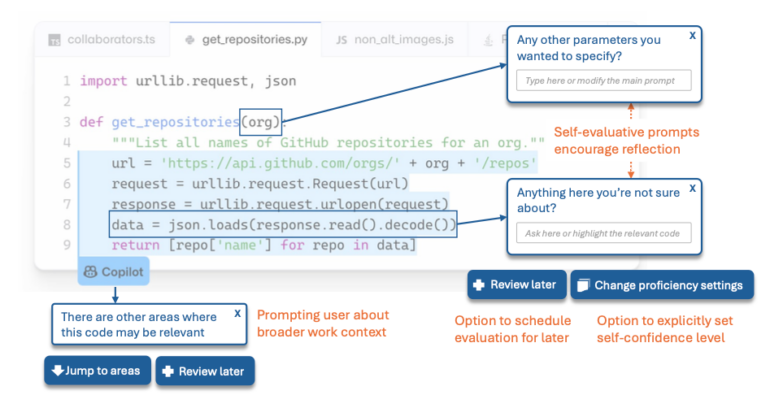

Third, in deciding whether and how to automate tasks. This demands self-awareness about AI's suitability for one's workflow and flexibility in adapting work processes.

Practical improvements

The researchers suggest several strategies to enhance AI interactions:

- Better planning through "Think Aloud": Users should verbalize or write down their thoughts while using AI. This helps clarify goals and break tasks into systematic steps.

- Active self-evaluation: Take time for reflection after each AI interaction by asking:

  - Was my AI instruction precise enough?

  - How much time did I spend revising AI output?

  - Would another approach have been more efficient?

- Strategic self-management: Users should define distinct work modes:

  - A "thinking mode" for careful prompt planning

  - A "reflection mode" for reconsidering decisions

  - An "exploration mode" for creative AI experimentation

"GenAI systems, with their model flexibility and generality, have the potential to adaptively nudge this kind of self-evaluation at key moments during user workflows, effectively acting as a coach or guide for users," the researchers write.

Interface design suggestions

The authors propose several approaches for more interactive chat interfaces to reduce users' metacognitive workload. These include integrated planning tools, self-assessment prompts, and workflow management features for platforms like ChatGPT, Microsoft Copilot, and GitHub Copilot.

Current usage issues

Usage data reveals significant room for improvement: 26 percent of surveyed programmers avoid tools like GitHub Copilot due to disruptive AI suggestions, while 38 percent cite time-consuming debugging of generated code. Only 20 to 30 percent of Copilot suggestions are accepted by users.

The researchers note that implementing these improvements requires balancing several factors: interventions should adapt to expertise and workflow, support should gradually decrease with experience, and there must be equilibrium between assistance and cognitive load.

New ways of working

Intensive planning, preparation, and segmentation of tasks before handing them over to generative AI systems represent a completely new way of working for most people. Working out at which point, to what extent, and at what quality systematic prompts yield the desired results is not easy and requires guidance and training. From my own experience, I can report that anyone who overcomes the initial mental hurdle of working professionally with generative AI opens the door to a new world of working in tandem.
