Figure AI shows robots that can finally put groceries away in the fridge

Key Points

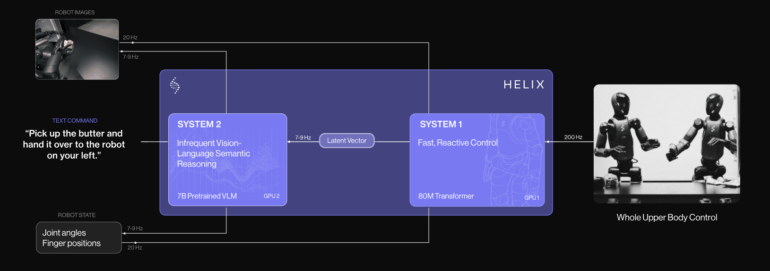

- Figure has introduced Helix, an AI system designed to control humanoid robots in real time using voice commands. It combines a multimodal language model with 7 billion parameters, which acts as the "brain", with a second model of 80 million parameters that translates the commands into robot movements.

- Helix is said to control 35 degrees of freedom simultaneously, from finger movements to head and torso control. In demonstrations, robots respond to voice commands and identify and grasp objects. Two robots work together in a show kitchen without having been trained on the specific objects.

- Helix was trained on 500 hours of data. The system runs on graphics processors embedded in the robots. Figure CEO Brett Adcock sees this as a fundamental breakthrough for scaling robots in the home, since Helix can respond flexibly to new situations. Whether it is suitable for practical use remains to be seen.

Figure, a robotics startup, has developed a new AI system called Helix that allows humanoid robots to perform complex movements through voice commands. The system aims to handle unfamiliar objects without requiring specific training for each item.

The system combines two distinct AI components. The first is a 7-billion-parameter multimodal language model that processes speech and visual information at 7-9 Hz, serving as the robot's "brain." The second component, an 80-million-parameter AI, converts the language model's instructions into precise robot movements at 200 Hz.
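Figure has not published how the two components exchange information; the article only gives their rates. The arithmetic alone is instructive, though: at 200 Hz control and a 7 to 9 Hz "brain", each output of the language model has to cover roughly 22 to 29 motor commands. The Python sketch below illustrates that ratio under a loudly hypothetical assumption that the fast model always acts on the most recent output of the slow one. The function names `ticks_per_update` and `simulate` are invented for illustration and are not part of any Figure API.

```python
from collections import Counter

# Rates taken from the article; everything else is a hypothetical illustration.
SLOW_HZ_RANGE = (7, 9)  # multimodal "brain" model update rate (lower and upper bound)
FAST_HZ = 200           # motor-control model rate


def ticks_per_update(slow_hz: int, fast_hz: int) -> float:
    """Average number of fast control steps that reuse one slow-model output."""
    return fast_hz / slow_hz


def simulate(slow_hz: int, fast_hz: int, seconds: int = 1) -> Counter:
    """Count how many fast ticks consume each slow-model output.

    Assumes (hypothetically) that the fast model never waits for a fresh
    output and simply uses the latest one available at each tick.
    """
    usage = Counter()
    for tick in range(fast_hz * seconds):
        # Index of the latest slow update issued at or before this fast tick,
        # i.e. floor(tick / fast_hz * slow_hz), computed with integers.
        latest_update = tick * slow_hz // fast_hz
        usage[latest_update] += 1
    return usage


if __name__ == "__main__":
    for slow_hz in SLOW_HZ_RANGE:
        ratio = ticks_per_update(slow_hz, FAST_HZ)
        print(f"{slow_hz} Hz brain: ~{ratio:.1f} motor commands per update")
    print(dict(simulate(8, FAST_HZ)))
```

With an 8 Hz brain, for example, every language-model output is reused for 25 consecutive motor commands, which is why the fast model has to produce smooth motion on its own between updates.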

Advanced movement capabilities show promise for household tasks

Helix can simultaneously control 35 degrees of freedom, managing everything from individual finger movements to head and torso control. Figure has demonstrated the system's capabilities through videos showing robots responding to voice commands, identifying objects, and grasping them accurately.

In one particularly impressive demonstration, two robots work together in a show kitchen, placing food items into a refrigerator, all without prior training on these specific objects.

Introducing Helix, our newest AI that thinks like a human

To bring robots into homes, we need a step change in capabilities

Helix can generalize to any household item pic.twitter.com/sPzPV4B50J

- Brett Adcock (@adcock_brett) February 20, 2025

Training with limited data

The system required only 500 hours of training data, significantly less than comparable projects. It runs on embedded GPUs within the robots themselves, making commercial applications technically feasible.

Figure CEO Brett Adcock sees this development as crucial for scaling robots in household settings. Unlike traditional robots that need reprogramming for each new task, Helix adapts to new situations - though its real-world performance remains to be proven.

Strategic shifts in AI partnerships

Figure AI recently ended its collaboration with OpenAI on robot-specific AI models, despite OpenAI remaining a significant investor. On social platform X, Adcock explained that while large language models (LLMs) continue growing more powerful, they're becoming commoditized and represent "the smallest piece of the puzzle" in Figure's vision.

The company now focuses on developing its own AI models for high-speed robot control in real-world situations. Meanwhile, OpenAI appears to be rekindling its interest in robotics, recently seeking hardware engineers for a new robotics team after previously shuttering its robotics department.
