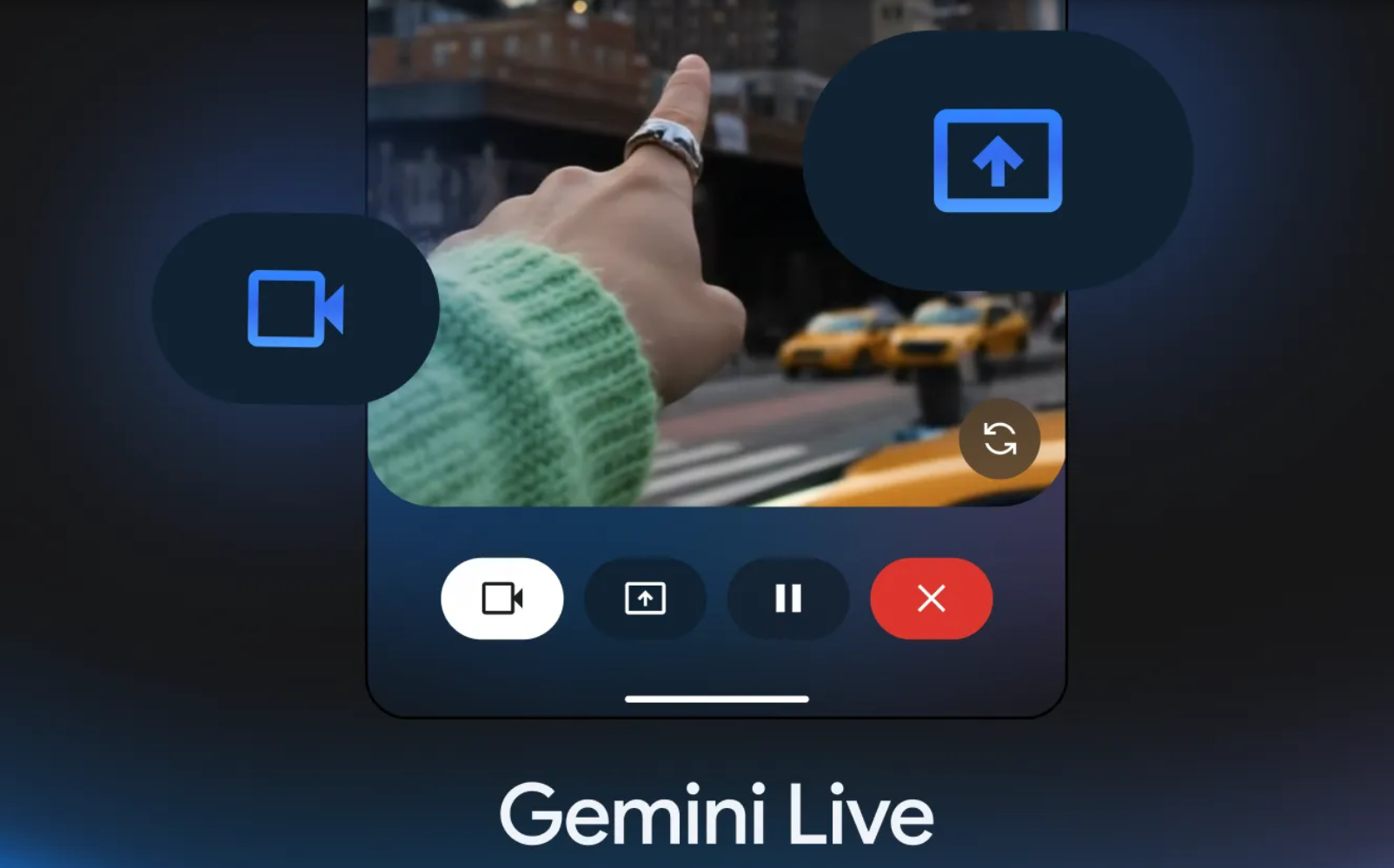

Google rolls out Gemini Live camera and screen sharing features

Update April 7, 2025:

The camera and screen sharing features announced in March for Gemini Live are now available. According to Google, the new functionality is initially available to Gemini Advanced subscribers with compatible Android devices. In addition, Google has started rolling out the update to all Gemini app users on Pixel 9 and Samsung Galaxy S25 smartphones.

The new features let users discuss with Gemini, in real time, whatever is displayed on their smartphone screen or seen through the camera. Google has suggested several use cases, such as styling recommendations, technical support, or creative advice for home decorating. One such example is using Gemini Live to troubleshoot a problem on a Google Nest device.

Original article, March 3, 2025:

Google is bringing live video analysis to its Gemini assistant. The company announced this at the Mobile World Congress.

Google is presenting AI functions for its Gemini assistant at the Mobile World Congress (MWC) in Barcelona. The company announced that subscribers to the Google One AI Premium plan for Gemini Advanced will have access to live video and screen sharing features later this month.

Gemini Live will get two major new features: live video analysis and screen sharing. Both let users share visual content with the AI assistant in real time. With live video, the content comes from the camera's view of the user's surroundings; with screen sharing, it comes from the user's own smartphone screen, so that Gemini can comment on what is shown.

The new features are initially available only on Android devices and support multiple languages. At MWC, Google will demonstrate the integration of these features on partner devices from various Android manufacturers.

AI assistants arrive in the real world

The addition of visual functions is an important step in the development of AI assistants, which are increasingly expected to act multimodally and interact with the real world.

Google's goal for 2025 is "Project Astra", a universal multimodal AI assistant that can process text, video and audio data in real time and store it in a conversational context for up to ten minutes. Astra will also be able to use Google Search, Lens and Maps.

It is not known whether Google actually plans to release Astra as a product in its own right or, more likely, will integrate the functions presented for Astra into Gemini.

With Gemini Live, Google is positioning itself against its competitor OpenAI and its ChatGPT: ChatGPT's Advanced Voice Mode has supported live video and screen sharing since December 2024.
